Technologies based on artificial intelligence are all the rage these days, both because of their stunning capabilities and the many ways they make our lives easier, and because of the uncertain future they may bring.

One particular stream of development in the field of AI is image recognition based on machine learning algorithms. It is now used in so many fields that listing them all is a challenge.

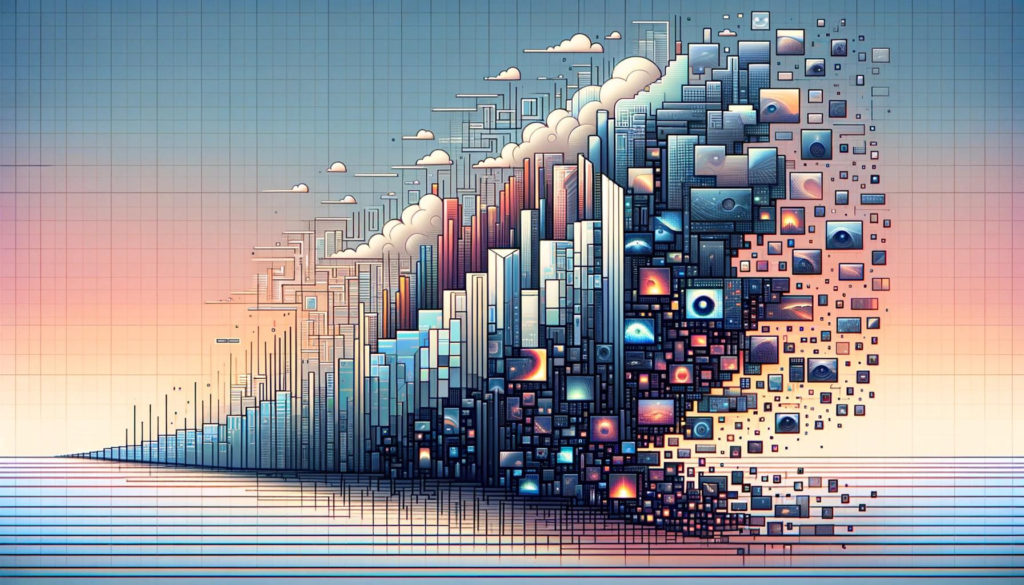

In the fast-paced digital world, image recognition powers crucial activities such as large-scale content moderation, which the exponentially growing volume of user-generated content on social platforms demands.

It’s not only that, though: image recognition is put to great use in construction, security, healthcare, e-commerce, entertainment, and numerous other fields, where it brings unprecedented gains in productivity and precision. Think also about innovations like self-driving cars and robots, all made possible by computer vision.

But how did image recognition start, and how did it evolve over the decades to reach the current levels of broad use and accuracy that sometimes even surpass the capabilities of human vision?

It all started in 1959 with a scientific paper by two neurophysiologists who were studying neurons in cats…

Let’s dive into the history of this field of AI-powered technology in the sections below.

1950s: The First Seeds of the Image Recognition Scientific Discipline

As with many other human discoveries, image recognition started out as a research interest in other fields.

In the late 1950s, two important events occurred that were not aimed at making computers see, yet proved central to developing the concept of image recognition.

In 1959, the neurophysiologists David Hubel and Torsten Wiesel published their paper “Receptive Fields of Single Neurones in the Cat’s Striate Cortex.” The paper became widely recognized because the two scientists made an important discovery while studying how visual neurons in cats respond and, in particular, how their visual cortex is organized.

Hubel and Wiesel found that the primary visual cortex has simple and complex neurons. They also discovered that the process of recognizing an image begins with the identification of simple structures, such as the edges of the items being seen. Afterward, the details are added, and the brain understands the whole complex image. Their research on cats thus became, almost by chance, a foundation for computer-based image recognition.

The second important event, which took place two years earlier in 1957, was the development of the first technology for the digital scanning of images. Russell Kirsch and a group of researchers led by him invented a scanner that could transform images into numbers so that computers could process them. This historical moment led to our current ability to handle digital images for so many different uses.

1960s and 1970s: Image Recognition Becomes an Official Academic Discipline

The 1960s were the time when image recognition took shape as a field. Artificial intelligence, and with it image recognition as a significant part, was recognized as an academic discipline and drew growing interest from the scientific community. Scientists started working on the seemingly wild idea of making computers identify and process visual data. These were the years of dreams about what AI could do, and the projections for revolutionary advancements were highly optimistic.

The name of the scientist Lawrence Roberts is linked to the creation of the first image recognition, or computer vision, applications. He set things in motion with his 1963 doctoral thesis, “Machine Perception of Three-Dimensional Solids.” In it, he details how 3D data about objects can be obtained from standard photos. Roberts’ first goal was to convert photos into line sketches that could then become the basis for 3D versions. His thesis presented the process of turning 2D into 3D representations and vice versa.

Roberts’ work became the groundwork for further research and innovations in 3D creation and image recognition. These later efforts built on identifying edges, noting lines, and construing objects as made up of smaller structures. Later still, they came to include contour models, scale-space, and shape identification that accounts for shading, texture, and more.
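To make the idea of edge identification concrete, here is a minimal Python sketch of the Roberts cross operator, a simple edge detector commonly attributed to Roberts’ thesis. The function name `roberts_cross` and the toy 4x4 image are assumptions made up for illustration, not code or data from the original work.

```python
def roberts_cross(image):
    """Return a gradient-magnitude map for a 2D list of grayscale values."""
    rows, cols = len(image), len(image[0])
    edges = [[0.0] * (cols - 1) for _ in range(rows - 1)]
    for r in range(rows - 1):
        for c in range(cols - 1):
            # Differences along the two diagonals of each 2x2 neighbourhood.
            gx = image[r][c] - image[r + 1][c + 1]
            gy = image[r][c + 1] - image[r + 1][c]
            edges[r][c] = (gx * gx + gy * gy) ** 0.5
    return edges


# Hypothetical toy image: dark left half, bright right half.
toy_image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

edge_map = roberts_cross(toy_image)
for row in edge_map:
    print([round(value, 2) for value in row])
```

In this toy case, the only non-zero responses sit in the middle column of the output, where the dark and bright halves meet. Flat regions on either side produce zeros.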

Another important name was that of Seymour Papert, who worked at the AI lab at MIT. In 1966, he created and ran an image recognition project called the Summer Vision Project. Papert worked with MIT students to create a platform that had to separate the foreground and background of images, as well as detect objects that did not overlap with others. They connected a camera to a computer to mimic how our brains and eyes work together to see and process visual information. The computer had to imitate this process of seeing and noting the recognized objects, and thus computer vision came to the fore. Regretfully, the project wasn’t deemed successful, but it is still recognized as the first attempt at computer-based vision within the scientific realm.

1980s and 1990s: The Moves to Hierarchical Perception and Neural Networks

The next big moment in the evolution of image recognition came in the 1980s. Over this decade and the next, the significant milestones included the idea of hierarchical processing of visual data, as well as the building blocks of what later came to be known as neural networks.

The British neuroscientist David Marr set out his research in the book “Vision: A Computational Investigation into the Human Representation and Processing of Visual Information,” published in 1982. It was founded on the idea that image recognition does not start from whole objects. Instead, he focused on corners, edges, curves, and other basic details as the starting points for deeper visual processing.

According to Marr, image processing had to work in a hierarchical manner. In his approach, simple conical forms can be combined to assemble more complex objects.

The evolution of the Hough Transform, a method for detecting lines and other simple shapes in images, was another important development around this period. The algorithm became a foundation for more advanced feature extraction methods in image recognition.
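As a rough illustration of how the Hough Transform finds a line, the Python sketch below lets every point vote for all the lines that could pass through it, using the common parameterization rho = x·cos(theta) + y·sin(theta). The function name `hough_lines`, the one-degree angle step, and the sample points are assumptions chosen for this example.

```python
import math
from collections import Counter


def hough_lines(points, n_theta=180):
    """Let each point vote for every (theta index, rho) line through it."""
    votes = Counter()
    for x, y in points:
        for t in range(n_theta):
            theta = math.pi * t / n_theta
            # Normal form of a line: rho = x*cos(theta) + y*sin(theta).
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(t, rho)] += 1
    return votes


# Hypothetical data: five points on the vertical line x = 3, plus one outlier.
points = [(3, 0), (3, 1), (3, 2), (3, 3), (3, 4), (0, 7)]

votes = hough_lines(points)
peak = max(votes.values())
print("votes for theta=0 deg, rho=3:", votes[(0, 3)])
print("highest vote count in any bin:", peak)
```

The bin for theta = 0 and rho = 3 describes the line x = 3, and it collects one vote from each of the five collinear points. The outlier votes elsewhere, so it does not disturb the peak. Neighbouring bins can tie with the peak because of the coarse rounding, which is why practical implementations usually add some form of peak selection.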

At the beginning of the 1980s, another significant step forward in the image recognition field was made by the Japanese scientist Kunihiko Fukushima. He invented the Neocognitron, often regarded as the first ‘deep’ neural network. It is considered the predecessor of the present-day convolutional networks used in machine learning-based image recognition.

The Neocognitron consisted of simple and complex cells that identified patterns irrespective of position shifts. It was made up of a number of convolutional layers, each producing outputs that served as input for the next layer.
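The Python sketch below hints at why such an architecture tolerates position shifts: a small pattern detector is slid over the whole image, loosely like the simple cells, and a pooling step then keeps only the strongest response, loosely like the complex cells. This is a heavily simplified illustration and not Fukushima’s model: it has a single hand-written kernel, no learning, and no stacked layers, and all function names are made up for the example.

```python
def convolve_valid(image, kernel):
    """Slide the kernel over the image without padding and score each position.

    As in most CNN layers, this is cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(len(image) - kh + 1):
        row = []
        for c in range(len(image[0]) - kw + 1):
            score = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            row.append(score)
        out.append(row)
    return out


def global_max_pool(feature_map):
    """Keep only the strongest response, discarding where it occurred."""
    return max(max(row) for row in feature_map)


def place_pattern(size, pattern, top, left):
    """Draw the pattern on an empty size x size canvas at the given offset."""
    canvas = [[0] * size for _ in range(size)]
    for i, pattern_row in enumerate(pattern):
        for j, value in enumerate(pattern_row):
            canvas[top + i][left + j] = value
    return canvas


corner = [[1, 1], [1, 0]]                   # hypothetical 2x2 pattern
image_a = place_pattern(5, corner, 0, 0)    # pattern in the top-left
image_b = place_pattern(5, corner, 2, 3)    # same pattern, shifted

response_a = global_max_pool(convolve_valid(image_a, corner))
response_b = global_max_pool(convolve_valid(image_b, corner))
print(response_a, response_b)
```

Both images produce the same pooled response even though the pattern sits in different places, because the pooling step throws away the position of the match.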

In the 1990s, there was a clear shift away from David Marr’s ideas about 3D objects. AI scientists focused on recognizing the features of objects. In 1999, David Lowe published the paper “Object Recognition from Local Scale-Invariant Features,” which detailed an image recognition system that relies on features invariant to scaling, translation, and rotation, and partially invariant to changes in lighting. Lowe saw a resemblance between these features and the neurons in the inferior temporal cortex.

Gradually, the idea of neural networks came to the fore. It was modeled on the structure and function of the human brain, with the aim of teaching computers to learn and spot patterns. This is how the first convolutional neural networks (CNNs) came about, equipped to capture complex features and patterns for more complicated image recognition tasks.

Also in the 1990s, the interplay between computer graphics and computer vision pushed the field forward. Innovations like image-based rendering, morphing, and panorama stitching brought about new ways to think about where image recognition could go.

2000s and 2010s: The Stage of Maturing and Mass Use

In the first years of the 21st century, the field of image recognition shifted back toward object recognition as a primary goal. The first two decades were a time of steady growth and breakthroughs that eventually led to the mass adoption of image recognition in different types of systems.

In 2006, Princeton alumna Fei-Fei Li, who later became a professor of computer science at Stanford, was conducting machine learning research and facing the challenges of overfitting and underfitting. To address them, in 2007 she founded ImageNet, a large labeled dataset that could help machines make more accurate judgments. In 2010, the dataset consisted of three million images, tagged and organized into over 5,000 categories. ImageNet served as a major milestone for object recognition as a whole.

In 2010, the first ImageNet Large Scale Visual Recognition Challenge (ILSVRC) made it possible to evaluate object identification and classification algorithms on a massive scale.

It led to another significant step in 2012: AlexNet. The scientist Alex Krizhevsky was behind this project, which employed an architecture based on convolutional neural networks. AlexNet is widely regarded as the breakthrough that brought deep learning into mainstream image recognition. It achieved a significant reduction in error rates and boosted the whole field.
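Error rates in ILSVRC-style classification are commonly reported as top-k error: a prediction counts as a miss only if the true label is absent from the model’s k highest-scoring guesses (the challenge used k = 5). Here is a minimal Python sketch of that metric. The function name `top_k_error`, the three classes, and all scores are made up for illustration and do not come from any real model or benchmark.

```python
def top_k_error(scores_per_image, true_labels, k=5):
    """Fraction of images whose true label is not among the k best guesses."""
    misses = 0
    for scores, truth in zip(scores_per_image, true_labels):
        # Rank class names by score, highest first, and keep the first k.
        top_k = sorted(scores, key=scores.get, reverse=True)[:k]
        if truth not in top_k:
            misses += 1
    return misses / len(true_labels)


# Hypothetical classifier scores for four images over three classes.
scores_per_image = [
    {"cat": 0.7, "dog": 0.2, "car": 0.1},
    {"cat": 0.1, "dog": 0.3, "car": 0.6},
    {"cat": 0.5, "dog": 0.4, "car": 0.1},
    {"cat": 0.2, "dog": 0.1, "car": 0.7},
]
true_labels = ["cat", "dog", "car", "dog"]

print("top-1 error:", top_k_error(scores_per_image, true_labels, k=1))
print("top-2 error:", top_k_error(scores_per_image, true_labels, k=2))
```

With these toy numbers, the top-1 error is 0.75 and the top-2 error is 0.5, which shows how allowing more guesses per image lowers the reported error.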

All in all, the progress with ImageNet and its subsequent initiatives was revolutionary, and the neural networks set up back then are still being used in various applications, such as the popular photo tagging on social networks.

2020s: The Power of Image Recognition Today

Our current decade is witnessing a powerful push in image recognition to maximize the potential of neural networks and deep learning. Deep learning algorithms keep evolving and reaching higher levels of accuracy, advancing the whole field with a focus on classification, segmentation, and optical flow, among others.

The industries and applications in which image recognition is being used today are innumerable. Just a few of them include content moderation on digital platforms, quality inspection and control in manufacturing, project and asset management in construction, diagnostics and other technological advancements in healthcare, automation in areas like security and administration, and many more.

Learn How Image Recognition Can Boost Your Business

At Imagga, we are committed to the most forward-looking methods in developing image recognition technologies — and especially tailor-made solutions such as custom categorization — for businesses in a wide array of fields.

Do you need image tagging, facial recognition, or a custom-trained model for image classification? Get in touch to see how our solutions can power up your business.