Imagga’s Adult Video Content Detection Model: Smarter Moderation, Safer Platforms

Video is everywhere today, from social feeds and online communities to shopping sites and e-learning platforms. It’s engaging, relatable, and immediate. But this very immediacy makes video moderation critical. Inappropriate or explicit content can spread fast, harming users, brands, and trust. That’s why Imagga’s Adult Video Content Detection Model is designed to tackle this challenge head-on. Our AI-powered system brings accuracy and scalability to video moderation, helping platforms stay safe, compliant, and user-friendly.

Why Video Adult Content Detection Is Hard

Adult video content detection is challenging because of the sheer volume, complexity, and speed at which videos are shared. Traditional moderation methods can’t keep up with thousands of uploads each day, nor with the expectation for real-time review to stop explicit content before it spreads. While human moderators still play a role, it’s nearly impossible for them to consistently catch subtle or borderline NSFW material, and they may struggle with cultural nuances and context. AI-powered moderation addresses these issues by processing large volumes quickly, distinguishing between explicit, suggestive, and safe content with clear thresholds, and allowing for customization to match different cultural standards. Most importantly, advanced models help reduce mistakes, minimizing false positives and missed explicit clips, protecting both user trust and platform reputation.

How Imagga's Advanced Model Enables Effective Video Moderation

To overcome these challenges, new technology offers impressive solutions that can handle the volume, speed, real-time demand, and complexity of video distribution today.

Using cutting-edge AI technology based on image recognition and machine learning algorithms, Imagga's Adult Content Detection Model provides highly accurate video analysis. It performs reliable and scalable adult detection in real-time — outperforming other adult content detection models by up to 26%. On top of that, we can customize the model to the particular needs of a specific business.

The power of Imagga’s video adult content detection model lies in its precise classification, context-rich tagging, and frame-by-frame analysis.

Best-in-Class Precision and Recall

With precision now at 0.96 and recall at 0.98, our upgraded model detects explicit and suggestive content more accurately than ever. This means fewer false positives, fewer misses, and more trust in your automated video moderation — even as your content volume grows.
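To combine the two figures into a single number, the F1 score (the harmonic mean of precision and recall) can be computed from the values quoted above. This is a small illustrative calculation: the function name `f1_score` is ours, and the result is derived from the quoted figures rather than being a metric published by Imagga.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Figures quoted in the text: precision 0.96, recall 0.98.
f1 = f1_score(0.96, 0.98)
print(round(f1, 3))  # 0.97
```

Because the harmonic mean is pulled toward the lower of the two values, a high F1 is only possible when both precision and recall are high.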

Smarter, Faster Video Analysis

Our optimized scene analysis method significantly speeds up processing by reducing the number of frames analyzed, without compromising accuracy. This makes the analysis more cost-efficient, while still detecting even subtle or hidden adult content lasting as few as 1–3 frames. Built on a high-quality dataset and a custom AI pipeline, our model delivers efficient, reliable moderation at scale.

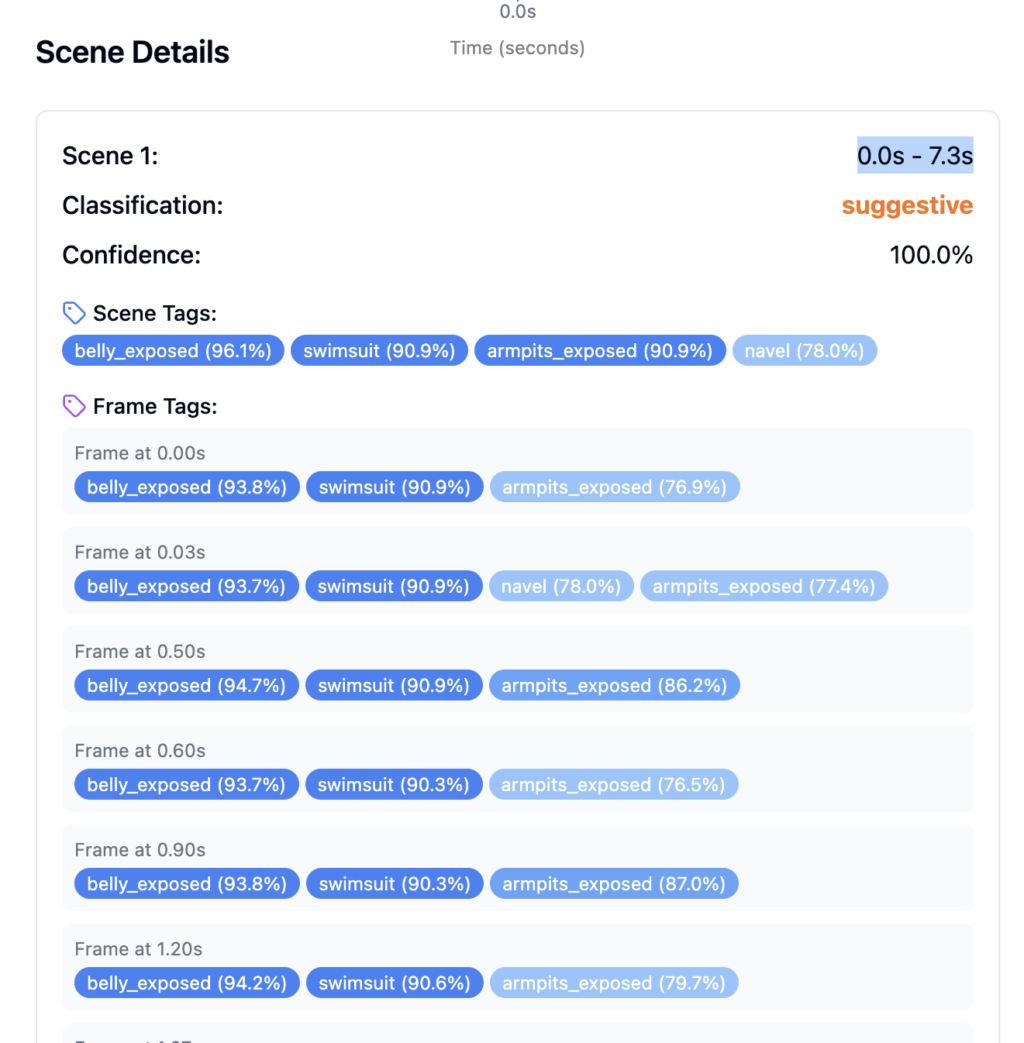

Precise Scene-Level Classification

The model breaks videos down into clearly defined scenes. Each scene is individually analyzed and classified as explicit, suggestive, or safe, accompanied by exact timestamps. This allows moderation teams to pinpoint problematic sections immediately, dramatically improving workflow efficiency.
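As a rough sketch of how a moderation tool might consume scene-level results like these, the snippet below merges adjacent flagged scenes into time ranges a reviewer can jump to. The `Scene` structure, its field names, and the sample data are assumptions made for illustration; they are not Imagga's actual response format.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    start_s: float  # scene start, seconds from the beginning of the video
    end_s: float    # scene end, seconds
    label: str      # "explicit", "suggestive", or "safe"


def flagged_ranges(scenes, labels=("explicit", "suggestive")):
    """Return (start, end) ranges to review, merging adjacent flagged scenes."""
    ranges = []
    for scene in sorted(scenes, key=lambda s: s.start_s):
        if scene.label not in labels:
            continue
        if ranges and scene.start_s <= ranges[-1][1]:
            # Touches or overlaps the previous flagged range: extend it.
            ranges[-1] = (ranges[-1][0], max(ranges[-1][1], scene.end_s))
        else:
            ranges.append((scene.start_s, scene.end_s))
    return ranges


# Hypothetical scene list for a 60-second video.
scenes = [
    Scene(0.0, 12.5, "safe"),
    Scene(12.5, 20.0, "suggestive"),
    Scene(20.0, 31.0, "explicit"),
    Scene(31.0, 60.0, "safe"),
]
print(flagged_ranges(scenes))  # [(12.5, 31.0)]
```

Passing `labels=("explicit",)` would restrict the review ranges to explicit scenes only.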

Context-Rich Tagging for Deeper Insights

Beyond scene classification, Imagga provides contextual tags that offer deep insight into why content is flagged as suggestive or explicit. This is achieved by using additional AI models on top of Imagga’s world-class classification system. The tags highlight specific visual elements commonly associated with adult or borderline content, such as "swimsuit," "belly exposed," or "armpits exposed," each accompanied by a confidence score indicating the likelihood of its presence. These granular, context-rich tags give moderation teams the clarity they need to make faster, more consistent, and more accurate decisions.
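A minimal sketch of how such tags could be filtered before they are shown to a moderator is given below. The tag names come from the text, but the scores, the 0-to-1 confidence scale, and the `confident_tags` helper are assumptions made for illustration.

```python
def confident_tags(tags, min_confidence=0.5):
    """Keep tags at or above the threshold, highest confidence first."""
    kept = [(name, score) for name, score in tags.items() if score >= min_confidence]
    return sorted(kept, key=lambda item: item[1], reverse=True)


# Made-up scores for illustration only.
tags = {"swimsuit": 0.91, "belly exposed": 0.74, "armpits exposed": 0.32}
print(confident_tags(tags, min_confidence=0.7))
# [('swimsuit', 0.91), ('belly exposed', 0.74)]
```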

Practical Applications

Adult content detection is essential for a wide range of digital platforms that require a high level of safety and compliance measures.

Most types of user-generated content platforms fall in this category, and in particular, social media platforms. The spread of explicit video materials can easily go out of control. Video moderation allows social media channels to uphold their community guidelines and adhere to the latest content moderation regulations.

E-commerce marketplaces also need immediate explicit content detection to protect their reputation and keep their users engaged. Inappropriate product review videos or user demos have to be quickly identified and removed to maintain a user-friendly and safe shopping environment. The same goes for fake listings and profiles.

Online education platforms need a particularly high level of protection from adult content. They have to protect students and to uphold their reputation as a reliable education provider. Explicit video material has to be moderated in real-time to prevent any exposure, especially to underage students.

Dating websites and apps often struggle with adult content detection, as do gaming platforms. In both cases, quick detection and removal of explicit videos is essential for user safety and platform trust. You can see the benefits of Imagga’s Adult Content Detection Model in practice in the case study of how we transformed content moderation for World Singles, a leading dating platform.

The Benefits of Effective Adult Content Detection in Video

Integrating effective video adult content detection into the moderation workflow of your digital platform presents tangible benefits on several levels.

AI-powered content detection brings immense benefits in terms of cost and time efficiency. Manual moderation cannot keep up with the sheer volume of content that requires screening in real time. But automated detection can handle these challenges, saving both time and money.

The precision of AI-based content detection keeps improving while remaining stable, which supports the accuracy and reliability of the video moderation process. Imagga’s model reaches a whopping 98% recall for explicit content detection. This minimizes the chance of mistakes such as false positives and false negatives.

Automated detection delivers unparalleled scalability and flexibility. Besides handling large amounts of content in real-time, it can adjust to content peaks as needed.

Content moderation regulations continue to change and adapt to the realities of our digital world. Effective adult content detection ensures seamless fulfillment of legal requirements, so that digital platforms can stay safe and compliant.

Last but not least, reliable and consistent video moderation is crucial for user safety and trust. It’s also key in delivering a secure and pleasant user experience. These factors are central to raising platform engagement, brand loyalty, and overall user satisfaction. For educational platforms, shopping platforms and many others, adult content detection is of paramount importance for protecting younger and vulnerable audiences.

Explore the Benefits of Effective Video Adult Content Detection

Imagga’s Automated Adult Content Detection provides a state-of-the-art moderation solution for digital platforms across different industries. Our model helps businesses ensure comprehensive user and community protection, brand reputation safeguarding, and legal compliance.

Ready to test the benefits of our model for your platform? Get in touch with our team.

How Smart Video Moderation Boosts Platform Trust

A 2025 global survey conducted by the University of Oxford and the Technical University of Munich (TUM) shows that the majority of people worldwide support moderation of content on social media, especially when it comes to violence, threats and defamation. Automated text, image and video moderation is a powerful method for protecting users from harmful and illegal content. Its safety advantages are visible and numerous, but its business benefits are still to be fully explored.

In fact, smart video moderation can bring much more for digital businesses in terms of nurturing user trust and, down the line, increasing revenue.

Rather than being simply a necessary safety net, content moderation has immense potential for boosting trust in video platforms — and with misinformation and deepfakes on the rise, trust is precious in our digital age. Effective video moderation doesn’t just keep bad content away — it helps create more engaging and trusted online environments.

In the sections below, we explore the importance and challenges of building trust on digital platforms, examples of how video moderation nurtures trust, and practical proof of how smart video moderation boosts platform trust.

Why Trust Is the New Growth Engine for Digital Platforms

Trust has immense importance for the success of any digital platform. It has a major impact on people’s perceptions and their behavior, which makes it a significant factor in the overall approval of a platform.

In particular, trust is critical for keeping users on a platform, whether it’s a social media network, an e-commerce website, or a dating platform. People are much more likely to stick with a platform if they trust it, which makes trust the cornerstone of user retention. It means they feel safe using it, and that’s the basis for continued engagement.

Trust is a crucial factor for advertiser confidence too. Brands are more likely to advertise on platforms that offer a safe and user-friendly digital environment. Investing in building trust is thus related to the ability to attract high-quality advertisers and increase ad revenues.

Last but not least, trust is essential for creating a sense of credibility. When people feel that a platform is a trustworthy source of information and a safe space for entertainment, they view it as credible. This perception can be created only through consistent and adequate content moderation and maintenance of quality and safety standards. By building credibility, platforms can uphold and enhance their reputation — and attract and retain users.

Challenges in Building and Keeping Video Platform Trust

Trust is of central importance for video platforms because users are particularly sensitive to harmful content in videos. Visual content is more immediate, so people quickly notice if something has been missed in the moderation process.

Inappropriate content, misinformation and spam harm the quality of the user experience and the reputation of the digital brand. They have significant negative effects on the overall trust in a platform.

Inappropriate content is a leading cause of damaged user trust and of people quitting a platform. This content can be anything from explicit material and violence to hate speech, defamation, and more. It negatively affects the user experience and, down the road, leads to brand reputation damage and dropout.

Another major challenge to platform trust is misinformation, which travels at the speed of light these days. False or misleading information can start affecting people’s opinions and actions quite quickly. This can soon discredit a platform, especially if the cases repeat.

Spam is yet another obstacle to building and keeping people’s trust. Unwanted ads and repeating messages lead to clutter and worsen the user experience. Their continuous presence inevitably leads to user disengagement and dropout.

3 Examples of How Smart Video Moderation Builds Trust

Boosting trust in a video platform is a long and rigorous process, but automated video moderation offers powerful tools for achieving it. With its help, video platforms can improve user safety and content appropriateness, increase user satisfaction, and nurture a positive digital environment.

Faster Response Times

Slow removal of inappropriate or harmful content can result in viral harm. That’s why delayed moderation is not an option in the process of creating trust.

The key to success is acting before the damage is done, which requires real-time or near-real-time flagging of bad content. AI-powered moderation platforms like Imagga are able to offer the speed and accuracy required to prevent the spread of unwanted content.

Transparent Feedback for Contributors

Having your content removed without good reasoning can be frustrating and can lead to disengagement. Content creators have a higher sense of trust in digital platforms when they are informed and understand why their content has been flagged or removed.

The same goes for transparent and fair appeal processes in cases when creators feel the content removal has been inadequate or unfair.

Content Appropriateness for All

People trust platforms when they consistently deliver a safe, fair and positive user experience by filtering out all inappropriate content. AI-powered video moderation guarantees that any visual content that reaches users has been screened and meets the community guidelines, as well as Trust and Safety programs and regulations.

Effective moderation is of utmost importance for vulnerable user groups, such as children, who need to be protected from harmful experiences at all times. This directly contributes to the inclusivity and fairness of a platform.

The Shift in Trust-Building Moderation: From Blocking to Curating

Blocking harmful content is the basic function of content moderation. But its role is ever evolving, and it now also spans active curation of positive and engaging digital environments. This shift reflects a bigger trend in digital platform management, which highlights the importance of building a positive user experience.

The traditional view on moderation is that it is focused on finding and removing harmful or inappropriate content. This is a reactive role whose goal is only protective.

The new emerging view on moderation is more advanced: it not only serves as a safety mechanism, but helps shape relevant and pleasant user experiences.

In this paradigm shift, content moderation has a proactive role of promoting engaging and relevant content. This is clearly seen in early livestream flagging, for example, as the issue is handled in real-time. It has a double positive effect: users are not exposed to inappropriate content, and digital creators are encouraged to stick to platform standards on the spot.

What Is Video Moderation?

Video moderation is the use of technology or human review to identify and manage content in videos that may be harmful, explicit, or violate platform rules. It helps ensure that videos shared on websites, apps, and social platforms are safe, appropriate, and aligned with community standards. This process can include detecting nudity, violence, hate symbols, or misleading information. AI models are often used to scan video files — scene by scene or frame by frame — making it possible to moderate large amounts of content quickly and consistently.

So what does smart moderation in action look like?

Offers Scalability

Smart video moderation tools are able to efficiently process large volumes of visual content — both videos and live streams. This means that platforms can rely on adequate and consistent moderation even when there is increased user activity or special events. This predictability is essential for building trust.

Boasts Context Awareness

Advanced AI and machine learning technology provides moderation tools with the ability to grasp not only the facts about content, but also its context. Going beyond simple keyword flagging, moderation platforms keep learning how to differentiate nuances of cultural content, intent, and language. Context-aware moderation can thus tell the difference between harmful content and a legitimate discussion about the topic, for example.

Provides Configurable and Flexible Solutions

Moderation tools offer different options for configuration, so that platforms can modify the parameters to match their community guidelines. In particular, they can set thresholds, rules, and paths for action that are in line with the particularities of the platform and its users.
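To make the idea of configurable rules concrete, here is a minimal sketch of a per-platform policy table that maps each scene label to an action. The platform names, action names, and the `action_for` helper are hypothetical and only illustrate the pattern; they do not describe a specific product's configuration format.

```python
# Hypothetical per-platform policies: which action each scene label triggers.
POLICIES = {
    "e_learning": {"explicit": "block", "suggestive": "block", "safe": "allow"},
    "dating_app": {"explicit": "block", "suggestive": "review", "safe": "allow"},
}


def action_for(platform: str, label: str) -> str:
    """Look up the configured action; unknown labels go to human review."""
    return POLICIES[platform].get(label, "review")


print(action_for("e_learning", "suggestive"))  # block
print(action_for("dating_app", "suggestive"))  # review
```

Keeping the policy in data rather than in code means a platform can tighten or relax its rules without touching the moderation pipeline itself.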

Discover Trust-Building Moderation for Your Platform

At Imagga, we have developed and perfected our Adult Content Detection model to help digital businesses from different industries in handling adult visual content on their platforms.

Ready to explore trust-building moderation for your platform? Get in touch with our experts.

Image Moderation - Meaning, Benefits and Applications

Image moderation has become indispensable for keeping our digital environments safe and user-friendly. With the growing amount of user-generated content uploaded on digital platforms, the process of screening and filtering inappropriate and harmful visual content is crucial. Image moderation refers to the process of reviewing and managing visual content to ensure a positive user experience, safeguard brand reputation, and enhance online presence. In the sections below, we dive into the basics of image moderation: methods, technology, benefits, and applications.

What Is Image Moderation?

The image moderation process encompasses filtering, analyzing, and managing visual content on different types of online platforms, from social media and e-commerce websites to learning platforms and corporate systems.

Its goal is to find and handle images that are inappropriate in the context of the specific platform, including explicit, offensive, or harmful ones.

The main purpose of image moderation is to detect, flag and remove inappropriate visuals. Nowadays, the process is handled to a large extent with the help of Artificial Intelligence and machine learning algorithms trained to identify certain types of images. Manual review is necessary only in certain cases.

Automated image moderation allows companies to set moderation criteria and filters that match their community standards. Immediate removal of harmful content is possible with real-time monitoring.

Protecting user safety is paramount for the functioning of any digital platform today — and image moderation is the key to achieving it.

Along with that, companies also have to preserve their brand reputation by not allowing inappropriate content to circulate freely. This is also often necessary in order to comply with international and national legal regulations aimed at safeguarding digital users.

How Does Image Moderation Work?

Image moderation techniques follow a structured workflow that starts at the moment of upload of an image. Once the image is up, it is screened against a list of predefined guidelines formulated to safeguard the platform’s users and its overall integrity.

If there is inappropriate or harmful content, the image can be flagged, blurred or directly removed.

Methods Used

Image content moderation can be conducted in a number of ways, which bring different levels of accuracy and scalability.

Manual Moderation

Before AI started being used to moderate images, manual moderation was the main method. It entails human moderators reviewing each and every uploaded image. Today human moderation is necessary only in special cases.

Automated Moderation

With the rapid development of AI-driven tools, most image moderation is done with the help of algorithms. They allow for unprecedented scalability and speed, as well as real-time monitoring.

Hybrid Approaches

Combining AI with human judgment for accuracy is the most common method today. Automated tools filter through the massive amount of uploaded content. They remove harmful content and flag images that need a more nuanced approach for human moderation, where reviewers can also catch false positives and similar errors.
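One common way to implement a hybrid workflow is to act automatically only when the model is confident and send everything in between to a moderator. The sketch below assumes a single model score between 0 and 1; the `triage` function and both threshold values are illustrative assumptions, not recommended settings.

```python
def triage(explicit_score: float, remove_at: float = 0.9, allow_below: float = 0.2) -> str:
    """Route an image by model confidence (assumed to be in the range 0 to 1)."""
    if explicit_score >= remove_at:
        return "remove"        # model is confident: act automatically
    if explicit_score < allow_below:
        return "allow"         # model is confident the image is fine
    return "human_review"      # uncertain band: send to a moderator


for score in (0.97, 0.55, 0.05):
    print(score, triage(score))
```

Widening the band between the two thresholds sends more images to human review and leaves fewer decisions fully automated, so the thresholds are the main lever for balancing accuracy against moderator workload.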

Key Technologies

Automated image moderation is empowered by AI advancements like computer vision and machine learning. With their help, moderation systems can learn to ‘see’ images and recognize patterns and anomalies that point to potentially problematic content.

Machine learning algorithms get better with time, improving their accuracy and speed on the basis of the processed visual data.

Image moderation techniques also include image similarity detection (removing identical images), as well as optical character recognition that turns text from images into data that can be edited.
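As an illustration of similarity detection, the sketch below uses average hashing, a simple and widely known perceptual hashing technique, to compare tiny grayscale images. It is a generic example chosen for clarity, not a description of the method any particular moderation product uses, and the sample pixel grids are made up.

```python
def average_hash(pixels):
    """Bit list: 1 where a pixel is brighter than the image mean, else 0."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Number of positions where the two hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


# Tiny 4x4 grayscale "images" (0-255). Real pipelines downscale first, e.g. to 8x8.
original = [
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
# Same picture, slightly brightened (as might happen after re-encoding).
brightened = [[min(255, v + 15) for v in row] for row in original]
# A different picture.
other = [[10, 200, 10, 200] for _ in range(4)]

print(hamming_distance(average_hash(original), average_hash(brightened)))  # 0
print(hamming_distance(average_hash(original), average_hash(other)))       # 8
```

A small Hamming distance suggests the two images are near-duplicates, so a previously removed image can be caught again even after minor edits.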

What Are the Benefits of Image Moderation?

Effective image moderation brings a number of benefits beyond the mere filtering of visual content.

The positive impact is substantial for both people using online platforms and for the companies developing and maintaining them.

Enhanced User Safety

First and foremost, image moderation ensures the safety of users on online platforms. It fosters safe digital environments where people, and especially vulnerable members, are shielded from harmful or offensive content.

Brand Reputation Protection

Adhering to high ethical standards in terms of content moderation is a must for reputable brands today. When users know that a platform is safe, they are more likely to engage on it and trust the brand behind it. Safeguarding the reputation is directly linked to attracting new users and improving brand loyalty.

Compliance with Regulations

There are numerous legal regulations that online platforms have to comply with, at both the national and international levels. By doing this, they guarantee the safety of users, while also minimizing the risk of legal penalties.

Improved User Experience

When image moderation is done right, online communities can thrive — as platforms harbor only relevant and high quality content. Improved experience leads to loyal users and a solid brand image.

Applications of Image Moderation

The use of image moderation services in the current digital landscape is ubiquitous — and essential to guaranteeing safety and quality. Here are some of its most popular applications.

Social Media Platforms

Moderation of visual content is key for social media platforms. They have to filter out massive amounts of unsafe and explicit content, so that they can provide a normal experience for their users.

E-Commerce Sites

With the help of image moderation, e-commerce platforms can maintain product integrity and remove misleading, fake and inappropriate images. This is essential for building customer trust and satisfaction.

Dating Apps

Dating platform users are especially vulnerable to inappropriate visual content. That’s why photo moderation is a crucial tool for creating a safe and pleasant space for meaningful exchanges. Read how World Singles transformed content moderation for their global platform using Imagga technology.

Online Communities

Different types of digital communities where users post pictures use moderation to filter out harmful and offensive images. This helps them stick to their community guidelines and foster a sound digital environment.

Advertising Platforms

Photo moderation comes in handy for advertising platforms when it comes to ensuring ads meet preset standards. Misleading or offensive visuals can be removed before going online — protecting reputation and raising the overall content quality.

Best Practices for Effective Image Moderation

Effective image moderation starts with a solid strategy that is based on the latest technological advancements and regulatory requirements.

Use a Hybrid Approach

Combining AI moderation with human oversight is currently the most effective and balanced image moderation technique. Moderators need to review only complicated cases where a nuanced and culturally sensitive approach is necessary.

Regularly Update AI Models

Continuous updates of AI models are essential for ensuring that your automated moderation system keeps up with the latest trends in inappropriate content.

Define Clear Moderation Policies

Clear guidelines for identifying and filtering images that may be harmful, irrelevant, or explicit are needed for both human and AI moderation.

Ensure Transparency

Platforms that are open about their moderation policies earn the trust of their users.

The Role of AI in Modern Image Moderation Solutions

As a trailblazer in the AI-powered image recognition field, Imagga has been exploring the newest technology and developing cutting-edge solutions for image moderation for a dozen years now.

Our robust image moderation platform is fueled by tools like facial recognition and adult content detection, among others. With their help, businesses can create safe digital spaces, comply with regulations, and uphold their brand image in the tumultuous tides of digital innovations.

Ready to explore the capabilities of our AI image moderation tools that can assist your business? Get in touch with our experts.

Conclusion

The role of image moderation in creating safe and engaging digital environments cannot be overstated. The moderation process ensures the removal of harmful visual content, thus providing for protection of users, compliance with regulatory requirements, and upholding of community standards.

Investing in effective image moderation is a strategic step to creating safe, robust and attractive platforms that help build up a brand's name — and ultimately, serve their users well.

FAQ

Social media, e-commerce, digital forums and communities, and user content platforms benefit most from image moderation, as it helps them handle the huge amounts of newly uploaded content.

Manual image moderation can be harmful to human moderators, is extremely time-consuming and difficult to scale, and is subject to human error.

Automated image moderation tools are powered by AI developments like computer vision and ML algorithms that are able to identify, flag and remove inappropriate and harmful content.

AI can’t replace human moderators fully. The hybrid approach that relies on both automated and human moderation has proven to be the most effective and fair moderation technique.

Virtual Try-On in Fashion: Transforming the Shopping Experience

Technology is stepping in to revolutionize most aspects of our lives, and the fashion industry is no stranger to this trend. Virtual try-on technology is revamping the way consumers choose clothes, accessories and makeup — and the whole shopping experience is being transformed.

The transformation of fashion e-commerce is a particularly important one, keeping in mind it’s one of the world’s biggest market segments. By 2029, the industry is expected to reach a market volume of $1.183 trillion, according to Statista.

The stunning growth is fueled by consumers’ massive use of mobile devices for shopping, as well as technological advancements — and virtual try-on technology is certainly among the leaders. As people become more and more tech-savvy, trying on clothes in a virtual dressing room and testing makeup on digital images is not an extra perk, but the expected norm.

Trying clothes virtually is enabled by the integration of Artificial Intelligence (AI), Augmented Reality (AR), and 3D scanning and modeling. Let’s dive into the details of how technology and fashion meet, the applications and benefits of virtual try-on technology, and the future ahead.

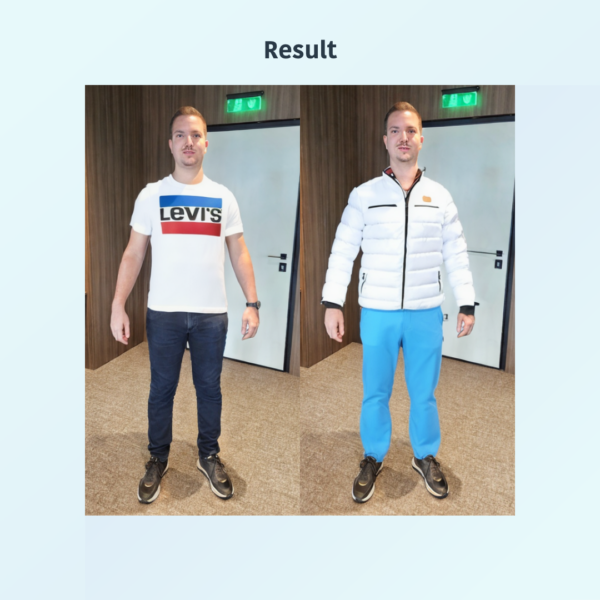

What is Virtual Try-On in Fashion?

Virtual try-on technology allows customers to interact with clothing and beauty products in a completely new way — changing the shopping experience and improving a number of aspects related to online purchases. People can try how clothes, accessories, makeup and other items suit them through a simulation, which is usually a virtual fitting room. The technology scans the face or body of the customer and visualizes how the product would look on them.

This preview helps people get a clear idea of how an item enhances their appearance. This way, they can make more informed purchasing decisions with a greater level of confidence.

Virtual try-on contributes to decreasing returns, improving overall customer satisfaction, and boosting product discovery -- which helps increase sales and conversion rates. It contributes to the creation of personalized and highly immersive shopping experiences. Besides fashion, it has similarly useful applications for beauty, makeup, hairstyle and nail polish businesses, among others.

How It Works: The Technology Behind Virtual Try-On

Virtual try-on technology is fueled by a powerful blend of AR, AI, 3D scanning and mapping, and seamless e-commerce integration. The mix of these technologies allows for a realistic and immersive e-commerce experience for customers.

Augmented Reality (AR)

Augmented reality has a central role in digital try-on. It allows the overlaying of items, such as virtual clothes, onto actual customer photos. Using the camera of the customer’s mobile device, the AR technology maps their body or face in real time. Then it creates a digital representation which can be analyzed and modified.

Artificial Intelligence (AI)

AI algorithms, and in particular computer vision and image recognition, are key for a couple of central virtual try-on functionalities. They enable size prediction for the customer, fit analysis of each item, and personalized recommendations for products. With the help of advanced image recognition algorithms, the try-on can thus suggest the best fit and style for a particular client.

3D Scanning and Modeling

Creating realistic representations of how an item fits a customer is powered by 3D scanning and modeling. The technology analyzes the measurements and shapes, allowing the generation of an avatar that is as close to the real person as possible. This makes the clothing preview accurate and reliable.

Integration with E-Commerce

These three technologies come together in e-commerce platforms with the help of APIs and specialized software. This allows the smooth integration of try-on technology straight into e-commerce websites and apps, enabling virtual fitting rooms and similar simulations.

Benefits of Virtual Try-On for Consumers and Retailers

Virtual try-on can be used in a variety of sectors, including e-commerce platforms for clothes, accessories, beauty products, and makeup, as well as in-store experiences for traditional stores. It has applications in luxury fashion — and even in sustainability initiatives.

Virtual try-on technology brings a number of benefits and addresses key challenges in the retail industry. In particular, it improves customer experience, decreases operational inefficiencies, and drives a higher level of sustainability.

Enhanced Customer Experience & Lower Return Rates

Virtual try-on provides realistic previews of products that are interactive and immersive. It helps customers make informed purchasing decisions — decreasing returns and improving customer satisfaction with online shopping, as well as boosting convenience.

In-store Experience

Digital try-on technology has a role in physical retail spaces too. It offers virtual dressing rooms and personalized recommendations that help engage and retain customers, who can discover new items through complementary apps and platforms.

Challenges and Considerations

To maximize the potential of try-on in the retail industry, it’s important to know the potential challenges and considerations for its successful implementation.

Technical Limitations

There is a constant need for technological updating to manage issues with rendering accuracy, as well as compatibility across different devices, that may otherwise affect user experience.

Consumer Adoption

The learning curve for using try-on solutions can be significant for some customers, which may lead to resistance. Easily accessible educational materials, as well as seamless UX/UI design, are thus key.

Privacy Concerns

Consumers are more and more concerned about their data security and privacy. Powerful privacy measures in handling sensitive data and images are thus essential to ensuring user security.

How Image Recognition Powers Virtual Try-On Experiences

One of the most important technologies behind virtual try-on is image recognition — and we at Imagga have extensive experience and in-depth expertise in precisely that.

Our cutting-edge image recognition platform offers visual search and facial recognition, among other capabilities. Visual search enhances user experience, improves product discovery, and fuels personalization. We offer custom model training, which allows us to tailor AI models to your particular business and its needs.

Want to learn more about how our image recognition solution powers virtual try-on? Get in touch with our experts.

FAQ

Virtual try-on tools vary in their accuracy, but are often quite realistic, especially with recent advancements in AI, AR and 3D scanning.

Virtual try-on technology can create realistic visual representations of a variety of clothing, accessories, makeup and beauty items. Some items might be more challenging due to their complexity or material.

Using try-on solutions by proven brands usually means data safety and privacy are a must. It’s a good idea to still check the policies of each tool before using it.

Virtual try-on improves the customer experience of online shopping, making it more seamless, interactive and personalized. For clothing brands, it helps reduce return rates, improves inventory management, and increases overall sales.

From E-Learning to Shopping: The Overlooked Need for Adult Content Detection

Content moderation has become a necessity in our online lives, providing protection from illegal, harmful and explicit content. Adult content detection is an important part of content moderation, identifying and removing pornographic, erotic, and other types of explicit content from platforms where it shouldn’t be.

Adult content detection has become customary for social media and dating apps. Since their use is so widespread, unwanted explicit content is often published there — so in fact, we expect content filters to be there.

But did you know that intelligent adult content detection, and in particular Not Safe for Work (NSFW) filtering, is important for a whole bunch of other industries and platforms?

Educational platforms, shopping websites, work platforms, streaming, and fitness apps are just some examples of digital spaces where unwanted explicit content gets published. It can be offensive, distracting, or outright harmful.

Ensuring NSFW moderation for this wide array of platforms is thus essential for creating safe online environments.

Let’s dive into the basic definition of adult content detection and NSFW filtering, its common uses, and, most of all, its still unexpected applications that turn out to be quite important.

The Basics About Adult Content Detection and NSFW

The terms adult content detection and Not Safe for Work (NSFW) filtering are close in meaning, with the first being broader. In this article, we’re using them interchangeably to denote the identification and/or removal of content that people wouldn’t want to be seen viewing in a public or professional setting, usually because it is inappropriate or offensive and causes harm or discomfort.

More often than not, this means explicit content, be it pornography, nudity, or the like. Adult content may be in the form of images, video, or text.

The Purposes of Adult Content Detection

With the growth of digital platforms of various types, the necessity of adult content moderation has increased dramatically as well. Websites, online communities, social media — all these spaces have to provide a safe and predictable environment to their users, and are often legally required to do so. That’s why the use of adult content filters stems from both ethical and legal considerations.

The purpose of adult content detection is thus manifold. First and foremost, it protects people, and in particular young audiences, from explicit and inappropriate content. In the workplace context, NSFW filtering guarantees professional digital spaces that don’t contain offensive or distracting content. This type of moderation is also necessary for safeguarding the image of brands and digital businesses, ensuring they are trustworthy places for users of all ages. Last but not least, NSFW filtering helps online platforms conform with national and international legal requirements in terms of content moderation and protection of minors.

AI-Powered Adult Content Detection

In practical terms, adult content moderation entails the categorization of online content as either containing or not containing explicit visuals. The filters can be set in different ways, depending on the particular needs of the digital platform, including the thresholds and the types of explicit content that have to be filtered out. Some platforms offer an NSFW option that users can select when uploading their content, which helps categorize it and restrict access to it as necessary.

With the advancement of AI tools, adult content filtering can now be fully automated. This means that human moderators no longer have to be exposed to such content in order to remove it manually. Instead, machine-learning algorithms trained on large volumes of data identify patterns and can thus remove explicit content automatically.

Image recognition powered by AI is at the heart of the process, allowing the accurate recognition of NSFW content within images, videos and live streams. Once the moderation system identifies an item as explicit content, it can remove or blur it or, in some cases, forward it for further review. Adult content filtering provides dynamic moderation that can work in real time. This means it can block explicit content before it becomes visible, which is especially important for live streaming.
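
As a rough illustration of the thresholds and follow-up actions described above, the sketch below maps a model's confidence score to a moderation action. The function name, the threshold values, and the action labels are assumptions made for this example; they are not Imagga's API or its default settings.

```python
def decide_action(nsfw_score, remove_at=0.9, review_at=0.6):
    """Map an NSFW confidence score in [0, 1] to a moderation action.

    The two thresholds are hypothetical; each platform would tune its own.
    """
    if not 0.0 <= nsfw_score <= 1.0:
        raise ValueError("nsfw_score must be between 0 and 1")
    if nsfw_score >= remove_at:
        return "remove"  # confident detection: block before it becomes visible
    if nsfw_score >= review_at:
        return "review"  # borderline: blur and forward to a human moderator
    return "allow"       # treated as safe


for score in (0.97, 0.72, 0.10):
    print(score, decide_action(score))
```

A stricter platform, for example one aimed at minors, could simply lower both thresholds so that more content is held back for review.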

The Benefits of Using Adult Content Detection

Striking the right balance between moderation and censorship is not easy, but when it comes to adult content, the benefits are numerous.

With NSFW filters in place, people can browse websites and use platforms without the risk of stumbling upon explicit content that can be disturbing or distracting. This improves user experience for digital businesses.

Platforms and websites that target a wide array of demographic groups benefit from adult content filters immensely. By having this moderation in place, they can safely offer content to students, professionals, minors, and families.

In terms of brand image, having adult content moderation is a sign of trustworthiness. It demonstrates that the business puts user protection first, aiming to offer a safe digital space for its customers.

Common (and Unsurprising) Uses of Adult Content Detection

Many online platforms have been struggling with adult content in the last few years. By now, we’re used to thinking that our social media channels have to be moderated, so that we don’t get exposed to different types of harmful and explicit content, and especially adult content. All big platforms like Facebook, Instagram, and TikTok are employing such filtering.

The same goes for dating apps, which are a common place where some users might want to share explicit images. However, it’s especially important to make dating platforms a safe space for people looking for their soulmates, and that’s where adult content filters help out.

Another common use of NSFW filters is on websites and platforms targeted at minors. Their protection in the digital space is paramount, so this kind of content moderation is essential.

Other fields in which adult content moderation is already being used include stock photography and videography platforms, among others.

Unexpected Platforms That Need Adult Content Detection

And now for something different — which fields and platforms benefit from adult content moderation, even though we might not expect it to be necessary?

Educational Websites and Platforms

While it’s not so obvious, e-learning platforms struggle with NSFW content. Since educational websites and platforms often have to allow upload of user materials, or support user forums and discussions, the risk of explicit content going online is present. At the same time, the platforms have to ensure all content adheres to their standards and rules and to provide a safe learning environment, so that they are trusted by students and educators alike.

What’s more, many educational platforms are targeted at minors. The protection of younger audiences is of utmost importance and requires extra care, which makes adult content moderation essential for e-learning.

Shopping Websites

Online marketplaces with user generated content benefit greatly from NSFW filtering. When users upload their product photos or reviews, they may include adult content. The platform thus has to take care to remove any explicit content in order to ensure a safe browsing experience for customers of all ages.

Professional Networking and Work Platforms

NSFW means, after all, Not Safe for Work. Adult content doesn’t have a place on professional platforms, whether those intended for networking and socializing like LinkedIn, or workplace communication and project management tools like Slack or Asana.

Health and Fitness Apps and Communities

Health, wellness and fitness online communities often share personal photos and other user generated content. As with all such content, there is always a risk of explicit content sharing, either unintentionally or on purpose. For example, the famous “before” and “after” photos may be too revealing, or in some cases, perceived as offensive by other community members.

VR and Online Gaming Platforms

Since VR and gaming platforms have many options for user generated content, they need adult content filtering like the rest — given their attractiveness for younger audiences.

In the case of VR platforms, adult content may be shared in virtual rooms or hangouts — and given the immersive nature of VR, the effect might be quite intense. As for online gaming, inappropriate content can be posted in the form of user avatars, mods, and custom skins, as well as in the game chats.

Streaming Platforms

Video and live streaming platforms are especially vulnerable to explicit content. It’s not easy to monitor live streams where users may easily share adult content. The same goes for video streaming platforms like YouTube where problematic content can be published as videos or as user interactions. Streaming platforms often have younger audiences, which means they have to pay special attention to adult filtering to provide safe and appropriate content to minors.

Explore the Powers of Imagga’s Adult Image Content Moderation

At Imagga, we have developed and perfected our Adult Content Detection model to help digital businesses across industries handle adult visual content on their platforms.

Powered by our state-of-the-art image recognition technology, our Adult Content Detection can process large amounts of images and videos, and works instantly and in a fully automated way. In accuracy, it performs 26% better than the leading adult detection models on the market. Read more about the performance comparison.

Want to try our Adult Content Detection system for keeping your platform safe? Get in touch with us to get started.

What is Image Recognition? Technology, Applications, and Benefits

What if technology could perceive the world around us the way we do? That’s exactly what image recognition makes possible. It enables artificial intelligence to spot and ‘understand’ objects, people, places, text, and actions in digital images, videos, and livestreams. From facial recognition and object detection to medical image analysis, this technology is already a part of our everyday life.

AI image recognition technology has applications across the board. Businesses in media, retail, healthcare, security, and beyond are using it to improve productivity, automate tasks, and gain insights into various aspects of their operations. Despite the obvious benefits, many companies don’t even realize they can use image recognition, or think it’s not affordable.

In this post, we’ll explore how it works and the technology behind it, its benefits and future developments, and how you can make the most of it for your business.

What is Image Recognition?

Image recognition is the ability of computer software to recognize objects, places, people, faces, logos, and even emotions in digital images and videos. It is a subset of computer vision and as such, it is transforming how computers process and interpret visual data.

At its core, image recognition algorithms rely on machine learning and, increasingly, on deep learning. An algorithm is trained on large datasets of visual content, allowing it to recognize and categorize content based on patterns it has learned. Unlike the human brain, which interprets images through experience, artificial intelligence processes them as complex mathematical matrices. This enables it to recognize people and perform object detection with high levels of accuracy.
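
To make the idea of an image as a mathematical matrix concrete, here is a minimal sketch in plain Python. The pixel values are made up for illustration. Real images are far larger, and color images store several numbers per pixel instead of one.

```python
# A tiny 3x4 grayscale "image": each number is one pixel's brightness (0-255).
# The left half is black and the right half is white.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]

height = len(image)
width = len(image[0])

# Any statistic of the image is just arithmetic on the matrix entries.
mean_brightness = sum(sum(row) for row in image) / (height * width)

print(height, width)    # 3 4
print(mean_brightness)  # 127.5
```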

How It Differs from Computer Vision

While image recognition and computer vision are often used interchangeably, they are in fact different.

Computer vision is a broader field of artificial intelligence that encompasses the many tasks machines can perform to extract meaning from images and videos. It includes image recognition, as well as image segmentation, object detection, motion tracking, and 3D scene reconstruction, among others.

Image recognition is just one task within this broader domain of computer vision. Its focus is on identifying and classifying objects, people, places, text, patterns and other attributes within an image or a video.

Why It Matters

Image recognition is much bigger than just a fad that helps us unlock our phones. The technology behind it is playing a key role in making the systems that we use become smarter, safer, and more efficient.

For businesses of all sizes and across industries, image recognition offers new methods for optimising operations and creating enhanced experiences for end users. It contributes to the creation of smarter systems that are also easier to work with and more responsive.

Security systems also benefit from this technology. It provides facial recognition technology, threat identification mechanisms, and deviations detection, which makes it crucial in fraud prevention and safety in various fields — from cybersecurity and banking to manufacturing.

Since it enables machines to interpret data from images and videos with high accuracy, image recognition boosts automation and thus helps improve overall efficiency and productivity. Its applications are numerous in diverse fields, such as finance, manufacturing, and retail, among others.

How Does Image Recognition Work?

Image recognition applications are powered by complex computer vision technology that evolves as we speak. In the sections below, we take a dive into the basics about machine learning, deep learning, and neural networks. We also review the key steps in the image recognition process, and the types of tasks that it can perform.

The Technology Behind It

Image recognition is largely powered by deep learning (DL) models. Deep learning is a subset of machine learning (ML) that uses multi-layered algorithms known as neural networks.

Traditional, or ‘non-deep’, machine learning is the classic method of enabling computers to learn from data, analyze it, and make decisions. When it uses neural networks, they are simple ones consisting of only a few computational layers. Deep learning techniques, on the other hand, employ more than three computational layers in their neural network architecture. In practice, deep networks often have dozens or hundreds of layers, and some have more.

Neural networks imitate the way the human brain works in terms of processing information. They analyze data and make predictions or decisions. To serve the purposes of image recognition, neural networks are trained on large datasets containing labeled images. The goal of this process is for the networks to learn to spot patterns and features so that they can identify them in images and videos later on.

The biggest power of deep learning algorithms is that they can grasp complex patterns from raw data. In the context of image recognition, this means they can differentiate between objects and identify complex details in images and videos through grasping both simple and complex features.

Within the realm of neural networks, convolutional neural networks have a particular importance for deep learning image recognition technology because of their hierarchical structure. Their strength is in scanning images with a number of filters already in the early layers, which are able to identify even minor features like textures and edges. In the deeper layers, the CNNs can detect more complex patterns like objects, shapes, and scenes.
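
The filter scanning described above can be sketched in a few lines of plain Python. This is a simplified illustration, not a real CNN layer: the filter values here are written by hand, whereas a CNN learns its filter values during training, and the function and variable names are chosen for this example only.

```python
def convolve2d(image, kernel):
    """Slide a kernel over an image (no padding, stride 1).

    Like CNN libraries, this does not flip the kernel, so strictly
    speaking it computes a cross-correlation.
    """
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Dark left half, bright right half: a vertical edge runs down the middle.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written filter that responds where brightness rises from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

response = convolve2d(image, vertical_edge)
print(response)  # [[0, 2, 0], [0, 2, 0]]
```

The response is zero over the flat regions and peaks exactly where the edge sits, which is the kind of low-level feature the early layers pick up.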

Key Steps in the Process

There are three main steps in the process of image recognition, whether through deep learning or machine learning — data collection and labeling, training and model development, and its deployment for actual use.

The first step of data collection and labeling entails a large and diverse set of images which spans the different types of content — the variety of objects, scenes, or people — which later needs to be recognized. The images have to be categorized and labeled, so that the model would be able to spot them adequately. This process often involves human input too, so it is quite labor-intensive and time-consuming, but is a must. The bigger the training data is, the more effective the work of the model will be. The same goes for the accuracy of the datasets and their labeling.

The next step of the process is the model training and development. The deep learning image recognition model, often a convolutional neural network (CNN), is trained on the labeled images. It has to learn to recognize patterns in the data and to minimize prediction errors. This stage is quite heavy on computational resources, and often requires fine-tuning of hyperparameters to achieve maximum performance of the model.
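
What "learning to minimize prediction errors" means can be shown on a toy scale. The sketch below trains a single artificial neuron with gradient descent on six made-up labeled examples. It is a stand-in for illustration only: a real image recognition model has millions of parameters and is trained on images rather than on one number per example. The learning rate and step count are the kind of hyperparameters that get tuned in practice.

```python
import math

# Toy labeled dataset: one feature per example (say, average brightness)
# and a label of 0 or 1. All values are invented for this sketch.
data = [(0.1, 0), (0.2, 0), (0.3, 0), (0.7, 1), (0.8, 1), (0.9, 1)]


def predict(w, b, x):
    """Probability that x belongs to class 1 (logistic function)."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def loss(w, b):
    """Mean cross-entropy: the prediction error that training minimizes."""
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(data)


def train(steps=2000, learning_rate=1.0):
    """Plain gradient descent on the weight w and the bias b."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = sum((predict(w, b, x) - y) * x for x, y in data) / len(data)
        grad_b = sum(predict(w, b, x) - y for x, y in data) / len(data)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


initial_loss = loss(0.0, 0.0)
w, b = train()
final_loss = loss(w, b)
print(f"loss before training: {initial_loss:.3f}, after: {final_loss:.3f}")
```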

The third step is the real-world deployment of the model — within mobile apps, security systems, or the like. At this stage, the model has to be adjusted for the actual use and modified in a way that it can process large amounts of data with high accuracy. It often involves new training and monitoring to guarantee its optimal performance.

Types of Image Recognition Tasks

As a subset of computer vision, image recognition technology can perform a wide variety of tasks that have their applications in many different industries. The four main tasks include object detection, facial recognition, scene classification, and optical character recognition (OCR).

Object detection allows us to identify objects within an image or video. It differs from image classification which involves labelling the whole image. Besides the capability to automatically identify objects, object detection also includes the identification of their locations with the help of drawing bounding boxes around them. This is crucial in a number of computer vision applications like security systems, quality control, and self-driving vehicles.
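
Bounding boxes are commonly stored as corner coordinates, and a predicted box is often compared with a reference box using intersection over union (IoU). The sketch below shows that calculation. The coordinate convention and the example boxes are assumptions chosen for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_x_min = max(box_a[0], box_b[0])
    inter_y_min = max(box_a[1], box_b[1])
    inter_x_max = min(box_a[2], box_b[2])
    inter_y_max = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter_w = max(0, inter_x_max - inter_x_min)
    inter_h = max(0, inter_y_max - inter_y_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union else 0.0


predicted = (10, 10, 50, 50)   # box drawn by a hypothetical detector
reference = (30, 30, 70, 70)   # hand-labeled box for the same object
print(round(iou(predicted, reference), 3))  # 0.143
```

An IoU of 1.0 means the boxes coincide, and 0.0 means they do not overlap at all.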

Identification and verification of people is done with the help of facial recognition in a number of different contexts — from security and surveillance to image tagging on social media and personalization of products and services. The image recognition model maps the individual’s facial features and thus crafts a unique biometric signature. It is then used to identify a face in different settings.
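
One common way to use such a signature is to represent it as a vector of numbers and compare two vectors by cosine similarity. The sketch below assumes that representation. The three-number vectors and the 0.8 threshold are invented for illustration, and real systems use much longer vectors produced by a trained model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def same_person(signature_a, signature_b, threshold=0.8):
    """Treat two signatures as a match if they point in a similar direction."""
    return cosine_similarity(signature_a, signature_b) >= threshold


enrolled = [0.90, 0.10, 0.40]     # hypothetical signature stored at sign-up
probe_same = [0.85, 0.15, 0.42]   # same person, different photo
probe_other = [0.10, 0.90, 0.20]  # a different person

print(same_person(enrolled, probe_same))   # True
print(same_person(enrolled, probe_other))  # False
```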

With the help of scene classification, the recognition of the context and environment of a visual becomes possible. This image recognition task is important in photo album sorting, image catalogue and listings analysis, and self-driving vehicles, among others. Through scene classification, image recognition software can provide the larger context of digital images, which helps their overall understanding.

Last but not least, optical character recognition (OCR) allows the detection and extraction of text from images. Its applications are manifold, from identifying vehicle license plates to digitizing paper documents. OCR enables the seamless conversion of text on paper into a digital format that can be analyzed, searched, and archived.

Applications of Image Recognition

The use of the technology is wider than we might suspect. Some of its most common applications include online platforms, healthcare and medical imaging, security, and self-driving cars.

Online Platforms

Image recognition improves user experience, enhances security and boosts personalization for different types of online platforms.

- Dating platforms: It helps identify fake profiles through analysis of profile images for potential manipulations and ID verification. Content moderation based on image recognition filters out inappropriate content such as offensive or explicit images, promoting safety.

- Social media and content sharing: Image recognition software provides auto-tagging in photos, which is a popular perk on social media. Its use in content moderation is also crucial, as it detects and removes offensive content, contributing to the safety of online platforms. Image recognition also provides for enhanced user interactions through targeted content and personalized ads.

- E-commerce: Image recognition identifies images in online search. With its help, people can conduct image-based searches for products, which makes the shopping experience much easier. Through analysis of previous purchases, image recognition helps the creation of personalized recommendations too.

Healthcare and Medical Images

With the help of AI-powered image analysis, healthcare providers have invaluable tools for improving diagnostics and early detection of illnesses. Image recognition is particularly useful in the identification of anomalies in X-rays, MRIs, and CT scans through automated image analysis.

Image recognition systems can identify irregularities in scans such as tumors, fractures, or other signs of disease, which makes the diagnostic process faster and less prone to errors. They also help automate tasks, allowing medical staff to focus on the more significant aspects of patient care.

Security and Surveillance

As a subset of computer vision, image recognition strengthens security systems. Facial recognition, object detection, and monitoring for suspicious activity improve overall security. Image recognition systems can identify individuals in video footage and cross-check them against databases, boosting threat detection and improving response times.

Face recognition helps access control and person identification in public or secure areas. Automatic detection of unusual items and unauthorised behavior is also enabled by object detection.

Autonomous Systems

Autonomous systems like self-driving vehicles and drones rely on computer vision for the interpretation of visual information that allows them to move in space. Equipped with cameras and sensors that constantly supply new images, they can understand their surroundings and differentiate the elements on the road like vehicles, pedestrians, and traffic signs. This allows them to avoid obstacles and incidents and navigate in complex environments.

Benefits of Image Recognition

Image recognition powered by deep learning or machine learning holds immense potential for both businesses and individuals.

Its benefits are manifold, including:

- Efficiency and automation: Image recognition algorithms help decrease manual work in repetitive and time-consuming tasks in all kinds of industries. Automating processes like defect detection saves up a ton of time and effort in fields like manufacturing and engineering.

- Improved Accuracy: When it comes to tasks that require high levels of precision, as well as consistency, AI-powered image recognition can perform better than us. In areas like medical image analysis, the increased accuracy that it can offer can be of huge importance.

- Enhanced User Experiences: Automatic tagging and personalized content are perks that many of us enjoy on social media channels. In e-commerce and retail, capabilities such as visual search of products and tailored recommendations are certainly driving customer satisfaction.

- Scalability: Businesses that have to handle large-scale visual data in an effective way simply need image recognition. It allows them to keep up on accuracy and performance, while having the possibility to increase the volume of processed visual information.

Challenges in Image Recognition

Data Privacy and Ethical Concerns

Image recognition software raises a number of ethical and data privacy concerns, and they mostly revolve around facial recognition technology. While its use is welcomed when it comes to photo tagging, it can also be used for unauthorized surveillance or even criminal misuse. Monitoring people without their consent and handling sensitive personal data are issues that still haven’t found an all-round solution. Balancing between personal privacy and tech advancements is certainly a field that proves challenging — as it requires both adequate policy-making and enforcement.

Bias in AI Models

AI models are trained on datasets — and the fairness of the datasets will inevitably reflect on the fairness of these models. If the datasets are not diverse enough, this can lead to biased patterns in the image recognition model, which has proven to be the case for people of color, for example. Careful selection of diverse training data is thus key to ensure fair representation and treatment.

High Computational Requirements

Deep learning models like convolutional neural networks (CNNs) that are used in computer vision systems need a lot of computational power. This requires large-scale GPUs and special infrastructure, which can be expensive and complex to build — and difficult to access for smaller businesses.

Handling Complex Contexts

While AI-powered image recognition develops rapidly, there are some limitations to the current technology in terms of understanding complex contexts and deeper meanings in images. The biggest challenges are in grasping emotional and social contexts, as well as understanding interactions.

The Future of Image Recognition

As technological advancements in the AI field are already reshaping our concepts of what’s possible, we can expect that the coming years will bring significant innovations in the field of machine learning image recognition and computer vision as a whole.

Emerging Trends

A number of new trends in the field are developing, but there are two in particular that we believe hold significant potential.

Integration of real-time image recognition algorithms in augmented reality (AR) and virtual reality (VR) is one of them. It boosts the capabilities of AR and VR applications by enabling them to interact with and identify objects present in the real world. The uses of this integration are numerous, including retail, simulations, and gaming.

The other significant trend is the development of AI-powered multimodal systems that combine image, text, and audio. This helps the creation of applications that have a greater understanding of human context and our ways of communication.

Potential Innovations

The possible avenues for innovation in computer vision are expanding constantly, but at the moment, there are three that we believe are truly intriguing.

A novel application is in smart cities. With the help of image recognition, they can be improved with smart traffic management and enhanced safety.

An especially important application of the technology is the creation of advanced medical solutions. They can become crucial tools for predictive diagnostics, early disease detection, and individually tailored healthcare plans.

Ethical AI Development

The development of machine learning image recognition should go hand in hand with upholding high ethical standards. The most important issues include fair models, privacy, and transparency. Achieving these goals entails the usage of diverse and fair datasets for model training, as well as prioritising privacy in AI development.

How AI-Driven Solutions Are Transforming Image Recognition

As a pioneer in the image recognition field, we at Imagga have been exploring and developing AI for more than a decade. We embed cutting-edge AI technology to provide our clients with the best tools for their business needs.

Our AI-powered technology offers powerful tools for image tagging, facial recognition, visual search, adult content detection, and more. We strive to drive innovation while integrating an ethical approach to all our work. Let's get in touch to discuss your case.

Conclusion

Image recognition is one of the most powerful and impressive applications of AI today. It is bringing unseen innovation across industries — from e-commerce and social media to healthcare and manufacturing.

Driven by an ethical AI development approach, novel applications hold immense potential. They are able to help improve the way we work, interact with machines, and get healthcare, among many other fields — with promising potential innovations in multimodal AI systems, practical applications in healthcare and smart cities, and real-time image recognition in AR and VR.

The technology can achieve remarkable accuracy when trained on high-quality and diverse datasets.

Our cognitive process involves perceiving objects through context, experience and memory. Computer vision, on the other hand, entails image processing through mathematical matrices.

Image recognition has numerous real-time applications like face detection, security surveillance, content moderation, AR and VR, autonomous vehicles and systems, and more.

Image recognition models are trained on diverse datasets, which enables them to process images under different lighting conditions, backgrounds, and angles.

The main ethical considerations include user privacy, transparency of technological development, and bias in AI models due to limited training data.

Integration of AI-powered image recognition tools is possible through cloud-based services, APIs, and custom-made models.

Image Tagging | What Is It? How Does It Work?

The digital world is a visual one — and making sense of it is based on the premise of quick visual searching. Companies and users alike need effective ways to discover visuals by using verbal cues like keywords. Image tagging is the way to realize that, as it enables the classification of visuals through the use of tags and labels. This allows for the quick searching and identifying of images, as well as the adequate categorization of visuals in databases.

For both businesses and individuals, it’s essential to know what their visual content contains. This is how people and companies can sort through the massive amounts of images that are being created and published online constantly — and use them accordingly.

Here is what image tagging constitutes — and how it can be of help for your visual database.

What Is Image Tagging?

From stock photography and advertising to travel and booking platforms, a wide variety of businesses have to operate with huge volumes of visual content on a daily basis. Some of them also operate with user-generated visual content that may also need to be tagged and categorized.

This process becomes manageable through the use of picture tagging. It allows the effective and intuitive search and discovery of relevant visuals from large libraries on the basis of preassigned tags.

At its core, image tagging simply entails setting keywords for the elements that are contained in a visual. For example, a wedding photo will likely have the tags ‘wedding’, ‘couple’, ‘marriage’, and the like. But depending on the system, it may also have tags like colors, objects, and other specific items and characteristics in the image — including abstract terms like ‘love’, ‘relationship’, and more.

Once visuals have assigned keywords, users and businesses can enter words relevant to what they’re looking for into a search field to locate the images they need. For example, they can enter the keyword ‘couple’ or ‘love’ and the results can include photos like the wedding one from the example above.

It’s important to differentiate between image tagging and metadata. The latter typically contains technical data about an image, such as height, width, resolution, and other similar parameters. Metadata is automatically embedded in visual files. On the other hand, tagging entails describing with keywords what is visible in an image.

How Does Image Tagging Work?

The process of picture tagging entails the identification of people, objects, places, emotions, abstract concepts, and other attributes that may pertain to a visual. They are then ascribed to the visual with the help of predefined tags.

When searching within an image library, users can thus write the keywords they are looking for, and get results based on them. This is how people can get easy access to visuals containing the right elements that they need.
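The keyword lookup described above can be sketched as a small inverted index that maps each tag to the images carrying it. This is only an illustrative sketch: the file names, tags, and the `build_tag_index` and `search` helpers are hypothetical and do not represent how any particular tagging product stores its data.

```python
from collections import defaultdict


def build_tag_index(library):
    """Map each lowercase tag to the set of image names that carry it."""
    index = defaultdict(set)
    for image_name, tags in library.items():
        for tag in tags:
            index[tag.lower()].add(image_name)
    return index


def search(index, *keywords):
    """Return the images that carry every requested keyword."""
    if not keywords:
        return set()
    # .get() avoids creating empty entries for unknown keywords.
    matches = [index.get(keyword.lower(), set()) for keyword in keywords]
    return set.intersection(*matches)


# Hypothetical library: image name -> preassigned tags.
library = {
    "wedding.jpg": ["wedding", "couple", "marriage", "love"],
    "beach.jpg": ["couple", "sea", "sunset"],
    "flower.jpg": ["flower", "pink", "insect"],
}

index = build_tag_index(library)
print(sorted(search(index, "couple")))
print(sorted(search(index, "couple", "love")))
```

Searching for 'couple' alone matches both the wedding and the beach photo, while adding 'love' narrows the result to the wedding photo only.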

With the development of new technology, photo tagging has evolved to a complex process with sophisticated results. It not only identifies the actual items, colors and shapes contained in an image, but an array of other characteristics. For example, image tagging can include the general atmosphere portrayed in an image, concepts, feelings, relationships, and much more.

This high level of complexity that image tagging can offer today allows for more robust image discovery options. With descriptive tags attached to visuals, the search capabilities increase and become more precise. This means people can truly find the images they’re after.

Applications of Image Tagging

Photo tagging is essential for a wide variety of digital businesses today. E-commerce, stock photo databases, booking and travel platforms, traditional and social media, and all kinds of other companies need adequate and effective image sorting systems to stay on top of their visual assets.

Read how the Imagga Tagging API helped Unsplash power over 1 million searches on its website.

Image tagging is helpful for individuals too. Arranging and searching through personal photo libraries is tedious, if not impossible, without user-friendly image categorization and keyword discoverability.

Eden Photos used Imagga’s Auto-Tagging API to index users’ personal photos and provide search and categorization capabilities across all Apple devices connected to their iCloud accounts.

Types of Image Tagging

Back in the day, image tagging could only be done manually. When working with smaller amounts of visuals, this was still possible, even though it was a tedious process.

In manual tagging, each image has to be reviewed. Then the person has to set the relevant keywords by hand — often from a predefined list of concepts. Usually it’s also possible to add new keywords if necessary.

Today, image tagging is automated with the help of software. Automated photo tagging is far faster and more efficient than the manual process. It also offers great capabilities in terms of sorting, categorizing and content searching.

Instead of a person sorting through the content, an auto image tagging solution processes the visuals. It automatically assigns the relevant keywords and tags on the basis of the findings supplied by the computer vision capabilities.

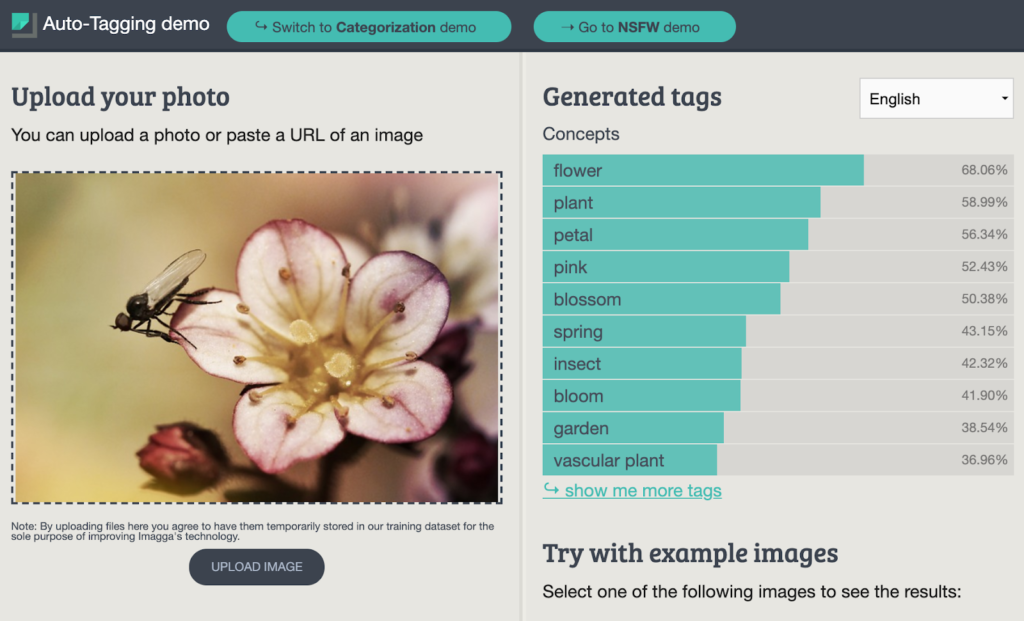

Auto Tagging

AI-powered image tagging — also known as auto tagging — is at the forefront of innovating the way we work with visuals. It allows you to add contextual information to your images, videos and live streams, making the discovery process easier and more robust.

How It Works

Imagga’s auto tagging platform allows you to automatically assign tags and keywords to items in your visual library. The solution is based on computer vision, using a deep learning model to analyze the pixel content of every photo or video. In this way, the platform identifies the features of people, objects, places and other items of interest. It then assigns the relevant tag or keyword to describe the content of the visual.

The deep learning model in Imagga’s solution operates on the basis of more than 7,000 common objects. It thus has the ability to recognize the majority of items necessary to identify what’s contained in an image.

In fact, the image recognition model becomes more and more precise with regular use. It ‘learns’ from processing hundreds and thousands of visuals — and from receiving human input on the accuracy of the keywords it suggests. This makes using auto tagging a winning move that not only pays off, but also improves with time.

Benefits

Automated image tagging is of great help to businesses that rely on image searchability. It saves immense amounts of time and effort that would otherwise be spent on manual tagging, which may not even be feasible given the gigantic volumes of visual content that have to be sorted.

Auto tagging allows companies not only to boost their image databases, but to be able to scale their operations as they need. With automated image tagging, businesses can process millions of images — which enables them to grow without technical impediments.

Examples

You can try out our generic model demo to explore the capabilities of Imagga’s auto tagging solution. You can insert your own photo or use one of the examples to check out how computer vision can easily identify the main items in an image.

In the example above, the image contains a pink flower with an insect on it. The image tagging solution processes the visual in no time, supplying you with tags containing major concepts. You also get a confidence percentage for each identified term.

The highest ranking ones typically include the basics about the image — such as the object or person and the main details about them. Further down the list of generated tags, you can also find colors, shapes, and other terms describing what the computer vision ‘sees’ in the picture. They can also include notions about space, time, emotions, and similar.
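Since every tag comes with a percentage, a common follow-up step is to keep only the highest ranking tags. The sketch below shows one way to do that. The response shape, the sample values, and the `top_tags` helper are assumptions made for illustration; check the API documentation for the actual response format.

```python
def top_tags(response, min_confidence=30.0, limit=5):
    """Return (tag, confidence) pairs at or above the cutoff, best first."""
    tags = response["result"]["tags"]
    kept = [
        (entry["tag"]["en"], entry["confidence"])
        for entry in tags
        if entry["confidence"] >= min_confidence
    ]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:limit]


# Made-up sample shaped like a tagging response (assumed structure and values).
sample_response = {
    "result": {
        "tags": [
            {"confidence": 98.2, "tag": {"en": "flower"}},
            {"confidence": 61.5, "tag": {"en": "pink"}},
            {"confidence": 47.0, "tag": {"en": "insect"}},
            {"confidence": 12.3, "tag": {"en": "summer"}},
        ]
    }
}

for name, confidence in top_tags(sample_response):
    print(f"{name}: {confidence:.1f}%")
```

The cutoff of 30 is arbitrary here. A lower value keeps more of the abstract terms further down the list, while a higher one keeps only the main subjects.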

How to Improve Auto Tagging with Custom Training

The best perk of auto-tagging is that it can get better with time. The deep learning model can be trained with additional data to recognize custom items and provide accurate tagging in specific industries.

With Imagga’s custom training, your auto-tagging system can learn to identify custom items that are specific to your business niche. You can set the categories to which visual content should be assigned.

Custom training of your auto-tagging platform allows you to fully adapt the process to the particular needs of your operations — and to use the power of deep learning models to the fullest. In particular, it’s highly useful for businesses in niche industries or with other tagging particularities.

Imagga’s custom auto-tagging can be deployed in the cloud, on-premise, or on the Edge.

FAQ

Image tagging is a method for assigning keywords to visuals, so they can be categorized. This, in turn, makes image discovery easier and smoother.

Image tagging is used by users and companies alike. It is necessary for creating searchable visual libraries of all sizes — from personal photo collections to gigantic business databases.

Yes. For SEO, image tagging focuses on keywords, alt text, and structured data that help search engines understand and rank images in web search. For internal search (e.g., DAMs, eCommerce platforms), tagging prioritizes detailed metadata that enables users to filter and retrieve images efficiently within a closed system.

In eCommerce, precise image tagging enhances product discoverability and recommendation algorithms, leading to higher engagement and sales. When tags include detailed attributes like color, texture, and style, search engines and on-site search functions can better match customer queries to relevant products, reducing friction in the buyer’s journey.

Top 5 Content Moderation Tools You Need to Know About

Keeping online content safe isn’t just a priority - it’s a necessity. For businesses, AI-driven content moderation tools have become critical in protecting users and ensuring compliance with ever-evolving regulations. One of the most vital tasks in this space? Filtering adult content. Today’s AI-powered tools offer real-time protection, helping platforms stay safe and user-friendly.

From social media and e-commerce to educational websites, dating platforms and digital communities, content moderation serves two primary goals: meeting legal obligations and safeguarding user trust. Whether it's about protecting young users or maintaining a brand’s image, the stakes are higher than ever. Below, we explore why adult content filtering matters, which businesses need it most, and five leading AI tools to help you stay compliant and safe.

Why Adult Content Detection Is Essential

As regulatory frameworks tighten - the EU’s Digital Services Act, Section 230 of the Communications Decency Act in the U.S., and the Online Safety Act in the UK - platforms are legally bound to protect their users. Beyond compliance, adult content detection is crucial for fostering a safe and welcoming online experience. Read more on what the DSA & AI Act mean for content moderation.

This process involves removing explicit user-generated content from platforms where it doesn’t belong. AI-driven algorithms excel here, scanning images, videos, and live streams in real-time to catch explicit material with impressive accuracy.

Businesses like social media platforms, e-commerce marketplaces, dating apps, and educational websites face unique challenges. For platforms catering to minors or educational spaces, the stakes are even higher. Balancing user safety and freedom of expression is no easy task, but modern AI models are stepping up to solve it.

Who Needs Content Moderation Tools?

Content moderation tools are not just for social media giants. Any platform that allows user-generated content needs a strategy for keeping things clean. Here’s a quick look at who benefits most:

- Dating platforms - ensuring shared photos and messages remain appropriate

- E-commerce sites - keeping user-uploaded product photos and reviews family-friendly

- Online communities - gaming forums, interest groups, and other communities with visual content sharing

- Educational platforms - protecting minors and maintaining professional environments

- Social media and messaging apps - where user-generated content flows constantly

Top 5 Tools for Content Moderation

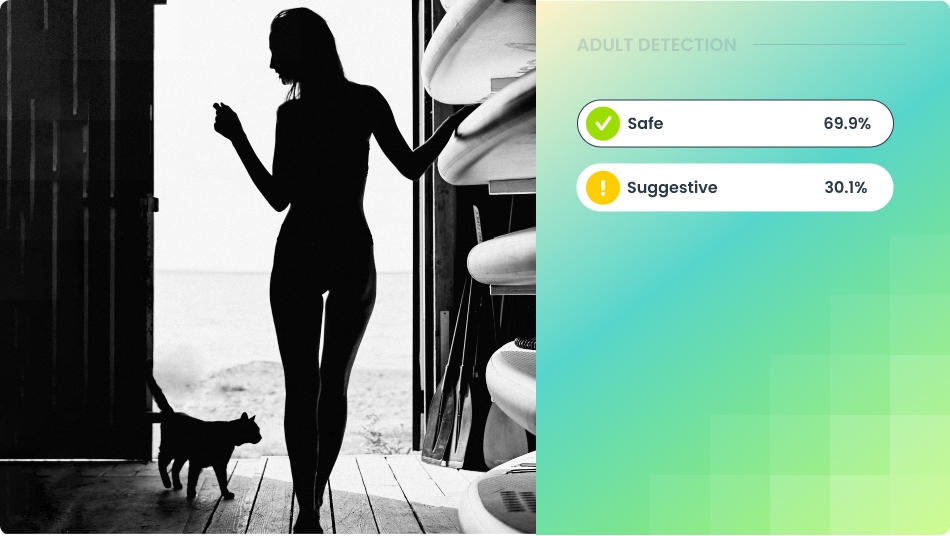

1. Imagga Adult Content Detection

The Imagga Adult Content Detection model has been around for a while, but was previously known as NSFW detection. After significant improvements, it has now achieved a whopping 98% recall for explicit content detection and 92.5% overall model accuracy. The new model has been trained on an expanded and diversified training dataset consisting of millions of images of different types of adult content.

The model is able to differentiate content in three categories with high precision: explicit, suggestive, and safe. It thus provides reliable detection for both suggestive visuals containing underwear or lingerie and for explicit nudes and sexual content. The adult content detection operates in real time, preventing inappropriate content from going live unnoticed. Imagga offers both a Cloud API and on-premise deployment.

With a stellar recall rate of 98% for explicit content and 92.5% accuracy overall, Imagga Adult Content Detection sets new standards in content moderation.
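To show how a platform might act on the three categories, here is a minimal sketch that turns per-category scores into a moderation decision. The score format, the threshold values, and the `moderate` function are hypothetical and not part of any documented API. Each platform would tune the thresholds to its own policy.

```python
def moderate(scores, explicit_threshold=0.8, suggestive_threshold=0.6):
    """Map category scores (0.0 to 1.0) to 'block', 'review', or 'allow'.

    Explicit content is checked first so that it always takes priority
    over a suggestive score on the same image.
    """
    if scores.get("explicit", 0.0) >= explicit_threshold:
        return "block"
    if scores.get("suggestive", 0.0) >= suggestive_threshold:
        return "review"
    return "allow"


# Hypothetical scores for three uploads.
print(moderate({"explicit": 0.95, "suggestive": 0.03, "safe": 0.02}))
print(moderate({"explicit": 0.10, "suggestive": 0.75, "safe": 0.15}))
print(moderate({"explicit": 0.01, "suggestive": 0.04, "safe": 0.95}))
```

Sending suggestive content to human review rather than blocking it outright is one possible design choice. A platform for minors might block both categories, while an art community might allow suggestive content entirely.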

2. Google Cloud Vision AI

The Cloud Vision API was launched by Google in 2015 and is based on advanced computer vision models. At first, it was targeting mainly image analysis like object and optical character recognition. Today it also boasts a special tool for detecting explicit content called SafeSearch. It works with both local and remote image files.

The Cloud Vision API is powered by the wide resource network of Google, which fuels its accuracy in adult content detection too. SafeSearch detects inappropriate content in five categories — adult, spoof, medical, violence, and racy. The API is highly scalable and can process significant amounts of detection requests, which makes it attractive for platforms with large-scale user-generated content.

The Google Cloud Vision AI has a readily available API (REST and RPC) and can be easily integrated with other Google Cloud services.
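SafeSearch reports each of the five categories as a likelihood rating (from VERY_UNLIKELY to VERY_LIKELY) rather than a numeric score. The sketch below shows one way to pick out the categories that reach a chosen rating. The sample annotation and the `flagged_categories` helper are made up for illustration, and the code works on a plain dictionary instead of calling the API.

```python
# Likelihood ratings in ascending order of certainty.
LIKELIHOOD_ORDER = [
    "UNKNOWN",
    "VERY_UNLIKELY",
    "UNLIKELY",
    "POSSIBLE",
    "LIKELY",
    "VERY_LIKELY",
]


def flagged_categories(annotation, minimum="LIKELY"):
    """Return, in alphabetical order, the categories rated at or above `minimum`."""
    floor = LIKELIHOOD_ORDER.index(minimum)
    return sorted(
        category
        for category, likelihood in annotation.items()
        if LIKELIHOOD_ORDER.index(likelihood) >= floor
    )


# Made-up annotation covering the five SafeSearch categories.
sample_annotation = {
    "adult": "VERY_UNLIKELY",
    "spoof": "UNLIKELY",
    "medical": "POSSIBLE",
    "violence": "VERY_UNLIKELY",
    "racy": "LIKELY",
}

print(flagged_categories(sample_annotation))
print(flagged_categories(sample_annotation, minimum="POSSIBLE"))
```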

3. Amazon Rekognition

Amazon Web Services (AWS) introduced Amazon Rekognition in 2016. It provides computer vision tools for the cloud, including content moderation. Amazon Rekognition can detect and remove inappropriate, unwanted, or offensive content, including adult content.

Powered by a deep learning model, Amazon Rekognition can identify unsafe or inappropriate content in both images and videos with high precision and speed. The identification can be based on general standards, or can be business-specific. In particular, businesses can use the Custom Moderation option to adapt the model to their particular needs. They can upload and annotate images to train a custom moderation adapter.

The enterprise-grade scalable model can be integrated hassle-free with a Cloud API, as well as with other AWS services.

4. Sightengine

Sightengine is a platform solely focused on content moderation and image analysis. It boasts 110 moderation classes for filtering out inappropriate or harmful content, including nudity. Sightengine works with images, videos, and text. It also has AI-image and deepfake detection tools, as well as a feature for validating profile photos.