Boost moderation flow with the help of AI

Ensuring that inappropriate content is effectively moderated helps protect users and maintain the integrity of online platforms. AI-driven solutions offer speed and scalability, while human moderators provide nuanced judgment. Combining both approaches often yields the best results, leveraging the strengths of each.

Protect Your Platform

Filter inappropriate, harmful, or illegal images and videos, such as explicit adult content, graphic violence, hate symbols, and drug-related imagery.

Scale with Ease

Keep pace as the volume of visual content continues to grow rapidly.

Trusted by industry-leading companies.

What is Visual Content Moderation

AI-powered visual content moderation automatically analyzes images and videos to swiftly detect and remove inappropriate content. This keeps your platform safe, upholds your brand’s Trust and Safety program, and protects your clients and reputation. Essential for social media, dating apps, marketplaces, and forums, AI-driven moderation helps keep the content on your website aligned with your standards.

Content Moderation Challenges

Visual content moderation based on AI and machine learning is proving its power in many different fields. Yet challenges remain, and they push us to keep improving.

The goal of constantly improving content moderation tactics is to foster safe online environments while protecting freedom of expression and real-life context.

Why Imagga Technologies

Since the first major AI revolution in computer vision in 2012, we’ve perfected visual AI models, achieving a balance between scalability, accuracy, and precision. Our robust hardware and scalable cloud infrastructure ensure top performance for every image processed, drawing from over a decade of expertise. Our technology is designed to scale seamlessly with your needs as your user base and content volume increase.

Easy & Fast Deployment

Our solutions integrate seamlessly into existing systems, delivering real-time image recognition through a simple HTTP request.
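As a rough illustration of what moderating an image over a single HTTP request could look like, here is a minimal Python sketch. The endpoint URL, the `image_url` parameter, the HTTP Basic authentication scheme, and the function names are all assumptions made for this example; the actual API may differ, so check the official API documentation before integrating.

```python
import base64
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint: replace with the URL from the provider's API docs.
MODERATION_ENDPOINT = "https://api.example.com/v1/moderate"


def build_moderation_request(image_url, api_key, api_secret,
                             endpoint=MODERATION_ENDPOINT):
    """Build a GET request asking the service to classify one image."""
    # URL-encode the image address so it is safe to pass as a query parameter.
    query = urllib.parse.urlencode({"image_url": image_url})
    request = urllib.request.Request(f"{endpoint}?{query}", method="GET")
    # HTTP Basic auth is assumed here; the real scheme may differ.
    token = base64.b64encode(f"{api_key}:{api_secret}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    return request


def moderate(image_url, api_key, api_secret, timeout=10.0):
    """Send the request and return the decoded JSON response."""
    request = build_moderation_request(image_url, api_key, api_secret)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Calling `moderate("https://example.com/photo.jpg", "your_key", "your_secret")` would send the request and return whatever JSON the service responds with. Building the request in a separate function keeps it easy to inspect or log before anything is sent over the network.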

Custom Fit for Your Industry

With over 400 custom classifiers trained, we tailor AI models to detect subtle differences that generic solutions miss.

Privacy & Security

Deploy our solution on-premises or within air-gapped infrastructure to meet strict privacy requirements. Your data stays secure on your servers, which helps you comply with strict regulations.

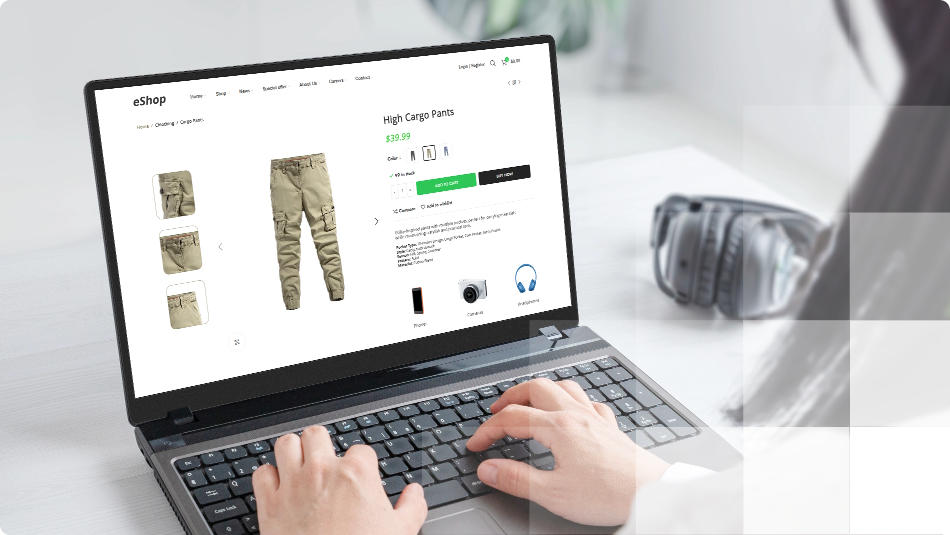

UGC PLATFORMS

Providing reliable and easy-to-implement content moderation

ViewBug is a platform for visual creators connecting millions of artists in a community with photography tools to help them explore and grow their craft.

Ready to Get Started?

Let’s discuss your needs and explore how our technology can support your goals.