Many companies across various industries – including dating platforms, online marketplaces, advertising agencies, and content hosting services – have been using Imagga’s Adult Content Detection Model to detect NSFW (Not Safe For Work) visuals, ensuring their platform remains secure and user-friendly.

We are excited to announce that we’ve significantly improved the model, enhancing its accuracy and ability to provide nuanced content classification. We’ve achieved 98% recall for explicit content detection and 92.5% overall model accuracy, outperforming competitor models by up to 26%.

In this blog post, we’ll explore the advancements of the Adult Content Detection Model (previously called the NSFW model), demonstrate how it outperforms previous versions and competitor models, and discuss the tangible benefits it offers to businesses that need effective and efficient content moderation.

Key features of Imagga’s Adult Content Detection model:

- Exceptional model performance in classifying adult content

- Real-time processing of large volumes of images and short videos

- Integrates into existing systems with a few lines of code

Evolution of the Model

We expanded and diversified the training dataset for the new model significantly. The new model has been trained and tested on millions of images, achieving extensive coverage and diversity of adult content. In addition, a vast number of visually similar images belonging to different categories have been manually collected and annotated. This meticulous process contributes to the outstanding performance of our model in differentiating explicit, suggestive, and safe images, even in difficult cases.

The dataset has been refined by applying state-of-the-art techniques for data enrichment, cleaning, and selection. By enhancing the quality and diversity of our training data, we’ve greatly improved the model’s ability to detect nuanced visuals. As a result, we have achieved much better results compared to the previous version of the model.

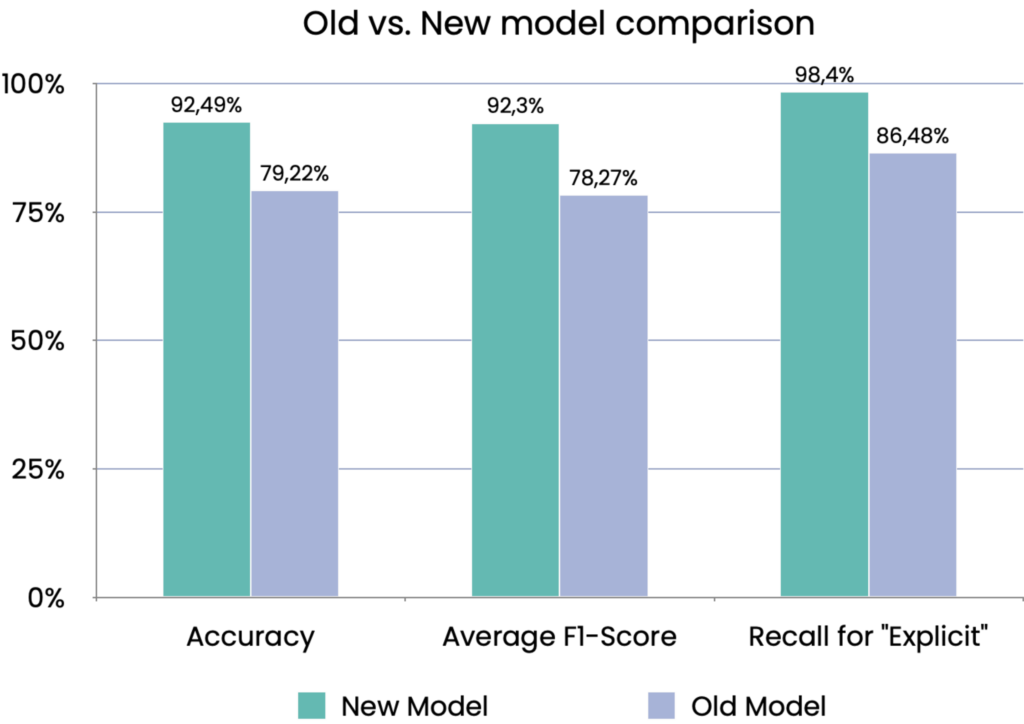

Here’s a performance comparison chart showing the progress of Imagga’s new Adult Content Detection Model compared to the previous version.

The chart highlights substantial improvements across key metrics.

The new version of our Adult Content Detection Model shows a substantial improvement in accuracy and overall performance, meaning it’s now even better at correctly identifying content in each category. One of the most important improvements is in its ability to detect “explicit” content, where we see a significant 12.72% increase in recall.

What does this mean? Recall is a measure of how effectively the model captures all actual instances of explicit content. By increasing recall, the model becomes more sensitive to potentially explicit content. This means it’s more likely to catch borderline or uncertain cases, ensuring that even subtle or ambiguous explicit material gets flagged.

In practice, this makes the model much more effective at detecting a higher percentage of explicit content, reducing the risk of inappropriate material bypassing moderation. This improvement is a key step in helping platforms ensure a safer and more controlled environment for their users.
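
To make the metric concrete, here is a minimal sketch of how recall relates to precision, F1-score, and overall accuracy for a three-category classifier. The function name and the toy labels are hypothetical and chosen only for illustration; they are not taken from our benchmark or evaluation code.

```python
from collections import Counter

LABELS = ("explicit", "suggestive", "safe")


def per_class_metrics(y_true, y_pred, labels=LABELS):
    """Return overall accuracy and per-class precision, recall, and F1."""
    # Count how often each (true label, predicted label) pair occurs.
    pairs = Counter(zip(y_true, y_pred))
    metrics = {}
    for label in labels:
        tp = pairs[(label, label)]
        # False negatives: images of this class predicted as something else.
        fn = sum(n for (t, p), n in pairs.items() if t == label and p != label)
        # False positives: images of other classes predicted as this class.
        fp = sum(n for (t, p), n in pairs.items() if t != label and p == label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    correct = sum(n for (t, p), n in pairs.items() if t == p)
    return correct / len(y_true), metrics


# Toy data (hypothetical): one explicit image is missed and one
# suggestive image is over-flagged as explicit.
y_true = ["explicit", "explicit", "explicit", "explicit",
          "suggestive", "suggestive", "safe", "safe"]
y_pred = ["explicit", "explicit", "explicit", "suggestive",
          "explicit", "suggestive", "safe", "safe"]

accuracy, metrics = per_class_metrics(y_true, y_pred)
print(f"accuracy: {accuracy:.2f}")
print(f"explicit recall: {metrics['explicit']['recall']:.2f}")
```

In this toy example, three of the four explicit images are caught, so explicit recall is 0.75. Raising recall means shrinking the number of explicit images that slip through as "suggestive" or "safe".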

Benchmarking Against Competitor Models

Test Data and Methodology

To ensure a fair and unbiased comparison, we tested our model against leading competitors – Amazon Rekognition (AWS), Google Vision, and Clarifai, using a carefully curated benchmark dataset.

The images in the test dataset have been collected from a variety of sources. We took special care to exclude samples from the same sources as the model’s training data, so that the results are not biased toward it. For the current release, no cartoons or drawings have been included. The model does, however, respond well to synthetic images that sufficiently resemble real ones.

The benchmark dataset includes a wide range of nuanced and complex scenarios, beyond straightforward cases. The vast majority of images require the model to understand subtle distinctions in context, such as close interactions between people or potentially ambiguous body language and actions. These nuanced situations often blur the boundaries between suggestive and explicit content or between suggestive and safe content, providing a challenging test for content moderation.

To evaluate the models’ performance comprehensively, we tested how they perform on images taken from different perspectives, with varying quality, and in hard-to-detect cases. The test dataset also includes hard-to-recognize images and deliberately includes people of different races, ages, and genders, to minimize bias and check that the model performs accurately and fairly across diverse demographic groups.

The benchmark dataset is available upon request. To receive it, please contact us at sales [at] imagga [dot] com.

Dataset Composition

The dataset contains 2904 images, of which:

- 1104 with explicit content

- 952 with suggestive content

- 765 with safe content

Category Definitions

- Explicit: Sexual activities, exposed genitalia, exposed female nipples, exposed buttocks or anus

- Suggestive: Exposed male nipples, partially exposed female breasts or buttocks, bare back, significant portions of legs exposed, swimwear, or underwear

- Safe: Content not falling into the above categories

Competitor Models Categorization

Google Vision

- Returns the likelihood of explicit content as one of six likelihood categories ranging from “very unlikely” to “very likely”

- For this comparison, any image rated “possible” or higher was considered explicit

- Does not have a “suggestive” category
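
As a rough sketch of the thresholding rule described above, the snippet below maps a Google Vision likelihood value to a benchmark label. The likelihood names follow Google Vision's `Likelihood` enum; the helper name `to_benchmark_label` is hypothetical, and mapping everything below the threshold to "safe" is our assumption for illustration, since Google Vision has no "suggestive" output.

```python
# Likelihood values in increasing order of confidence,
# as named in Google Vision's Likelihood enum.
LIKELIHOOD_ORDER = [
    "UNKNOWN",
    "VERY_UNLIKELY",
    "UNLIKELY",
    "POSSIBLE",
    "LIKELY",
    "VERY_LIKELY",
]


def to_benchmark_label(likelihood, threshold="POSSIBLE"):
    """Map a likelihood name to 'explicit' or 'safe' (hypothetical helper).

    Anything rated at or above the threshold counts as explicit.
    Treating everything else as 'safe' is an assumption of this sketch.
    """
    rank = LIKELIHOOD_ORDER.index(likelihood)
    if rank >= LIKELIHOOD_ORDER.index(threshold):
        return "explicit"
    return "safe"


for name in LIKELIHOOD_ORDER:
    print(name, "->", to_benchmark_label(name))
```

A stricter comparison could pass `threshold="LIKELY"` instead, which would trade some recall for precision.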

Amazon Rekognition (AWS)

- Returns detailed subcategories, which we aggregated to align with our definitions of “explicit” and “suggestive”

Clarifai

- Offers adult content detection that classifies images into the same three categories as Imagga’s API: “safe”, “suggestive”, and “explicit”

Performance Comparison

Imagga’s Model

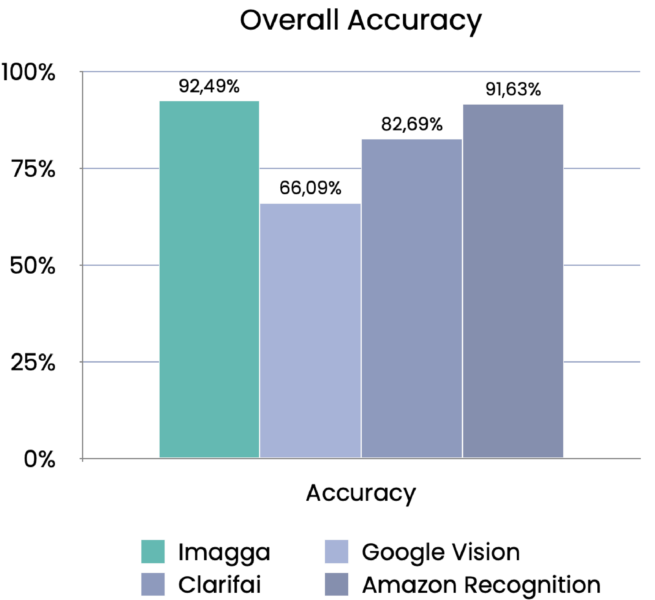

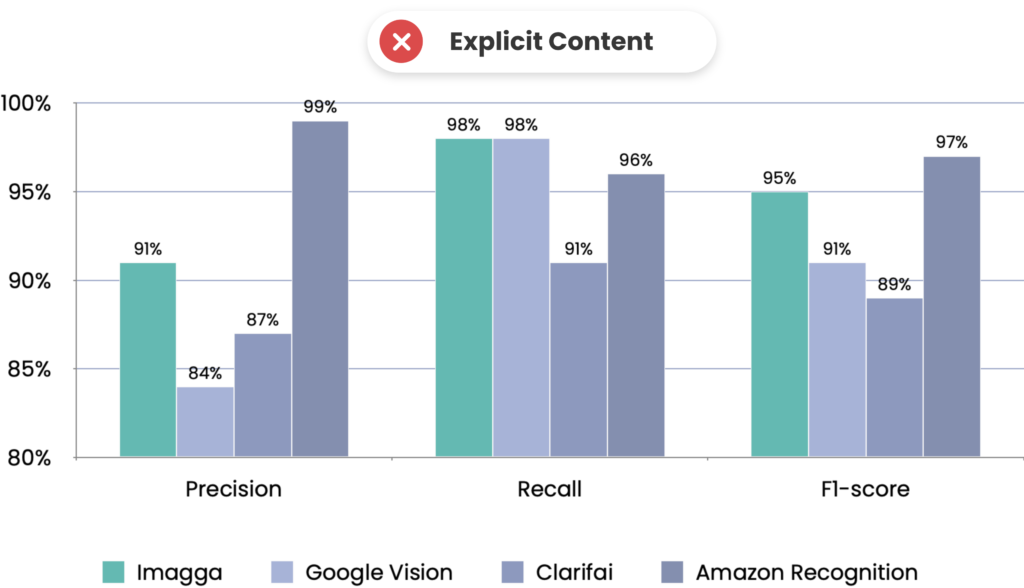

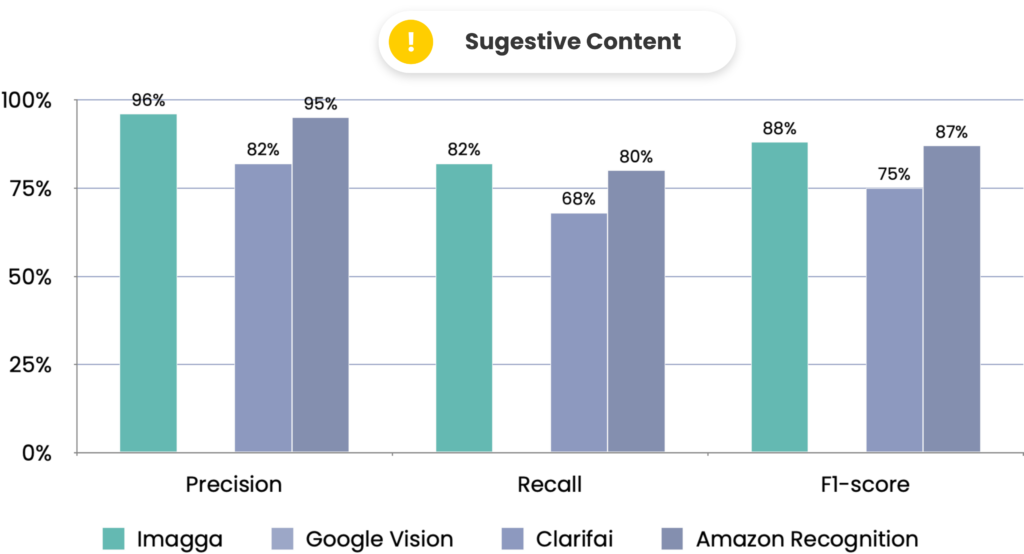

As the figures above show, Imagga’s latest adult content detection model achieved the highest overall accuracy at 92.45%. It tied with Google Vision for the highest recall in the “explicit” category, meaning that most explicit content is correctly identified. It also achieved the highest F1-scores across all categories, indicating a good balance between precision and recall, and it outperforms competitors in the “suggestive” and “safe” categories.

Amazon Rekognition

Amazon Rekognition shows slightly higher precision in the “explicit” category, meaning fewer false positives. However, it has lower recall than Imagga, so it potentially misses more explicit content, and it is less effective in the “suggestive” and “safe” categories.

Clarifai

Clarifai shows moderate performance across categories but lags behind Imagga and AWS. Its lower accuracy and F1-scores indicate room for improvement.

Google Vision

While Google Vision demonstrates high recall for “explicit” content, it does not offer a “suggestive” category aligned with our definitions. Consequently, it lacks the ability to distinguish between “explicit” and “suggestive” content. This limitation means that suggestive content is often misclassified as “safe,” resulting in an inability to filter or tag such content appropriately.

How Does Reliable Adult Content Detection Impact Online Platforms?

Robust and accurate adult content detection provides significant benefits to online platforms by ensuring a safer, more reliable user experience. By automatically identifying and filtering inappropriate images and videos, platforms can effectively protect users, especially minors, from harmful content. This not only helps safeguard the platform’s reputation but also fosters user trust and confidence in its commitment to safety. Additionally, with regulations around online content continuously evolving, having a detection system that meets compliance standards is critical. Relying on a provider with core expertise in this area further enhances reliability, allowing platforms to streamline moderation processes and focus on growth, knowing their content detection is both effective and expertly managed.

Upcoming Updates

- Providing a classification of the subcategories identifying the explicit action or subject

- Increasing the recall of the suggestive category for more accurate detection of gray-zone adult content

How to Get Started with Adult Content Detection?

Learn how to get started with the model with just a few lines of code.

How to Upgrade from the NSFW Model?

Upgrading to the new model is straightforward and can be done in two simple steps:

- Update the categorizer ID in your API calls: Replace “nsfw_beta” with “adult_content”.

- Adjust label handling if applicable: To better reflect the data our model is trained on and to improve moderation outcomes, we’ve updated the classification categories from “nsfw”, “underwear,” and “safe” to “explicit”, “suggestive”, and “safe”, respectively. This means that in case you are handling the labels in your code, you will need to change the label “nsfw” to “explicit” and “underwear” to “suggestive”, while the “safe” label remains unchanged.
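
The two steps above can be sketched as follows. This is a minimal illustration, not official client code: the endpoint layout in `API_BASE` and the `image_url` query parameter are assumptions that should be checked against the API documentation, and the helper names are hypothetical. Only the categorizer IDs and the label names come from this post.

```python
from urllib.parse import urlencode

# Assumed endpoint layout; verify against the API documentation.
API_BASE = "https://api.imagga.com/v2/categories"

OLD_CATEGORIZER = "nsfw_beta"
NEW_CATEGORIZER = "adult_content"

# Step 2: old labels and their replacements in the new model.
LABEL_MIGRATION = {
    "nsfw": "explicit",
    "underwear": "suggestive",
    "safe": "safe",
}


def build_request_url(image_url, categorizer_id=NEW_CATEGORIZER):
    """Step 1: build the request URL with the new categorizer ID."""
    query = urlencode({"image_url": image_url})
    return f"{API_BASE}/{categorizer_id}?{query}"


def migrate_label(old_label):
    """Translate a label from the old model; unknown labels pass through."""
    return LABEL_MIGRATION.get(old_label, old_label)


print(build_request_url("https://example.com/photo.jpg"))
print(migrate_label("nsfw"), migrate_label("underwear"), migrate_label("safe"))
```

Keeping the mapping in one dictionary lets existing code that still compares against the old label names be migrated gradually, by translating old names at the boundary instead of editing every comparison at once.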

Important: The NSFW Beta model will remain active in the API for the next three months before it is deprecated.

Ready for a Reliable Content Moderation Solution?

If you’re seeking a robust, advanced solution for content moderation, Imagga’s enhanced Adult Content Detection Model is here to meet your needs. Our technology is designed with precision and adaptability in mind, and we’d love to learn more about your specific challenges.

Tell us which types of content are most difficult to manage or where you see existing detection models falling short.