Managing a content-heavy website in WordPress comes with its risks – especially when multiple editors or contributors upload visual media.

Even unintentionally, inappropriate or explicit content can make its way into published posts, harming your brand and violating community guidelines.

The Imagga Content Moderation Plugin for WordPress demonstrates an automated way to detect and block explicit content with image recognition at the point of upload, before it is ever published.

Use Case: Content Moderation for WordPress Editors & Admins

This plugin is built for WordPress websites with multi-author workflows – such as news portals, community blogs, educational platforms, and corporate sites.

Whenever an editor or administrator uploads images as part of a post or article, the plugin checks those files in real time.

If the plugin detects nudity or explicit content in an image, the upload is automatically blocked, and the user receives a clear error message.

This helps you enforce content standards without adding manual review steps.

It also adds a layer of protection against bad actors.

Unfortunately, WordPress sites are sometimes compromised, and hackers may attempt to upload pornographic or inappropriate content as part of their attack. This can lead to:

- Hidden adult content embedded in fake posts

- Links to shady websites

- Damage to your brand and SEO rankings

By scanning every uploaded image, including those from administrators, the plugin can intercept suspicious or explicit uploads immediately. That gives you another tool to prevent reputational harm, even in edge cases like security breaches.

How It Works

The plugin integrates directly into the WordPress media upload flow using a system hook. Here’s what happens behind the scenes:

- An editor or admin uploads an image via the WordPress post editor.

- Before the image is saved, the plugin intercepts the upload and sends the image to Imagga’s adult content detection API.

- If the image is flagged as inappropriate:

  - The upload is blocked.

  - A custom error message is shown (e.g., “Upload blocked: Image contains inappropriate content.”)

- If the image is clean:

  - The upload proceeds as normal.

Everything happens in real time, without slowing down the editing experience.
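
The decision step in this flow can be sketched in a few lines. The example below is a minimal illustration in Python, not the plugin's actual code (a WordPress plugin would be written in PHP). The names `moderate_upload`, `UploadResult`, and `fake_classifier` are hypothetical, the 0-100 confidence scale and the default threshold of 80 are assumptions, and the classifier is a stand-in for the call to the moderation API.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical type: takes raw image bytes and returns a 0-100 confidence
# that the image is explicit. The real plugin would get this from the API.
Classifier = Callable[[bytes], float]

BLOCK_MESSAGE = "Upload blocked: Image contains inappropriate content."


@dataclass
class UploadResult:
    accepted: bool
    error: Optional[str] = None


def moderate_upload(image: bytes, classify: Classifier,
                    threshold: float = 80.0) -> UploadResult:
    """Decide whether an upload may be saved, before it is stored."""
    score = classify(image)
    if score >= threshold:
        # Flagged: reject the upload and surface an error to the user.
        return UploadResult(accepted=False, error=BLOCK_MESSAGE)
    # Clean: let the upload proceed as normal.
    return UploadResult(accepted=True)


def fake_classifier(image: bytes) -> float:
    """Stand-in for illustration only; it does not inspect real image data."""
    return 97.5 if image.startswith(b"explicit") else 3.0


blocked = moderate_upload(b"explicit-bytes", fake_classifier)
allowed = moderate_upload(b"holiday-photo", fake_classifier)
print(blocked)
print(allowed)
```

Passing the classifier in as a parameter keeps the blocking rule separate from the network call, so the rule can be exercised without API credentials.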

How to Install and Activate the Plugin

Since the plugin is distributed outside the official WordPress plugin marketplace, you’ll need to install it manually.

Manual Installation Steps

- Download the plugin ZIP file from our website.

  👉 Download Plugin

- In your WordPress admin panel, go to: Plugins → Add New → Upload Plugin

- Upload the ZIP file and click “Install Now.”

- Once installed, click “Activate Plugin.”

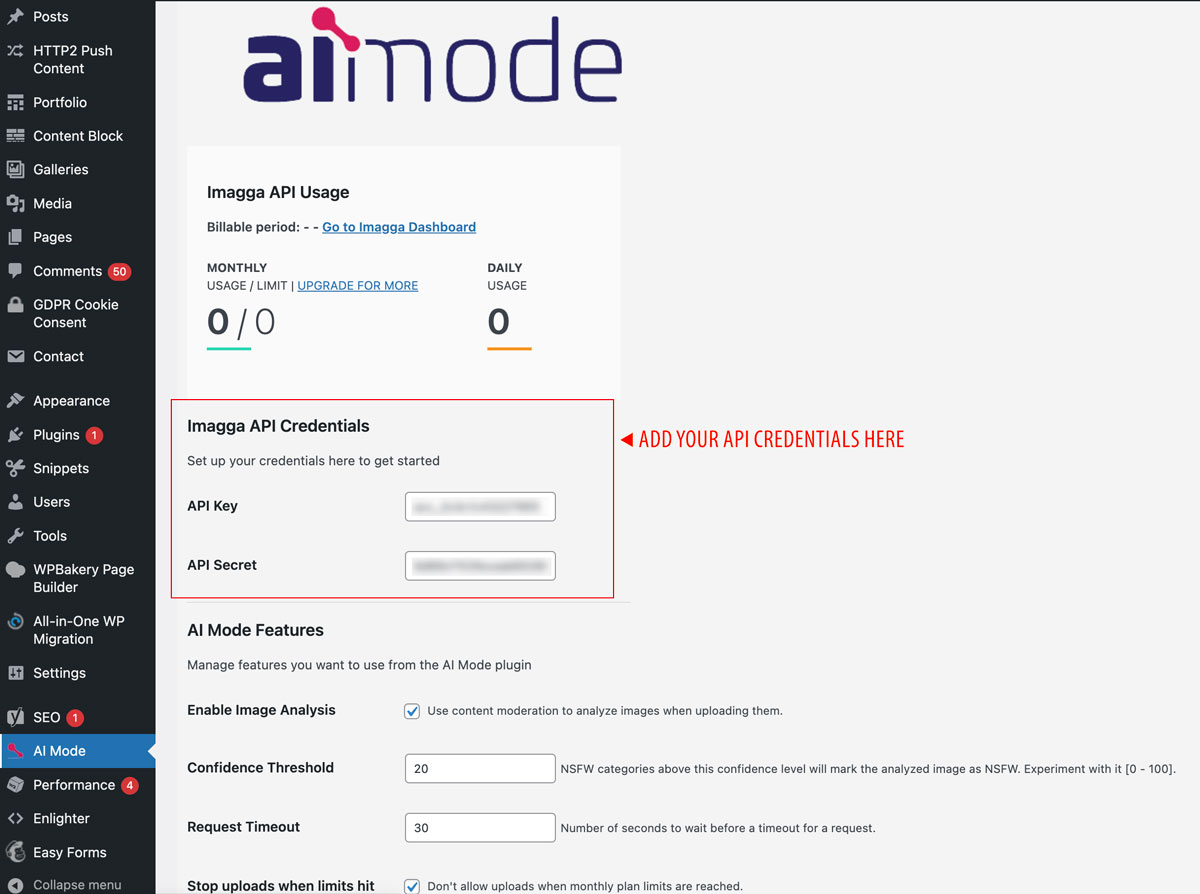

- Go to the plugin settings in the sidebar navigation under AI Mode and enter your API key and Secret to connect with Imagga’s content moderation API. You can get free API credentials by creating an account here. You can also adjust some recognition settings, such as the confidence threshold.

- That’s it! The plugin is now live and will monitor every new image uploaded by your content team.
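
To get a feel for what the confidence threshold setting controls, here is a small Python sketch. It assumes scores on a 0-100 scale and that an image is blocked when its score meets or exceeds the threshold. Both are assumptions about the plugin's behavior, and the file names and scores are invented for illustration.

```python
# Hypothetical confidence scores (0-100) that each upload is explicit.
scores = {
    "team-photo.jpg": 2.1,
    "beach.jpg": 41.0,
    "swimwear-ad.jpg": 68.5,
    "explicit.jpg": 98.7,
}


def blocked_at(threshold: float, scores: dict[str, float]) -> list[str]:
    """Return the uploads that would be blocked at the given threshold."""
    return sorted(name for name, score in scores.items() if score >= threshold)


strict = blocked_at(40.0, scores)   # low threshold: blocks more, more false positives
lenient = blocked_at(90.0, scores)  # high threshold: blocks only near-certain cases
print(strict)
print(lenient)
```

A lower threshold catches borderline images at the cost of rejecting some legitimate ones, while a higher threshold blocks only uploads the model is very sure about.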

Why This Matters

This plugin gives you a first line of defense against unwanted content making its way onto your platform.

With minimal setup, you get access to Imagga’s industry-leading AI moderation models, helping you:

- Protect your brand image

- Enforce publishing standards

- Stay compliant with content policies

- Prevent moderation overhead or retroactive takedowns

This publication was created with the financial support of the European Union – NextGenerationEU. All responsibility for the document’s content rests with Imagga Technologies OOD. Under no circumstances can it be assumed that this document reflects the official opinion of the European Union and the Bulgarian Ministry of Innovation and Growth.