Users increasingly share content where text is embedded inside images — memes, captioned photos, or screenshots of conversations. Unlike plain text, which can be automatically scanned and classified, text inside an image must first be detected and extracted through OCR before it can be analyzed. For years, these posts remained a blind spot for moderation systems. Many filters simply did not “see” the words if they were part of an image, allowing harmful content to slip through.

This loophole gave malicious actors an easy way to bypass moderation: post prohibited messages as images instead of text. A screenshot of a hateful comment, a meme spreading misinformation, or a spammy promotional banner could all evade detection. The stakes are high. The harmful categories found in regular text, such as hate speech, sexual or violent material, harassment, misinformation, spam, and personal data, also appear in image-based text, where they are harder to catch. Effective text-in-image moderation is now essential for both user safety and platform integrity.

Scenarios Where Text-in-Image Moderation Makes a Difference

The risks of ignoring text in images are not abstract. They play out daily across social platforms, youth communities, and marketplaces.

Harassment and Bullying often take a visual form. A user may overlay derogatory words on someone’s photo, turning a simple picture into a targeted attack. If the system sees only the image of a person, it misses the insult written across it. This is particularly critical for platforms popular with young users, where cyberbullying thrives in memes.

Self-Harm and Suicide Content can also appear in images. A user might share a photo containing a handwritten note or overlay text such as “I can’t go on.” These posts signal that someone may need urgent help and, left unchecked, could even influence others.

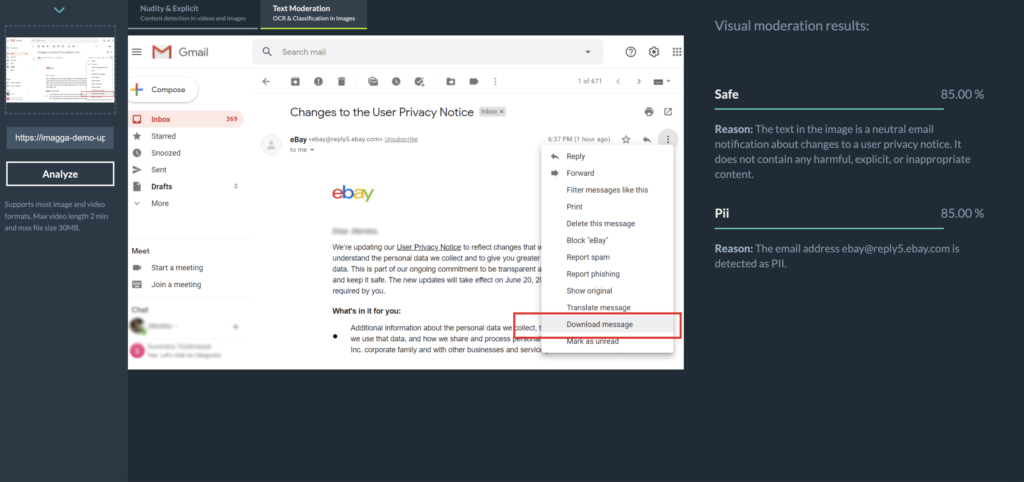

Spam, Scams, and Fraudulent Ads are another area where image-based text is exploited. Spammers embed phone numbers, emails, or URLs in graphics to avoid text-based filters. From promises of quick money to cryptocurrency scams, these messages often appear as flashy images. Comment sections on social networks have been filled with bot accounts posting fake “customer support” numbers inside images, tricking users into engaging.

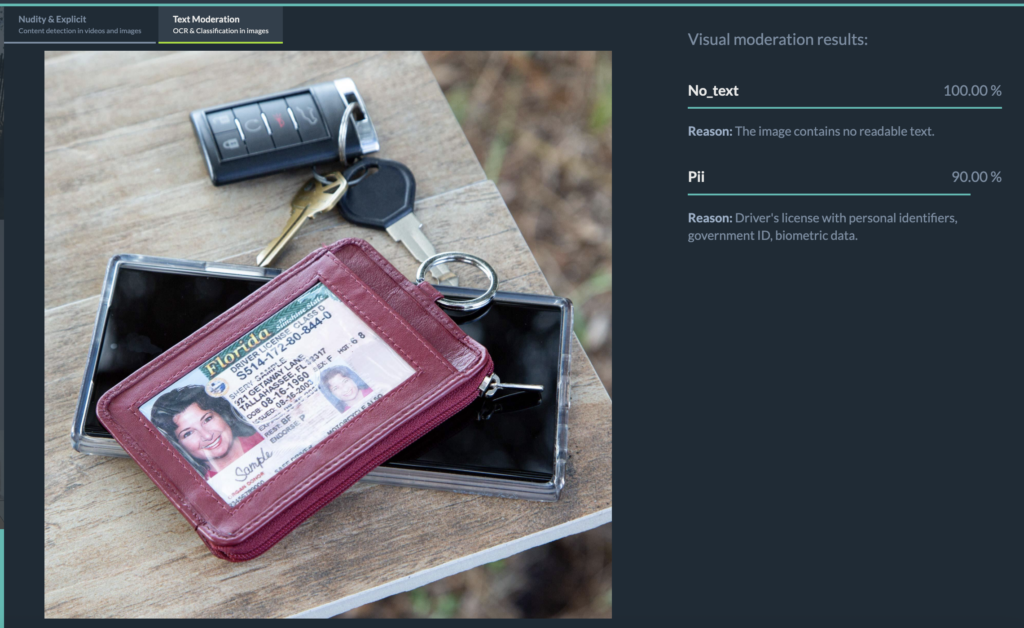

Personal Data and Privacy Leaks are a quieter but equally serious issue. A proud student might share a graduation photo with a diploma visible, exposing their full name, school, and ID number. Users may post snapshots of documents like prescriptions, ID cards, or credit cards, not realizing the risk. Even casual photos of streets can reveal house numbers or license plates. Moderation systems must now recognize and flag these cases to protect user privacy.

Imagga’s Text-in-Image Moderation: Closing the Gap

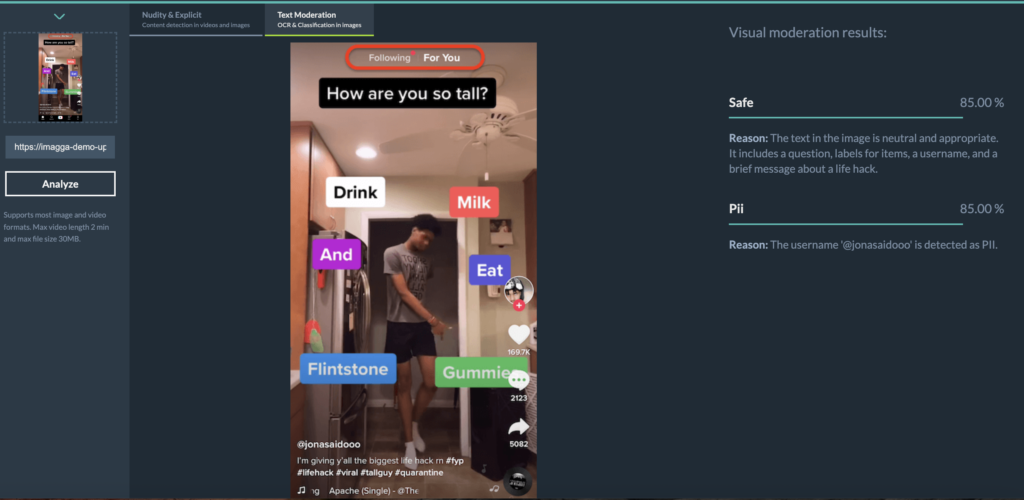

To address these challenges, Imagga has introduced Text-in-Image Moderation. It ensures that harmful, unsafe, or sensitive text embedded in visuals is no longer overlooked.

Building on our state-of-the-art adult content detection for images and short-form video, this new capability completes the visual content safety picture. The system combines OCR (optical character recognition) with a fine-tuned Visual Large Language Model, and it can be adapted to each client’s needs by excluding some categories or adding new ones.
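The announcement does not say exactly how the two components are wired together. One plausible arrangement, sketched here under that assumption, runs OCR first and then passes both the image and the extracted text to a classifier, so the model can read the words in their visual context. The `OcrFn` and `ClassifyFn` signatures, `moderate_image`, and the toy stand-ins are hypothetical placeholders, not Imagga’s API.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stage signatures standing in for a real OCR engine and a
# vision-language classifier; neither is an actual Imagga interface.
OcrFn = Callable[[bytes], str]                 # image bytes -> extracted text
ClassifyFn = Callable[[bytes, str], set[str]]  # (image, text) -> category labels


@dataclass
class ModerationResult:
    text: str
    labels: set[str] = field(default_factory=set)


def moderate_image(image: bytes, ocr: OcrFn, classify: ClassifyFn) -> ModerationResult:
    """Extract text first, then classify it together with the image for context."""
    text = ocr(image).strip()
    if not text:
        # No legible text: this text pathway has nothing to flag. Visual checks
        # (e.g. adult content detection) would still run separately.
        return ModerationResult(text="", labels={"safe"})
    return ModerationResult(text=text, labels=classify(image, text))


# Toy stand-ins for the two stages, for illustration only.
def fake_ocr(image: bytes) -> str:
    return "  Call 555-0100 for support  "


def fake_classifier(image: bytes, text: str) -> set[str]:
    return {"spam"} if "555" in text else {"safe"}


result = moderate_image(b"<image bytes>", fake_ocr, fake_classifier)
print(result.text, result.labels)
```

Passing the image alongside the text is the design choice that matters: the same words can be harmless or abusive depending on the picture they are written over.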

The system is designed to:

- Extract text from images at scale

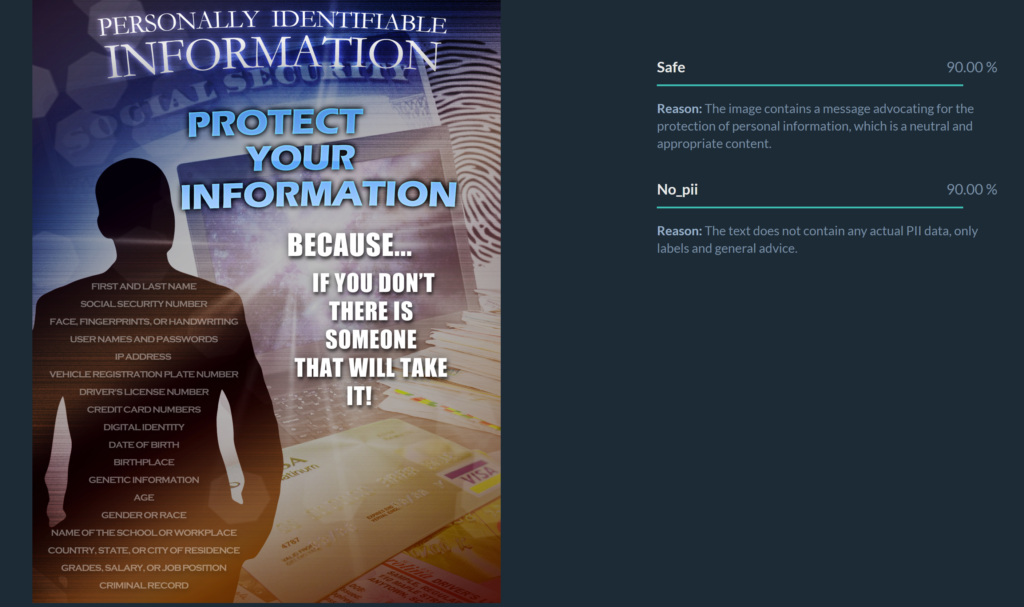

- Understand context, nuance, and metaphors

- Operate across multiple languages and writing systems

- Classify both harmful categories and personal information (PII)

- Recognize text that condemns hate, and catch harmful messages expressed through metaphor

Content Categories Covered

The model organizes extracted text into clear categories so that platforms can respond consistently. These include:

- safe content

- drug references

- sexual material

- hate speech

- conflictual language not directed at protected groups

- profanity

- self-harm

- spam

Furthermore, the categories can be customized to each client’s needs. By covering this full spectrum, the system is designed to catch even subtle risks, such as casual profanity or coded hate speech.
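Clients can drop default categories or add their own. A minimal sketch of how such a per-client policy could be expressed, assuming hypothetical snake_case label names that mirror the list above (not Imagga’s actual identifiers) and a hypothetical custom `gambling` category:

```python
from dataclasses import dataclass

# Hypothetical label names mirroring the categories listed above;
# these are NOT Imagga's actual API identifiers.
DEFAULT_CATEGORIES: frozenset[str] = frozenset({
    "safe", "drugs", "sexual", "hate_speech",
    "conflictual", "profanity", "self_harm", "spam",
})


@dataclass(frozen=True)
class CategoryPolicy:
    """Per-client policy: drop default categories or add custom ones."""
    exclude: frozenset[str] = frozenset()
    include: frozenset[str] = frozenset()

    def active(self) -> frozenset[str]:
        # Start from the defaults, remove exclusions, then add custom categories.
        return (DEFAULT_CATEGORIES - self.exclude) | self.include

    def apply(self, labels: set[str]) -> set[str]:
        # Keep only labels this client moderates; if none remain,
        # the item is treated as safe under this policy.
        kept = labels & self.active()
        return kept or {"safe"}


# A client that tolerates profanity but wants a custom "gambling" label.
policy = CategoryPolicy(exclude=frozenset({"profanity"}),
                        include=frozenset({"gambling"}))
print(policy.apply({"profanity"}))                # {'safe'}
print(sorted(policy.apply({"gambling", "spam"})))  # ['gambling', 'spam']
```

Keeping the policy separate from the model means the classifier can stay the same while each platform decides which labels it acts on.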

PII Detection: Protecting Sensitive Information

Alongside harmful content detection, Imagga’s Text-in-Image Moderation also protects users against accidental or intentional sharing of personal data. The model can identify a wide range of personally identifiable information:

- names, usernames, and signatures

- contact details like phone numbers, emails, or addresses

- government IDs such as passports or driver’s licenses

- financial details including credit cards, invoices, or QR codes

- login credentials, tokens, and API keys

- health and biometric data

- employment and education records

- digital identifiers like IP addresses or device IDs

- company-sensitive data such as VAT numbers or client details
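For contrast with a learned model, here is a minimal rule-based baseline for a small slice of this list: regular expressions for email and IPv4 addresses, plus a Luhn checksum that discards card-like digit runs that cannot be valid card numbers. The patterns, labels, and function names are illustrative assumptions, not Imagga’s implementation, and they cover only three of the categories above.

```python
import re

# Illustrative patterns only (assumptions, not Imagga's rules).
PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "ipv4": re.compile(
        r"\b(?:(?:25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|1?\d?\d)\b"
    ),
    # 13 to 19 digits, optionally separated by spaces or dashes.
    "card_candidate": re.compile(r"\b(?:\d[ -]?){13,19}\b"),
}


def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, check mod 10."""
    digits = [int(c) for c in number if c.isdigit()]
    if not 13 <= len(digits) <= 19:
        return False
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_pii(text: str) -> dict[str, list[str]]:
    """Return matched PII snippets grouped by a hypothetical label."""
    hits: dict[str, list[str]] = {}
    for kind, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            value = match.group().strip(" -")
            label = kind
            if kind == "card_candidate":
                # Keep only digit runs that pass the Luhn checksum.
                if not luhn_valid(value):
                    continue
                label = "credit_card"
            hits.setdefault(label, []).append(value)
    return hits


# 4111 1111 1111 1111 is a standard test card number.
sample = "Mail jane.doe@example.com, server 192.168.0.1, card 4111 1111 1111 1111 here"
print(find_pii(sample))
```

A baseline like this misses names, signatures, handwriting, and anything whose sensitivity depends on context, such as a diploma in a graduation photo; that gap is what a model that reads the whole image is meant to close.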

For example, an image of a girl holding her diploma would be flagged under education-related PII, allowing platforms to take action before sensitive details are exposed publicly. This capability helps ensure compliance with privacy regulations and reinforces user trust.

Additional Advantages of Imagga’s Text-in-Image Moderation

Beyond broad content categories and PII detection, the model is designed to handle the subtleties that often determine whether moderation succeeds or fails.

It reliably identifies small details hidden in images, such as an email address written on a piece of paper or typed faintly in the corner of a screenshot. These elements are easy for the human eye to miss, but they can expose users to spam, scams, or privacy risks if left unchecked.

The system also demonstrates exceptional OCR performance on noisy or low-quality inputs, such as screenshots of chat conversations. Whether text appears in unusual fonts, over busy backgrounds, or in heavily compressed images, the model is trained to extract and interpret it with a high degree of accuracy.

Finally, the moderation pipeline accounts for nuance when labeling. Detecting a sensitive word does not automatically trigger a harmful classification: for instance, the term “drugs” in a sentence that condemns drug use will not result in a false flag. This context-aware approach prevents overblocking, so platforms can enforce safety standards while maintaining their users’ trust.

Completing the Imagga Moderation Suite

Text-in-Image Moderation is not a standalone feature but part of a broader safety solution. It integrates seamlessly with Imagga’s existing tools for adult content detection in images and short-form video, violence and unsafe content classification, and brand safety filtering. Together, these capabilities create a comprehensive, end-to-end moderation pipeline designed for today’s user-driven, content-rich platforms.

Conclusion

Text embedded in images is a risk that platforms can no longer afford to ignore. Whether it appears as a meme, a screenshot, a scam, or a personal document, this content carries real risks for users and businesses alike. Imagga’s Text-in-Image Moderation closes this gap with advanced detection and nuanced understanding, complementing the company’s broader suite of content safety solutions.

Platforms that want to provide safer, more responsible experiences now have the tools to ensure no harmful message goes unseen.

See Text-in-Image Moderation in action in our demo.

Get in touch to discuss your content moderation needs.

This publication was created with the financial support of the European Union – NextGenerationEU. All responsibility for the document’s content rests with Imagga Technologies OOD. Under no circumstances can it be assumed that this document reflects the official opinion of the European Union and the Bulgarian Ministry of Innovation and Growth.