Product Recommendations for E-Commerce with Image Recognition

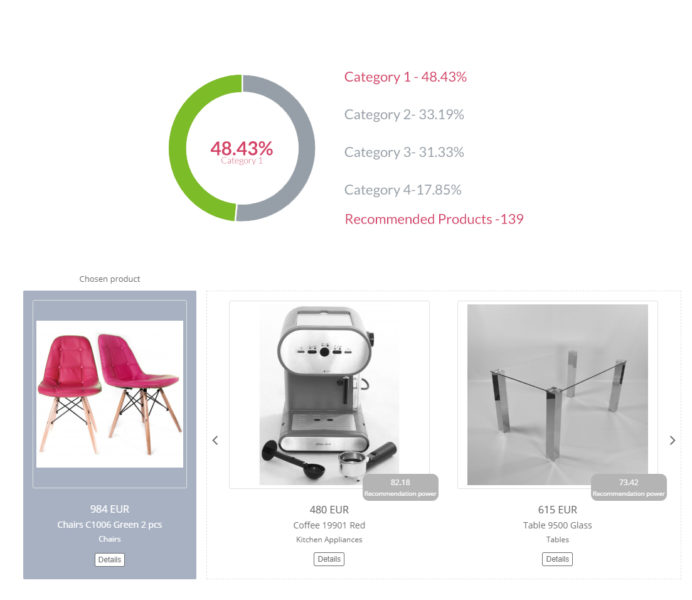

According to Forrester market analysis, 10% to 30% of all online sales come from cross-selling or upselling. Amazon (with 560 million SKUs) has been consistently reporting 35%. Once an e-commerce business passes a certain threshold, the effort of catalog management and maintenance increases exponentially. The most common problems include updating existing SKUs, managing multiple sales channels, and working with incomplete data from suppliers. While a company’s focus is on sales, cross-selling and upselling need to be a functionality that takes minimal effort and continuously improves. Machine learning, and image recognition in particular, provides this capability for any catalog. Furthermore, it decreases the heavy cost associated with building an internal recommendation engine based on keywords, abandoned carts, and cookies.

Building Product Recommendation Using Image Recognition

How do you approach building a product recommendation engine? First of all you’ll need a lot of data. There are a bunch of tutorials and walkthroughs which will certainly help you understand how AI is being built but to create one yourself, you would need a skillset from diverse backgrounds:

- Mathematical: applied statistics knowledge, calculus, deep understanding of algebra and algorithms especially unsupervised learning cases

- Computer Science: data structure for deep learning, programming neural networks, and machine learning algorithms building

- Science: a deep understanding of several programming languages, cognitive learning theory, and natural language processing

You are probably not going to spend years learning the science and developing these skills, but you can learn from the organizations that already use a ready-made solution. Both Fortune 500 companies and government agencies purchase these functionalities as a service and let the experts research and apply their expertise.

Every AI vendor does it differently, mostly through an API. You will notice that the generic tagging of some vendors performs better in some use cases than in others, and the accuracy can vary significantly. This is because, for the functionality to work well, the model needs to be trained specifically for those types of objects. For example, if you are trying to build an image recognition solution that recognizes all the different iPhone cases available on the market in terms of brands, sizes, materials, etc., then you need to show the neural net all possible options and teach it, much like a baby who learns by seeing.

If AI companies could train their algorithms on any dataset, they would be breaching a whole range of privacy laws regarding your personal or company data, especially the GDPR restrictions in the EU, which are having an impact on US data as well. Hence the question: “Why do I need an AI company when I can learn to build it myself and I hold all the datasets? They will only build a neural network and algorithms.” This is a chicken-and-egg problem which we will not explore, but you can read more here.

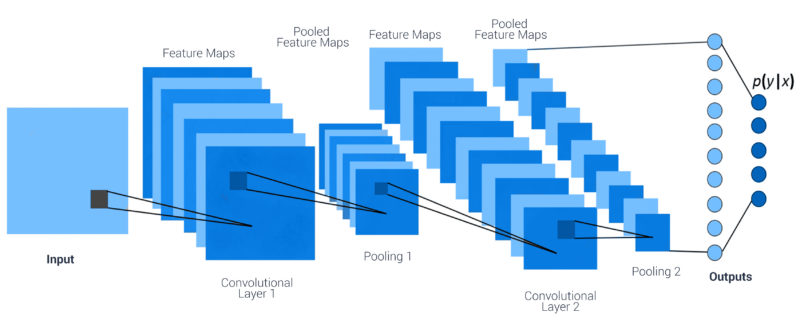

FIGURE: Training a Model

Once you are done building your data sets and are ready for training, you need experts to make sure that the training of the neural net is working properly.

Now that we know that building image recognition software is no easy task, how do we make it work for an e-commerce store with 100,000+ SKUs?

Use Cases for E-Commerce

Customer-centric suggestions and searches

Users often leave an online store because they don’t find what they are looking for. For example, if I am looking for a non-stick frying pan and a Facebook ad promises a non-stick frying pan for $20, then on the landing page I want to see “non-stick frying pan” in big words. This is pretty intuitive.

How can you achieve the same logical mind map for an e-commerce search engine where there are dozens of characteristics of a product?

Search results can be significantly improved through contextualization: using AI software to organize and tag keywords that are particular to the product. The consistency of this tagging is only as good as the training of the neural network, and this simple mechanical task can be performed while an image is being uploaded. Users can make mistakes when tagging their product images on upload, or try to qualify for keywords that are not relevant; a machine does neither. As a result, users receive product keywords that are much more suitable and have a higher probability of making a sale.

The goal of the algorithms is to make the search more intuitive for the user based on history and rules set by the programmers. For example, Pinterest uses image recognition to identify items in an image a user uploads so that it can make product and tag suggestions. This approach can further be extended for e-commerce and remarketing purposes.
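To make the idea of tag-driven suggestions concrete, here is a minimal sketch that ranks products by how many tags they share. Everything in it is an assumption for illustration: the catalog, the SKUs and the tags are made up, and Jaccard overlap is just one simple similarity measure, not the method used by Pinterest or by any particular vendor.

```python
def jaccard(a, b):
    """Share of tags two products have in common (0.0 to 1.0)."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_products(sku, catalog, top_n=2):
    """Rank the other products in `catalog` by tag overlap with `sku`."""
    query_tags = catalog[sku]
    scored = [
        (jaccard(query_tags, tags), other)
        for other, tags in catalog.items()
        if other != sku
    ]
    # Highest overlap first; ties are broken alphabetically by SKU.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [other for score, other in scored[:top_n] if score > 0]


# Hypothetical catalog: SKU -> tags an image tagger might return on upload.
catalog = {
    "pan-001": {"frying pan", "non-stick", "kitchen", "black"},
    "pan-002": {"frying pan", "cast iron", "kitchen", "black"},
    "pot-001": {"pot", "stainless steel", "kitchen"},
    "case-001": {"phone case", "leather", "black"},
}
print(similar_products("pan-001", catalog))
```

In a real store the tags would come from the tagging service at upload time, and the ranking would likely also weigh tag confidence and sales history.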

Social Media Commercialization

Have you noticed that Facebook has become incredibly good at suggesting products that you like on one of its platforms? How does this happen? Once you click on an item, this click automatically falls under a category. Once an item is added to this category through image recognition, new items can easily be suggested just by uploading an image on your Facebook wall. This is because the image is tagged upon upload and immediately gets associations, and with every next upload the AI becomes incrementally better at understanding your preferences.

Conclusion

Artificial intelligence can provide businesses with opportunities to make a more personalized experience and recommendations but those are only as good as the services they are using and the data they receive. Today AI can help achieve every e-commerce dream and place personalized items based on consumer behavior instantly, which gives the organization power but can also scare and sometimes overwhelm the user.

Start Learning AI Agents Used in Games. Teach AI to Play Pong

Knowledge published on the internet is free but in the ocean of opinions and points of view, there is no clear chronological pathway on how to learn about the history of AI in Games.

Here we will introduce some basic technical instructions required to begin training artificially intelligent agents with games before discussing how this could be used in potential applications. Games and gameplay are an integral part of the history and development of Artificial Intelligence (AI) and can provide mathematically programmable environments that can be used to develop AI programs. As part of this article, we will provide a tutorial on how you can begin experimenting with training your own artificially intelligent system on your computer with some open source arcade games.

Open AI GYM

The long history of gameplay between humans and artificially intelligent computers demonstrates that games provide a reliable framework for computer scientists to test computational intelligence against human players. But games also provide an additional environment for machine learning to develop intelligence by repeatedly playing and interacting with a game by itself. In 2015 an organization was formed by Elon Musk and Sam Altman to develop machine learning algorithms through the use of games. Their organization, OpenAI, has launched a platform to develop machine learning algorithms with arcade games. This is done to simulate environments for AI models in order to improve and develop ways in which AI systems can interact ‘in the real world’. These systems make use of image recognition technology so that the AI agent can interpret the pixels on the screen and interact with the game. Rather than identifying objects like Imagga’s image recognition API, the OpenAI Gym modules use computer vision libraries (such as OpenCV) to interpret key elements such as the user's score or incoming pixels. In 2015 a series of videos was published showing how neural networks could be used to train an AI agent to successfully play a number of classic arcade games. One of them featured an AI agent that can learn to complete Mario in under 24 hours, which has led to hundreds of similar attempts to train AI on Super Mario Bros, many of which are continuously live streamed and you can watch here.

The above picture displays a breakdown and explainer from creator Seth Bling on how Mario is learning to play the arcade game through a combination of computer vision, neural networks, and machine learning. The box on the left indicates the computer vision interpretation of the pixels on the arcade game, while the red and green lines indicate the weighting of neural networks assigned to different inputs from the controller (A, B, X, Y, Z etc). The bar at the top indicates the status of Mario and the AI agent playing: fitness refers to how far the agent has advanced through the game, while gen, species and genome all indicate the progress of the neural networks calibrating to make the best possible Mario player. People are building upon the original code written by Seth Bling and competing to see who can engineer an AI agent to complete Mario in the quickest time.

What is the benefit of an AI expert that can complete Super Mario in less than 24 hours?

The use of games to train AI agents is most obvious in developing software for self-driving cars; a small team even set out to turn GTA into a machine learning system after removing all the violence from the game. Games offer a framework for an AI agent to initiate and direct behavior. Through gameplay, AI agents can develop strategies for interacting with different environments, which can then be applied to real-world situations. But before we get too far ahead, let's look at building one simple AI agent to learn the classic arcade game Pong. In this tutorial, we are going to take a look at how to get started running your own machine learning algorithm on Pong!

For this tutorial, we are going to be working with the OpenAI module 'Gym', an open source tool that provides a library to run your own reinforcement learning algorithms. It has good online documentation and the biggest repository of classic arcade games, so if Pong is not your game and you are more of a Space Invaders or Pacman fan, getting that set up once you have Gym installed is just as easy.

Pong Tutorial

For this tutorial, you will need to be able to use the command line interface (terminal) to install packages and to execute small Python scripts.

To get started, you’ll need to have Python 3.5+ installed. If you have a package manager such as Homebrew (brew.sh), you can install Python 3 with the command:

brew install python3

Simply install gym using pip:

pip install gym

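Once gym is installed, we will install all the environments so that we can load them into our Python scripts later on.

Clone the gym repository, cd into the gym directory, and install the full set of environments:

git clone https://github.com/openai/gym.git

cd gym

pip install -e '.[all]'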

To run a simple test you can run a bare-bones instance copied from the GYM website. Save the code below and name it something with the extension .py

    import gym

    # Create the CartPole demo environment.
    env = gym.make('CartPole-v0')
    env.reset()

    for _ in range(1000):
        env.render()
        env.step(env.action_space.sample())  # take a random action

For structure place the file in your GYM directory and from the terminal enter:

python yourfile.py

Now that we have a demo up and running, it’s time to move on to some classic arcade games. The actual script is quite similar; just make sure to change the ROM that we are loading when we call

gym.make('arcade_game')

In a new text document place the following:

    import gym

    # Load Pong and draw a single frame; no actions are taken yet.
    env = gym.make('Pong-v0')
    env.reset()
    env.render()

This will generate an instance of Pong in a viewing window. But wait… nothing is happening? That is because we have only rendered an instance of the game without providing any instructions to the computer script on how we want the computer to interact with the game. For example, we could tell the computer to perform a random action 1000 times:

    import gym

    env = gym.make('Pong-v0')
    env.reset()

    for _ in range(1000):
        env.render()
        env.step(env.action_space.sample())  # take a random action

The script above will tell the application to take 1000 random actions, so you might see the most chaotic, fastest version of Pong you have ever witnessed. That is because our script is performing random actions without processing or storing the result of each action, consequently playing like a stupid machine rather than a learning system. To create an algorithm that learns, we need to be able to respond to the environment in different ways and program aims and objectives for our program. This is where things get a little more advanced. For now, try playing around with different games by checking out the list of environments, and begin exploring the sample code to get the computer to play the game in different ways. This is the basic framework we are going to use to build more advanced interactions with arcade games in the next tutorial.

In the next tutorial, we will look at implementing AI agents that play the games and at developing our reinforcement algorithms so that they begin to become machine learning applications. Share with us what you want to see in the comment section below.
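As a rough illustration of what "processing or storing the result from each action" means, the sketch below keeps the reward returned by every step. It assumes the classic Gym convention in which `step()` returns an `(observation, reward, done, info)` tuple. `CoinFlipEnv` is a hypothetical stand-in written for this example, not part of gym, so that the loop runs without any game ROMs.

```python
import random


class CoinFlipEnv:
    """Hypothetical stand-in for a Gym environment (not part of gym).

    It mimics the classic reset()/step() interface: the observation is a
    0 or 1, and the agent is rewarded when its action matches it.
    """

    def __init__(self, episode_length=10, seed=0):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.steps = 0
        self.target = 0

    def reset(self):
        self.steps = 0
        self.target = self.rng.randint(0, 1)
        return self.target  # the first observation

    def step(self, action):
        reward = 1.0 if action == self.target else 0.0
        self.steps += 1
        self.target = self.rng.randint(0, 1)
        done = self.steps >= self.episode_length
        return self.target, reward, done, {}


def run_episode(env, policy):
    """Play one episode and return the list of rewards collected."""
    observation = env.reset()
    rewards = []
    done = False
    while not done:
        action = policy(observation)
        observation, reward, done, _info = env.step(action)
        rewards.append(reward)  # store the result instead of discarding it
    return rewards


policy_rng = random.Random(1)
random_rewards = run_episode(CoinFlipEnv(), lambda obs: policy_rng.randint(0, 1))
informed_rewards = run_episode(CoinFlipEnv(), lambda obs: obs)
print(sum(random_rewards), sum(informed_rewards))
```

The random policy ignores the observation, while the second policy uses it and collects the full reward. A learning agent sits between the two: it starts out random and uses the stored rewards to adjust its policy.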

Machine Learning Toolkits

https://blog.openai.com/universe/

https://experiments.withgoogle.com/ai

https://teachablemachine.withgoogle.com/

https://github.com/tabacof/adversarial

How to Efficiently Detect Sex Chatbots in Kik Messenger and Build it for Other Platforms

Ever since 2017, Kik Messenger has been plagued by irrelevant messages distributed by automated bots. In particular, it has seen its public groups assailed by a large number of these bots, which flood them with spam. Jaap, a computer science and engineering master’s student annoyed by these relentless and unwelcome interruptions, decided to tackle the issue by engineering a counter bot called Rage that would identify and remove these bots.

Filtering Bots On Kik

Rage uses proprietary algorithms to identify known spambot name patterns, and it also uses Imagga’s adult content filtering API (NSFW API) to scan profile pictures. The result worked so well that friends soon wanted it. Through word of mouth alone, his bot was installed in over 2,000 groups in just three days. Now his bot is used in 3,000+ chat rooms containing over 100,000 people, and some 20,000 images are processed every month.

How To Stop Kik Bots

Major project issues - Neural networks are expensive: the cheapest option is the AWS g2.2 series, which costs $0.65/hour. For a student, it is a hefty sum to invest in GPU instances. Therefore Jaap looked into using a third-party company that would provide him with a more affordable out-of-the-box solution. While Google came up first in a search, he selected Imagga because of its already tested accuracy compared to other solutions on the market.

Putting it all together - Since Kik spambots use racy profile pictures, Rage bot’s detection algorithm works a lot better using the Imagga NSFW API than it would by applying name matching alone. When someone joins a chat, his or her name is scanned for known spambot name patterns while Imagga’s NSFW content moderation analyzes his or her profile picture. If the safety confidence level is less than 40%, the user is considered a spambot and is removed from the chat.

Since Kik profile pictures are public, Rage bot only needs to send image links directly to the Imagga Content Moderation API, which returns its result.

"confidence": 100,"name": "safe"
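The decision rule described above can be sketched in a few lines. This is an illustration under stated assumptions, not Rage's actual code: the name pattern is made up (the real patterns are proprietary), the response is assumed to be a list of entries shaped like the fragment above, and treating a name match or a low safety score as sufficient on its own is our reading of the description.

```python
import re

# Hypothetical name pattern; the bot's real patterns are not published.
SPAM_NAME_PATTERNS = [re.compile(r"^hot\w*\d{2,}$", re.IGNORECASE)]
SAFE_THRESHOLD = 40  # percent, the cut-off mentioned in the article


def safe_confidence(categories):
    """Return the confidence of the "safe" category, or 0.0 if it is absent."""
    for category in categories:
        if category.get("name") == "safe":
            return float(category.get("confidence", 0.0))
    return 0.0


def is_spambot(display_name, categories):
    """Flag a user whose name matches a pattern or whose picture looks unsafe."""
    name_hit = any(p.search(display_name) for p in SPAM_NAME_PATTERNS)
    return name_hit or safe_confidence(categories) < SAFE_THRESHOLD


# Assumed shape of the moderation result: one entry per category.
print(is_spambot("Alice", [{"confidence": 100, "name": "safe"}]))
print(is_spambot("Alice", [{"confidence": 12.5, "name": "safe"}]))
```

Returning 0.0 when no "safe" entry is present makes the check fail closed, which suits a moderation bot but would need tuning if false positives are costly.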

Up until August 2018, Jaap and his bot have stopped over 20K spambots from plaguing Kik Messenger. Detected users who score under a certain level of “confidence” are removed automatically, but the Rage bot is not stopping here. One of the most recent features is the 48 mode, which detects the number of people in a chat and removes inactive users.

Building and deploying - Imagga's NSFW classification was set up and running in a day. Using the demo plan with 2,000 free API calls a month, Jaap was able to quickly implement, test and judge whether this was the right tool for him. As a content moderation solution, it can be installed and run the same day with no downtime and deliver accurate content moderation. Then, if you need more API calls, Imagga has very affordable pricing.

New Bulgaria University: Computer Vision Technology Course

Since its foundation, Imagga has been striving to create the best possible image recognition technology. That means computer vision has been our core focus since long before it became the $20 billion industry it is in 2018.

One of our goals is to give back to the community and captivate the young minds in Bulgaria, so together with New Bulgarian University, we organized a course for the M.Sc. students which has reached a record high in student satisfaction. The course focuses on understanding core computer vision technologies, building neural networks and creating machine learning algorithms on a basic level. This allows students to get an overall understanding of the skills and technology required to build an artificial intelligence project in 2018.

During the course, Imagga CEO Georgi Kadrev covers the image processing and analysis basics that every junior computer vision programmer should comprehend. Georgi is also a professor at Sofia University and has an extensive teaching background. Because of it, he uses managed discussions for more advanced topics like machine learning, classification, and feature extraction. This allows him to build a course program where the knowledge of his students develops proportionately with each lesson.

Lectures are divided into two sections, one led by Georgi Kadrev, CEO - Imagga, and the other by Georgi Kostadinov, Core Technology Lead – Imagga. This combination of minds allows the course to give both a technological and a business perspective to anybody who wishes to excel in the AI game.

Our goal is to inspire bright young students to use our Free API and build creative projects. For us, it is important that you share your project ideas and capitalize on them. This stimulates Bulgarian entrepreneurship and encourages students to invent their own jobs rather than work on building somebody else's dream.

GTC Conference 2018 Munich - Georgi Kadrev Part of AI Panel

This year’s European edition of NVIDIA’s GTC was held October 9-12th in the beautiful town of Munich, Germany. GTC has established itself as one of the leading events covering the latest trends not just regarding GPU hardware but also the many areas where the hardware computation power enables various practical A.I. use cases. This naturally includes Imagga’s specialty: image recognition and its applications in various industries.

The hardware advancement, of course, is the practical enabler of modern A.I., so it was exciting to witness the announcement of the DGX-2 with its impressive 2 PFLOPS throughput and significant memory optimizations.

As one of the early adopters of DGX in Europe, we were invited to join a panel of industry and academic experts taking advantage of the DGX family of machines. Our CEO Georgi Kadrev had the chance to share how image-oriented and/or image-dependent businesses can solve problems by taking advantage of the automation that ML-based image recognition brings. He specifically addressed how the DGX Station has helped us to train one of the biggest CNN classifiers in the world, PlantSnap, which recognizes 300K+ plants, in just a matter of days.

Georgi was also invited to join the private DGX user group luncheon where the object of discussion was the present and the future of the DGX hardware family and the software and support ecosystems starting to shape around it.

Being an Inception alumnus ourselves, it was inspiring to also see the new generation of early-stage startups taking advantage of GPU and A.I. at the Inception Awards ceremony. Well crafted pitches and interesting vertical applications were presented during this startup oriented track of GTC. In what is already turning into a tradition, NVIDIA’s rock-star CEO Jensen Huang spent almost an hour afterward talking with entrepreneurs about the ups and downs and the visionary drive lying behind every successful technology venture.

With a lot of positive impressions and new friendships, we are looking forward to returning to Munich for the next GTC EU at the latest. In the meantime, if you haven’t had the chance to meet us there, please don’t be a stranger. We are looking forward to learning more about your business and brainstorming how image recognition can help make it even more successful.

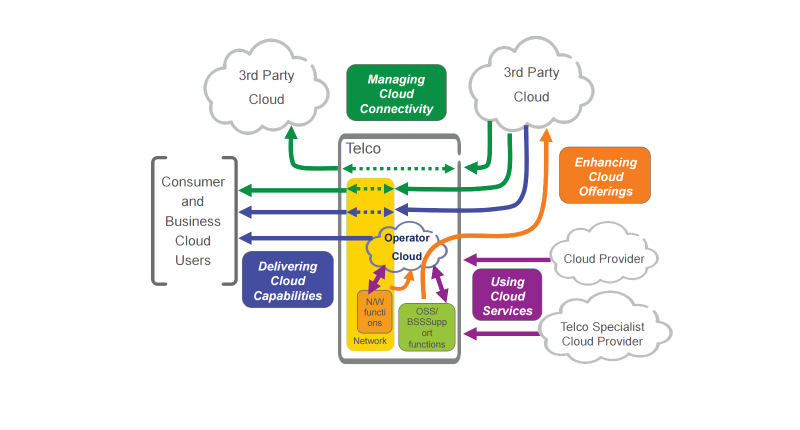

Create a Personal Cloud Storage Service In The Telecom Industry

If you are a telecom company, then you have probably noticed the need to reach users across different ecosystems in order to increase and diversify your revenue streams. Increased use of advanced wearable technologies has replaced your more traditional revenue generating channels like SMS, voice calling and upselling. Wearables are not the only thing changing your game. There are literally hundreds of predictions, some based on forecasts and historical data and others more technical, that reveal your future challenges. After carefully reading a few dozen of those, certain patterns emerge. Overall, these are the most common prospects for the telecom industry:

- Content Triumph

Connecting is getting cheaper and cheaper. Moore’s Law tells us this is an inevitable cause and effect of society. Via connectivity, you are able to capture a fraction of the information value chain, while content and services are capturing increasingly more. Many experts expect that if telecom companies neglect to adapt by 2020, content companies like Viber and Snapchat will be able to acquire telecoms.

- IoT is The New Traffic Game

Nowadays, you can extract personal data and patterns from devices as well as humans, which is why IoT has become such a hype. That hype is changing your business model. Through Thingification, the big guys have access to trillions of pieces of new connected data, meaning the information is organically connected with no need for human intervention. With the growing demand for applications that improve consumers’ quality of life, like calorie counters, we are quickly creating an entire ecosystem that is far more knowledgeable about people than the people themselves.

How to adapt as a telecom operator

Both national and multinational telecoms are coping by building open platforms as a way to connect to their users. This marriage between a telecom and internet company is the future that most industry leaders are betting on. You can learn best from one of our use cases with Swisscom, who uses automated image tagging for their MyCloud.

How do you monetize this trend?

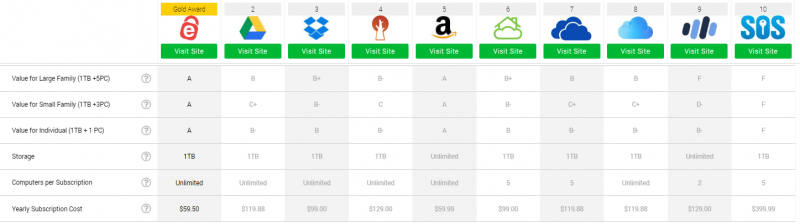

File sharing services like Google Drive and Dropbox are becoming more and more popular as demand for them rises. Personal devices can only hold a limited amount of data, while the cloud is practically unlimited.

Storing documents on file sharing services like Dropbox and Google Drive has become a common practice online in the last five years. In that time, as people create, edit and hoard older data files, they find they are running short of the free space.

With more and more people opting for either a tablet-only existence or switching from a traditional desktop computer with multiple internal drives to a laptop with a much smaller SSD drive, finding an alternate storage system is important. This is a common issue customers are facing as technology improves. The problem of storage space is getting increasingly important, and the large smartphone providers are not addressing it, probably on purpose, so that they can generate additional revenue. As a result, cloud storage companies are gaining from this and are offering a very economical solution to this problem. Learn directly from a use case:

SWISSCOM/IMAGGA - Enhancing Swisscom myCloud with automated image organization

What are you providing to users through personal cloud storage?

As a domestic telecom company, you have the opportunity to move up the information and communication technology (ICT) value chain through the integration of cloud services. Because of your direct relationship with consumers and your domestic infrastructure, there are several clear advantages that international competitors cannot offer:

- Secure Local Data Centers: Each European country has data privacy laws preventing any export of personal data to another country. Switzerland, for example, has one which prevents telecoms from selling or providing the data to any foreign government. The same applies to the USA.

- Cloud Services: Storage of pictures, music, text and files is only the basic service one can provide to users. There are numerous applications; the only limitation is the capacity and processing power of the servers. Norwegian telecom Telenor, for example, offers Microsoft 365 as a subscription service directly on their cloud.

Conclusion

Personal clouds are developing into a serious content provider, even though no single service has emerged as a clear-cut market leader. Overall it is anybody's game. Nevertheless, the industry is catching up and the time to act is today. There are more than enough examples; the most interesting one I have seen is Bulgarian telecom Mtel and their HBO GO service.

Services satisfying self-actualization boost revenue, which in turn creates loyalty. So “more is more”, meaning the more I offer, the more I get. It all boils down to providing content that is satisfying and generates more reasons to provoke shares, visits, and purchases. That is the way market opportunities become major revenue streams.

How do you use personal cloud storage to satisfy needs?

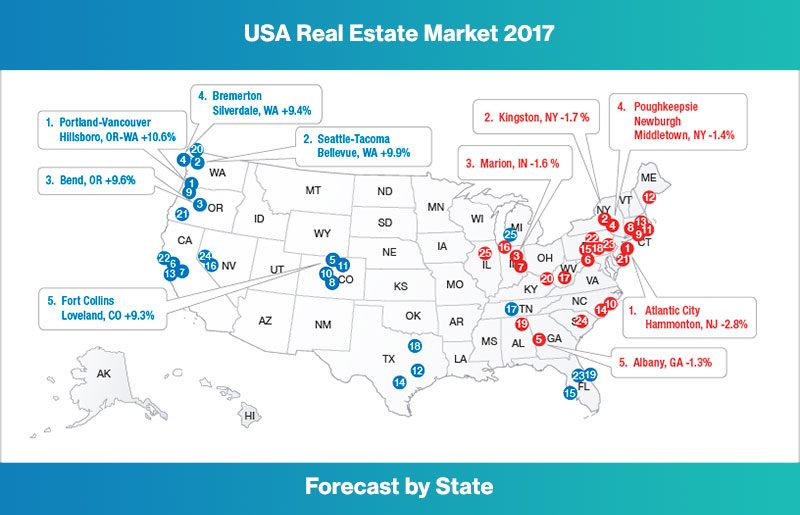

How AI Changes Real Estate For Sale Listing: Skyrocket the Role of Real Estate Agents

Nowadays, real estate agents offer a ton of options to help their visitors make the right property decision. Listings can have filters like neighborhood medians and cost calculators, but what they fail to do is make their properties more searchable. This is where artificial intelligence can both boost traffic and guide users through listings.

First, let's define AI: it is the ability of machines to solve problems through learning over time. It allows machines to make logical decisions similar to a human. Why is this useful? Because a human brain can process only a limited amount of data while a machine is limited only by processing power. Thus, if I am facing a purchasing decision dilemma, then artificial intelligence can help me. Let's look at 5 uses of AI that can influence the real estate industry:

Artificial Intelligence Search

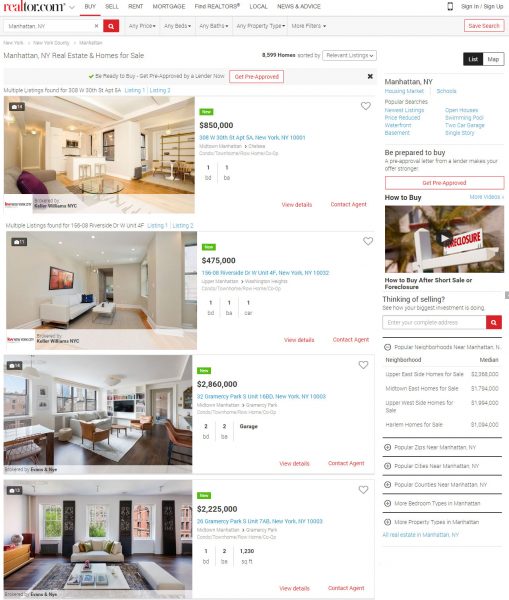

AI allows more specific search criteria rather than the traditional approach: location, zip code, area, bedrooms, bathrooms etc. With AI you can search based on criteria such as return on investment and forecasted property value. This aspect of AI-generated query allows real estate agents to have a much later involvement in the decision making process and brings in more qualified customers.

How many factors can you elicit with AI?

According to Google RankBrain, there are more than 10,000 A.I. criteria that can be applied in searches, and they can significantly change a traditional real estate website. Here is an example of a website using some AI in its sidebar search criteria:

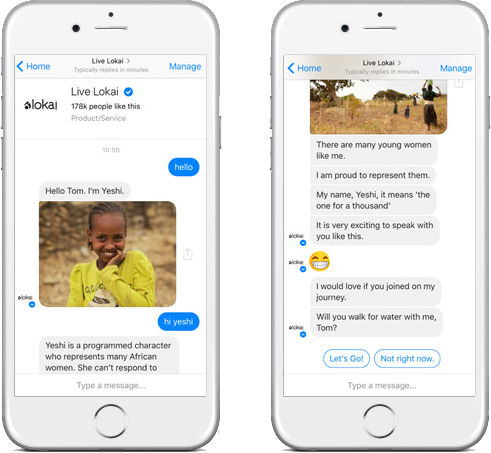

AI Powered Chat Bots

Probably the most mature technology on this list, AI chat bots have been in development for a while, but it is only recently that they have reached a wider audience. They can perform simple tasks like booking an appointment, giving you detailed property information, verifying contact details and even giving insights about which properties will be available next. Naturally, this prediction requires comprehensive knowledge of the market as well as a lot of data, but as chat bots find more and more applications in consumer-related industries, this process becomes more accurate. As this technology continues to develop, users will be much more inclined to purchase once they reach an agent, meaning the sales funnel will become a much more automated process. By 2020, A.I. chat bots are expected to handle most of the education phase in marketing departments. One of the best chat bots on the market was built by Lokai to raise awareness about Ethiopian women.

Maybe this is not enough to understand the power of chat bots. Watch this video to see natural language processing and artificial intelligence making conversation with a potential buyer:

Image Recognition Recommendation

Agencies can recommend properties based on what buyers have already looked at. This can be achieved by taking a picture when you visit a property and uploading it into Homesnap. They pick up the area, the type of house and your budget based on the property to give you an accurate listing, which they can automatically send in a newsletter or a simple notification email. As a result, the buying process speeds up because a broker no longer needs to search through properties and send you relevant listings. That leads us to think that in the future, most of the jobs in a real estate agency will be automated. This brings us to our next point.

Automating Jobs

According to an Oxford University study, AI will have a significant impact on automating most of the jobs in a real estate agency. Their study reveals which positions will be replaced by AI. By 2020, if their estimations are correct, those are:

Real Estate Association Managers: 81%

Real Estate Sales Agents: 86%

Appraisers and Assessors of Real Estate: 90%

Real Estate Brokers: 97%

If you are thinking that automating the entire real estate brokerage experience will make the purchase of a home an emotionless experience, then you might be right. At the end of the day, people want to make the best purchasing decision, and according to a study by Inman, this point has already been reached. In their study, clients preferred a bot-generated listing. Furthermore, they had a hard time telling which listings were human-generated and which were bot-generated.

How can bots suggest listings?

By using a random forest approach, AI can use previous searches, preferences, other users’ search patterns and many more criteria to give a much more accurate result and improve over time.

“What if semantic technologies combined with cognitive computing and natural language processing help agents do their job better? There is a way not to be left out.”

These capabilities seem to indicate a radical change in the industry. Despite the rigidity of the technology and the seeming difficulty clients have in recognizing that they are communicating with a bot, accurate listings are justifying the adoption. As artificial intelligence becomes more accurate with time, the user experience will improve as well. The role of the broker will be diminished but will not become redundant, since purchasing a home is a very emotional experience and there will always be the need for a broker to give that extra push.

Announcing V3.0 Tagging With Up to 45% More Classes

We are happy to announce the upcoming update of our Tagging technology! We have been looking to make this change so that you can rely more on your API calls and get more precise results to help you better analyze and classify your visual data.

The updated version will become active on the 28th of August, and you can expect up to 45% more classes* with an overall 15% improvement in precision and 30% better recall.

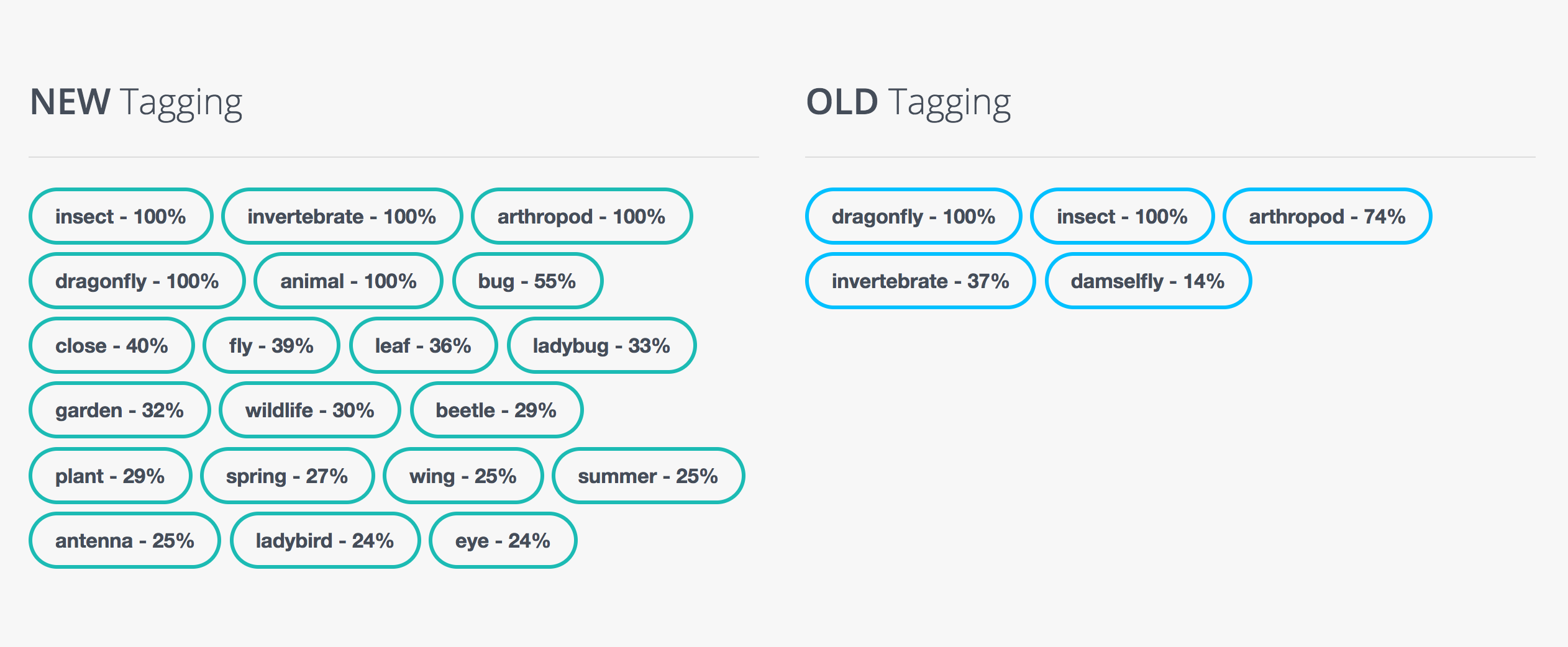

NEW vs. OLD Tagging Comparison

Because we believe that you need to see it with your own eyes rather than just hear about it, and before you test it on your own, we have decided to make this comparison with one of our Demo images.

In this case you can clearly notice 46% more classes and a clear improvement in keyword precision. Prior to the update the most significant keyword was insect, while now it is dragonfly: a small increase in recall but a significant increase in intrinsic value.

Important For Current Users:

Our old tagging version will remain the default one for a period of 14 days after the launch. To upgrade, you will need to add the parameter version=3 to your request URL. Follow this example if you are uncertain how to do this.

If your current request url looks like this:

http://api.imagga.com/v1/tagging?url=http://pbs.twimg.com/profile_images/687354253371772928/v9LlvG5N.jpg

To make a request to the new version, it must look like this:

http://api.imagga.com/v1/tagging?url=http://pbs.twimg.com/profile_images/687354253371772928/v9LlvG5N.jpg&version=3

After this, for a period of 7 days, the new Tagging version will become the default one, but all users will still be able to make requests to the old version by adding the parameter version=2. At the end of that period there will be only version 3 and you won't be able to add version parameters to your calls.
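As a rough illustration, the request URL can be assembled in code so that the version parameter is set in one place. The helper name `build_tagging_url` is made up for this sketch. It only builds the URL format shown above and does not send the request or deal with authentication.

```python
from urllib.parse import urlencode

# Endpoint exactly as it appears in the example URLs above.
TAGGING_ENDPOINT = "http://api.imagga.com/v1/tagging"

def build_tagging_url(image_url, version=None):
    """Build a tagging request URL, optionally pinning the tagging version.

    Leaving `version` as None omits the parameter, so the API's current
    default version is used.
    """
    params = {"url": image_url}
    if version is not None:
        params["version"] = str(version)
    # urlencode percent-encodes the image URL so it is safe inside a query string.
    return TAGGING_ENDPOINT + "?" + urlencode(params)

image = "http://pbs.twimg.com/profile_images/687354253371772928/v9LlvG5N.jpg"
print(build_tagging_url(image))             # default version
print(build_tagging_url(image, version=3))  # opt in to the new tagging
print(build_tagging_url(image, version=2))  # fall back to the old tagging
```

Keeping the version in a single argument makes it easy to drop the parameter again once only version 3 remains.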

What is your experience with version 2 and 3? Did you find much of a difference when testing with your dataset?

* Some classes may have changed names.

How Image Recognition Powered Image Searches can Improve Instagram

Searching on Instagram has not changed for a while and that is why you are probably using your friends to find what you need. Compared with Google Plus or Facebook, it is a lot harder to find images on a certain topic unless you are familiar with the hashtag system and its complexity.

Why is it difficult to find relevant content on Instagram?

If you have searched on Instagram then you have probably noticed it is done only through predefined and confirmed criteria like usernames, hashtags, locations etc. Finding images based on visual analysis is not possible, which creates a lot of ambiguity about the locations and actual descriptions of the images. If Instagram were able to understand what is in a picture, it would make searching images much easier for users, significantly improving the discoverability of content.

Instagram has become a social channel that people use to monetize content (no surprise here). However, where it fails is in helping profiles that post content to be more discoverable, relying instead on user-defined parameters to make images searchable. YouTube's automatic playlists have certainly done well in that direction by creating suggestions and by actively pushing longer content, but that strategy cannot work for Instagram. There is another direction that can create the same result: image recognition.

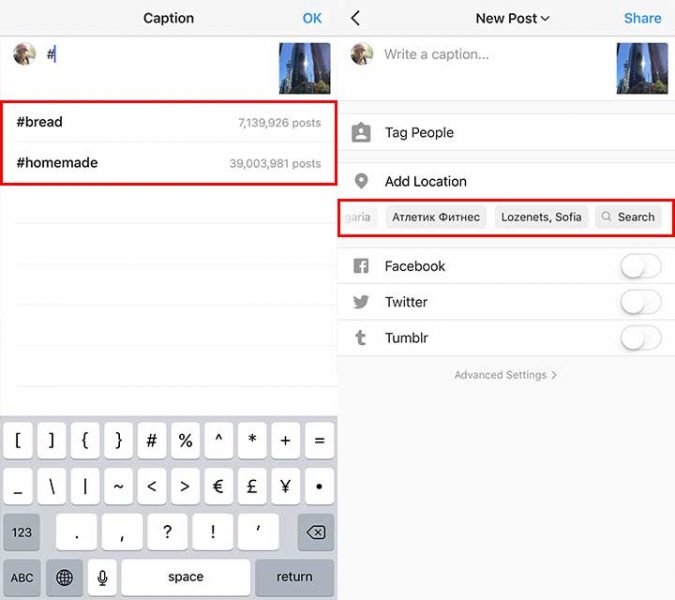

Let's take a look at the content sharing and uploading experience currently available on Instagram:

It is clear that you can only apply hashtags already used in the past and that the tagging suggestions are based on locations you have already visited. However, the system cannot recognize that this is a picture of Downtown Auckland, New Zealand, taken just now, or that it shows a building, a skyscraper etc.

How can Instagram benefit from using image recognition?

Object recognition allows machines to identify objects, positions, colors and nuances in an image and to suggest highly relevant keywords. Once this process is complete, a variety of improvements can be achieved to aid social media.

Suggest Related Keywords

Through object recognition, we are able to recognize the different objects in a picture and distinguish background from foreground. Furthermore, with over 95% accuracy, custom training can increase the depth and definition of the recognition.

Usually, auto-tagging has difficulty recognizing in-depth categories such as material, environment and much more. However, with further training of the model, we have achieved extremely high accuracy, resulting in keywords that surpass user expectations because they almost always return only relevant content. It is estimated that 80% of all posted images lack relevant hashtags. In online shopping, this is especially useful as it allows users to find matching clothes and styles based on pre-defined keywords that rely not only on users but also on designers, fashion experts or even celebrities. The same application in social media terms will allow hashtags and even simple search terms to provide accurate results.
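One way such keyword suggestions could be turned into hashtags is sketched below. The list of (tag, confidence) pairs stands in for the output of an auto-tagging service. Its shape, the made-up confidence values, the threshold and the function name are assumptions for illustration only.

```python
import re

def suggest_hashtags(tags, min_confidence=30.0, limit=5):
    """Turn (tag, confidence) pairs into at most `limit` hashtags, best first."""
    ranked = sorted(tags, key=lambda pair: pair[1], reverse=True)
    hashtags = []
    for tag, confidence in ranked:
        if confidence < min_confidence:
            break  # every remaining tag is even less certain
        # Hashtags cannot contain spaces or punctuation.
        cleaned = re.sub(r"[^0-9a-z]", "", tag.lower())
        candidate = "#" + cleaned
        if cleaned and candidate not in hashtags:
            hashtags.append(candidate)
        if len(hashtags) == limit:
            break
    return hashtags

# Hypothetical tagging output for a single photo (confidence in percent).
example_tags = [("dragonfly", 92.1), ("insect", 88.4), ("wing", 41.0),
                ("close up", 35.5), ("plant", 12.3)]
print(suggest_hashtags(example_tags))
# ['#dragonfly', '#insect', '#wing', '#closeup']
```

The threshold keeps low-confidence guesses such as "plant" out of the suggestions, so the user only has to confirm tags that are likely to be relevant.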

"Storytelling is evolving, and we are the real-time view into the world."

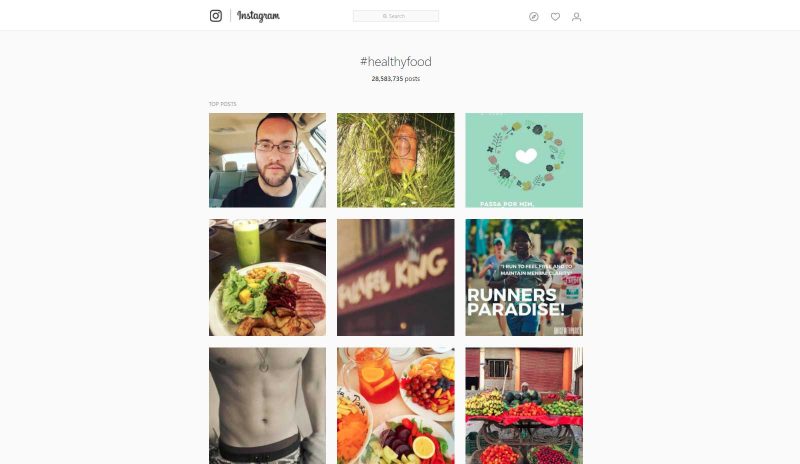

Improve Search Results

Search results are based on human input on images and are not always accurate. People add hashtags that have some perceived value for the image, which makes the results subjective and often unrelated.

Have you ever tried to think like a machine so that Google returns the right information?

That is because most search engines use static formulas to determine search results. Artificial intelligence can revolutionize this process. Instead of relying on a static formula, keywords generated through machine learning, artificial intelligence, and big data will give website visitors a higher chance of discovering relevant content and make user-generated content much more discoverable. Many of the problems related to search, such as black-hat tactics, exact keyword phrase matches and blacklisting, will be gone.
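To make the idea concrete, here is a minimal sketch of a search that relies on machine-generated keywords instead of user-supplied hashtags. The image IDs, tags and confidence values are invented, and ranking by summed confidence is just one simple choice, not how any particular search engine works.

```python
from collections import defaultdict

def build_index(image_tags):
    """Map each tag to the images it was detected in, with its confidence."""
    index = defaultdict(dict)
    for image_id, tags in image_tags.items():
        for tag, confidence in tags.items():
            index[tag.lower()][image_id] = confidence
    return index

def search(index, query):
    """Rank images by the summed confidence of the query words they match."""
    scores = defaultdict(float)
    for word in query.lower().split():
        for image_id, confidence in index.get(word, {}).items():
            scores[image_id] += confidence
    return sorted(scores, key=lambda image_id: scores[image_id], reverse=True)

# Hypothetical auto-tagging results for three uploaded images.
image_tags = {
    "img_1": {"skyscraper": 90.0, "city": 75.0},
    "img_2": {"beach": 88.0, "sunset": 60.0},
    "img_3": {"city": 80.0, "sunset": 55.0},
}
index = build_index(image_tags)
print(search(index, "city sunset"))
# ['img_3', 'img_1', 'img_2']
```

Because the tags come from the image content itself, an image can be found even if its owner never added a hashtag.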

Leave a comment below if we are forgetting some application that can benefit social media companies.

Managing Digital Assets for Enterprises: Media, Marketing & Asset Management

Digital Asset Management (DAM) is a concept still considered irrelevant in high-transaction-volume industries. They are reluctant to adopt agile cloud solutions, relying instead on outdated, costly and inefficient on-premise architectures. Furthermore, organizations are using several different solutions for managing email resources, creative visuals, web banners and classic media. This decentralization creates a chaotic workflow when running a marketing department. Regardless, the dogma “if it ain’t broke, don’t fix it” is very much active here, making the adoption of DAM as a service a slow evolution. Solutions such as IntelligenceBank aim to be the center of the digital asset universe, and their solution is most commonly applied to enterprise-wide use, content marketing, media, and production.

DAM's Impact

- Eliminates Redundancies in Digital Content & Eliminates Re-Creation Costs:

Departments creating multiple copies of the same resource or different versions of the same resources is a waste of time and money for the company. DAM solutions eliminate costly redundancy by creating an easily manageable central repository for all digital assets.

- Improves Campaign Management Overview:

Campaign resources are usually scattered across local hard drives, making any overview of the process impossible. DAM solutions such as IntelligenceBank allow you to keep all resources centralized and easily accessible from anywhere.

- Improves Campaign Consistency:

DAM solutions allow campaigns to be in one centralized location, greatly facilitating the management of all digital assets, along with their rights and usages.

DAM Impact with Image Recognition

- Creates Keywords and Metadata:

Digital assets need to be organized and defined in a manner that is consistent across the organization, departments and teams. Image recognition makes it possible to quickly classify, categorize and describe visual content with predefined classifiers, ensuring consistency.

- Facilitates On-Boarding Process for New Customers:

Users are reluctant to relocate their visual library because of the labor-intensive process of organizing it in the new system. With image recognition and auto-tagging, this problem is easily solved at scale, making onboarding as quick as uploading the files to the cloud.

- Improves Content Discoverability for Humans and Machines:

In a library with millions of subjects and classes, only the curator has a complete overview of resources. Using image recognition, every user has access, thanks to a seamless, unified classification and auto-indexing of all visual content.

What Solutions Does Image Recognition Offer for DAM?

As soon as users upload their creative files to their DAM, Imagga’s artificial intelligence software analyses their content and automatically pre-populates the metadata field with suggested relevant keywords. They can then easily decide which ones to keep according to their internal nomenclature.
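The review step described above might look roughly like this. The suggested keywords, the nomenclature mapping and the field names are hypothetical; a real DAM would supply its own vocabulary and metadata schema.

```python
def prepopulate_metadata(suggested, nomenclature, min_confidence=40.0):
    """Split suggested keywords into accepted terms and ones left for review.

    `nomenclature` maps raw keywords to the organization's preferred terms.
    """
    accepted, needs_review = [], []
    for keyword, confidence in suggested:
        if confidence < min_confidence:
            continue  # too uncertain to show to the user at all
        term = nomenclature.get(keyword.lower())
        if term is None:
            needs_review.append(keyword)  # not in the vocabulary: let a person decide
        elif term not in accepted:
            accepted.append(term)
    return {"keywords": accepted, "needs_review": needs_review}

# Hypothetical internal vocabulary and suggestions for one uploaded file.
nomenclature = {"sneaker": "Footwear", "shoe": "Footwear", "logo": "Brand Mark"}
suggested = [("sneaker", 95.0), ("shoe", 90.0), ("logo", 70.0),
             ("floor", 55.0), ("sky", 10.0)]
print(prepopulate_metadata(suggested, nomenclature))
# {'keywords': ['Footwear', 'Brand Mark'], 'needs_review': ['floor']}
```

Mapping synonyms such as "sneaker" and "shoe" onto one preferred term is what keeps the metadata consistent across departments.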

Using deep learning, Imagga also offers the option for users to train their own custom categorizer, precisely tailored to their content. For example, a brand might wish to have its product images auto-tagged not only with names but with SKUs as well. Or a library specialized in mushrooms might need every species identified by its scientific Latin name.

The new tagging system is already live and you can try version 3.0 by registering as a free user.