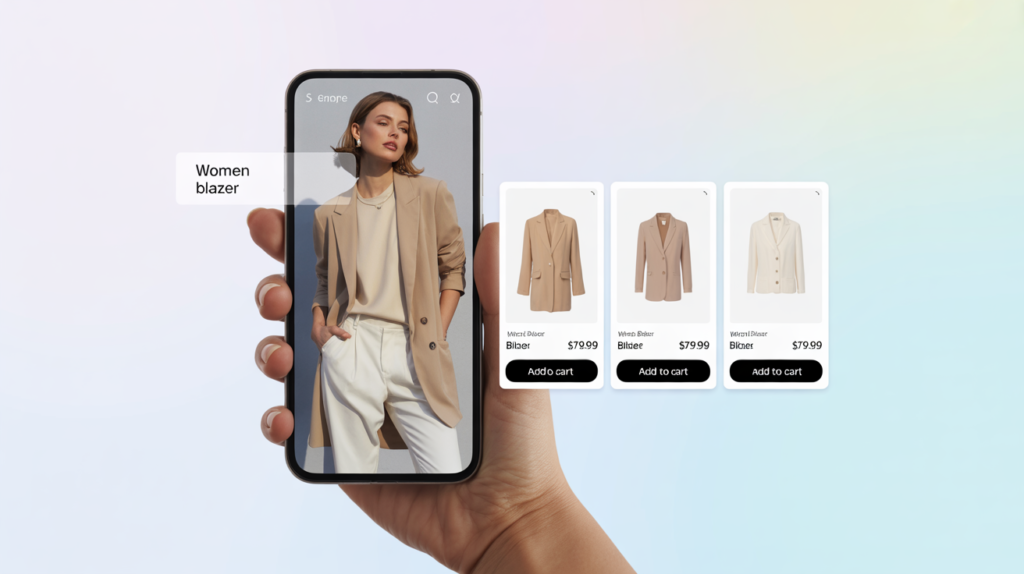

In an increasingly visual-first digital world, users don’t want to describe what they see; they want to show it. Visual search bridges that gap, turning user-uploaded images into discovery experiences. From finding the perfect chair to identifying a rare plant or surfacing similar products, this technology is changing how people explore and buy online.

Visual search is not just for end users; it is equally useful in backend operations. By matching scanned or photographed items against the existing product images in your catalog, it speeds up product lookup and makes inventory handling more efficient.

If you’re building a platform, customer-facing or internal, that needs smart, intuitive product discovery, especially in retail, fashion, furniture, or lifestyle, it’s time to consider adding search by image. Let’s explore how it works, how to implement it using a plug-and-play API like Imagga Visual Search, and the benefits it can deliver for your business.

What Is Visual Search?

Visual search is a technology that allows users to search for information using images instead of text.

At its core, it uses computer vision, a subset of AI that helps machines “see” and interpret visuals. Much as your eyes pick out and recognize objects that catch your attention, computer vision enables systems to spot and analyze items in images. The system detects objects, extracts features such as color, shape, or texture, and compares them against a database to find the most visually similar items.

Think of it as reverse image search, but smarter, faster, and purpose-built for product discovery, not just metadata matching.
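The “extract features, then compare” loop described above can be illustrated with a deliberately tiny Python sketch. It is not how Imagga or any production system works: the `color_histogram` feature extractor, the `most_similar` helper, and the toy pixel data are all hypothetical, and real systems use learned embeddings that capture shape and texture as well as color. The comparison step uses cosine similarity, a common choice for ranking feature vectors.

```python
from math import sqrt

def color_histogram(pixels, bins_per_channel=2):
    """Toy feature extractor (hypothetical): bucket (R, G, B) pixels into a
    normalized histogram with bins_per_channel**3 entries."""
    size = 256 // bins_per_channel

    def bucket(value):
        # Clamp so that 255 never lands outside the last bin.
        return min(value // size, bins_per_channel - 1)

    hist = [0.0] * (bins_per_channel ** 3)
    for r, g, b in pixels:
        idx = (bucket(r) * bins_per_channel + bucket(g)) * bins_per_channel + bucket(b)
        hist[idx] += 1
    total = sum(hist) or 1.0
    return [h / total for h in hist]

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def most_similar(query_pixels, catalog):
    """Rank catalog items (name -> list of pixels) by similarity to the query."""
    query = color_histogram(query_pixels)
    scores = {
        name: cosine_similarity(query, color_histogram(pixels))
        for name, pixels in catalog.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical toy catalog: each "image" is just a list of RGB pixels.
catalog = {
    "red_chair": [(200, 30, 30)] * 10,
    "blue_sofa": [(20, 30, 220)] * 10,
    "red_lamp": [(220, 40, 40)] * 8 + [(240, 240, 240)] * 2,
}
ranking = most_similar([(210, 20, 25)] * 10, catalog)
print([name for name, _ in ranking])  # ['red_chair', 'red_lamp', 'blue_sofa']
```

The red lamp ranks second because most of its pixels share the query’s color bucket, while the blue sofa shares none, so it scores zero.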

What’s the Difference Between Reverse Image Search and Visual Search?

Reverse image search is typically used to find the source of an image or locate exact or near-exact copies across the web. It compares the uploaded image to a database of indexed images and returns matches based on overall similarity or file attributes. Google Images and TinEye are common examples.

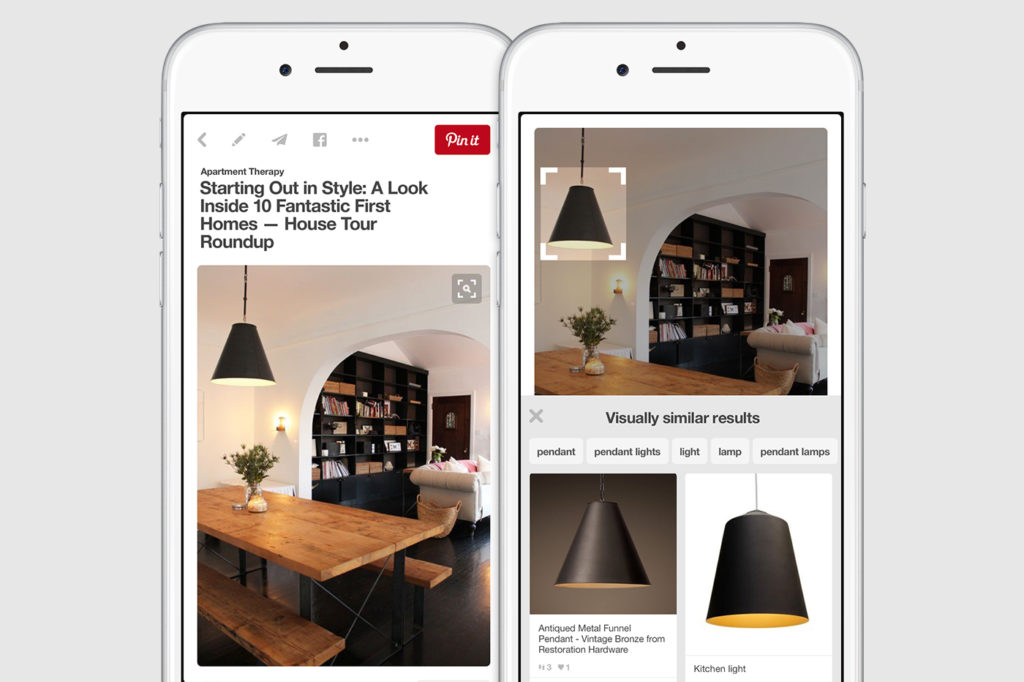

Visual search, on the other hand, goes a step further: it uses computer vision to analyze specific visual features such as shape, color, texture, and context, and then finds visually similar items even when they are not exact matches. This makes the technology ideal for product search, where a user might upload a photo of a chair or outfit and expect to see lookalike products, not just the original image.

In short, reverse image search is about finding the same image; visual search is about understanding and matching what’s in the image so that it can show you visually similar items.
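To make the distinction concrete, here is a minimal Python sketch of average hashing (aHash), a simple, well-known perceptual-hash technique for detecting exact or near-exact copies. It is an illustration only, not a claim about how Google Images or TinEye work internally. It assumes the images have already been downscaled to small grayscale grids, and the `max_bits` threshold is an arbitrary assumption.

```python
def average_hash(gray):
    """aHash: one bit per pixel, set when the pixel is brighter than the image mean.
    `gray` is a small 2D grid of grayscale values (assumed already downscaled)."""
    flat = [value for row in gray for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]

def hamming_distance(a, b):
    """Number of positions where two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))

def is_near_copy(img_a, img_b, max_bits=2):
    """Treat two images as copies if their hashes differ in at most max_bits bits."""
    return hamming_distance(average_hash(img_a), average_hash(img_b)) <= max_bits

# Toy 4x4 grayscale "image": dark on the left, bright on the right.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [11, 21, 201, 211],
]
brightened = [[value + 20 for value in row] for row in original]
mirrored = [list(reversed(row)) for row in original]

print(is_near_copy(original, brightened))  # True: same image, new exposure
print(is_near_copy(original, mirrored))    # False: similar scene, different hash
```

A brightened copy keeps the same hash and is flagged as a copy, but a mirrored version of the same scene is not. That brittleness is why visual search compares richer feature vectors and ranks results by similarity instead of looking for hash matches.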

How Your Users Already Use Visual Search (And Why You Should Catch Up)

Your users are already used to this way of finding products — just not on your platform.

Google Lens helps them identify plants, clothes, pets, and restaurants. Pinterest and Amazon let them tap or upload photos to discover similar products. These are among the most popular visual search tools, and they are built into apps people already use every day across their devices.

Fashion and furniture brands use it to suggest visually similar styles based on what someone’s browsing.

These platforms have trained users to expect camera-first interaction. If your product discovery still relies on drop-down filters and keyword tags, you’re making users work harder than they should.

Identifying Objects and Products with Visual Search

One of the most compelling advantages of the technology is its ability to recognize real-world objects and instantly suggest matches — without the need for perfect descriptions or category filtering.

Whether a user snaps a photo of a chair they saw in a café or uploads an image of a product they own, Imagga’s visual search can identify similar items from your catalog. This goes beyond product titles or metadata — it looks at the actual visual features of the item (like shape, texture, or color) to make a match.

You can use this to:

- Match photographed furniture, clothing, or accessories with similar SKUs

- Help users identify and explore unknown products in your database

- Let internal teams or warehouse staff scan and identify items visually, even without barcodes or tags

- Enable users to identify plants or animals, such as discovering the species of an animal they encounter

Visual Search for Product Discovery and Inspiration

Beyond identification, visual search is a powerful tool for serendipitous discovery — especially in verticals like fashion, furniture, and home decor where aesthetics drive decisions.

What is serendipitous discovery? It is the process of unexpectedly finding something valuable or appealing while searching for something else, or for nothing at all. In the context of visual search, it refers to uncovering relevant or inspiring products through image-based exploration, even without a clear or specific intent.

With Imagga’s visual search API integrated into your app or website, users can:

- Upload a photo of an outfit and get instant style matches from your product catalog, helping them find the look they want

- Take a picture of a friend’s apartment and find complementary furniture or decor, or discover items that match the look of something that catches their eye

Building a Visual Search Backend That Scales

For teams ready to move beyond basic tagging and manual cataloging, Imagga provides the technical foundation for building a powerful, scalable visual product search backend. Unlike general-purpose AI services, Imagga is purpose-built for developers who want tight control over the image-matching process inside their own applications.

Using Imagga’s Visual Search API, you can index your entire product catalog not just by keywords or categories, but by the visual features of each item — color, texture, shape, and more. This allows you to create a backend that responds in real time to user-uploaded images or camera input, returning the most visually relevant products from your catalog.
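The shape of such a backend can be sketched as an index that maps each SKU to a feature vector and returns the nearest items for a query vector. The sketch below is hypothetical and does not use Imagga’s API: `VisualIndex`, its methods, and the SKU names are illustrative, and the vectors are assumed to come from some image-embedding model. It uses brute-force cosine similarity, which is fine for a demo; large catalogs usually rely on an approximate nearest-neighbor index instead.

```python
import heapq
from math import sqrt

class VisualIndex:
    """Hypothetical in-memory index mapping SKU -> unit-length feature vector.

    The vectors are assumed to come from an image-embedding model; this class
    only stores and compares them with a brute-force scan.
    """

    def __init__(self):
        self._items = {}

    @staticmethod
    def _normalize(vector):
        norm = sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("cannot index a zero vector")
        return [x / norm for x in vector]

    def add(self, sku, vector):
        """Store a catalog item; re-adding a SKU overwrites its vector."""
        self._items[sku] = self._normalize(vector)

    def query(self, vector, k=3):
        """Return up to k (sku, cosine_similarity) pairs, best match first."""
        q = self._normalize(vector)
        # With unit-length vectors, the dot product equals cosine similarity.
        scored = (
            (sum(a * b for a, b in zip(q, stored)), sku)
            for sku, stored in self._items.items()
        )
        return [(sku, score) for score, sku in heapq.nlargest(k, scored)]

index = VisualIndex()
index.add("chair-01", [1.0, 0.0, 0.0])
index.add("chair-02", [0.9, 0.1, 0.0])
index.add("lamp-07", [0.0, 0.0, 1.0])
print(index.query([1.0, 0.0, 0.0], k=2))
```

Normalizing vectors once at indexing time keeps each query to a single dot product per item, which is the same trade-off most vector indexes make.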

Product Search Optimization

Visual tools like Google Lens are transforming the way users interact with the world around them. Instead of typing out long descriptions or struggling to find the right keywords, users can simply open their camera app or upload a photo to instantly search for products, identify plants, or even recognize animals they spot in the park. The Google app puts this image search capability right at users’ fingertips, making it easier than ever to discover details about the things they see every day.

With the product search feature, users can snap a picture of a stylish outfit, a unique chair, or a piece of home decor in a friend’s apartment and quickly find similar clothes, furniture, or accessories online. The Google Lens icon within the app lets you search for products, landmarks, and even dog breeds using just your camera or an image from your gallery. This integration means you can access visual search on your phone, computer, or web browser, whenever and wherever inspiration strikes.

But visual search isn’t just about shopping. Lens can also copy text from images and surface explainers, videos, and answers to homework questions in subjects like math, physics, history, biology, and chemistry. Whether you’re stuck on a tricky problem or want to learn more about a plant you found on a walk, the app helps you access information and learn about the world in a whole new way.

The product search feature helps you check and compare products, making it easy to find the right item for your needs, whether furniture, home decor, or clothing. With support for multiple languages and availability in many countries, including the Netherlands, Google Lens brings faster product discovery to users in many markets.

With visual search, you can refine your results, ask questions, and get answers instantly, without having to type a single word. Whether you’re looking to identify a landmark, find the perfect outfit, or solve a science question, Google Lens lets you use your camera to search, learn, and shop more efficiently. This makes visual search an essential tool for anyone who wants to make smarter decisions and navigate the world with ease.

Visual Search Use Cases (You Can Launch Today)

Here are just a few powerful ways platforms are using visual search:

- Fashion eCommerce: Let users upload a photo of an outfit and see similar styles in your catalog

- Home Decor & Furniture: Snap a picture of a chair, table, or lamp and get lookalike pieces

- Secondhand Marketplaces: Use image matching to remove duplicate listings and suggest pricing

- Plant/Animal Identification Apps: Recognize species and link to learning resources

- Digital Asset Management: Organize and retrieve visuals using image similarity, not filenames
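For the secondhand-marketplace case, duplicate cleanup can be sketched as grouping listings whose image feature vectors are nearly identical. This minimal Python sketch assumes the vectors come from an image-embedding model; the `duplicate_groups` helper, the listing IDs, and the 0.97 threshold are hypothetical choices, and pricing suggestions are out of scope. A union-find structure makes grouping transitive, so if A matches B and B matches C, all three land in one group.

```python
from itertools import combinations
from math import sqrt

def cosine(a, b):
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def duplicate_groups(listings, threshold=0.97):
    """Group listing IDs whose image vectors are at least `threshold` similar.

    `listings` maps listing_id -> feature vector. Returns only groups with
    two or more members. Compares every pair, so cost grows quadratically.
    """
    parent = {listing_id: listing_id for listing_id in listings}

    def find(x):
        # Follow parent links to the group's root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in combinations(listings, 2):
        if cosine(listings[a], listings[b]) >= threshold:
            parent[find(a)] = find(b)  # merge the two groups

    groups = {}
    for listing_id in listings:
        groups.setdefault(find(listing_id), []).append(listing_id)
    return [sorted(members) for members in groups.values() if len(members) > 1]

listings = {
    "ad-1": [1.0, 0.0, 0.0],
    "ad-2": [0.99, 0.01, 0.0],   # same item, re-photographed
    "ad-3": [0.0, 1.0, 0.0],
    "ad-4": [0.0, 0.995, 0.05],  # near-duplicate of ad-3
    "ad-5": [0.5, 0.5, 0.7],     # unrelated item
}
print(duplicate_groups(listings))  # [['ad-1', 'ad-2'], ['ad-3', 'ad-4']]
```

Because every pair is compared, this only suits small batches; a larger marketplace would first narrow candidates with a nearest-neighbor index and then apply the threshold.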

You can find more real-world examples of visual search applications here.