What is OCR in Content Moderation?

OCR (Optical Character Recognition) is applied in content moderation to identify and analyze text inside images, making sure that harmful or inappropriate content doesn’t circulate hidden in screenshots, memes, and other user-generated visuals.

Online conversations are not what they used to be. We write less and share more of our thoughts, opinions, and jokes through screenshots, memes, and other images.

While this image-based communication leaves plenty of room for creativity, it comes with risks. Offensive or false text can be embedded in such visuals and spread unchecked across the digital space.

There’s a huge difference between moderating regular text and text within images. Traditional text analysis cannot read text that exists only as pixels, and this is where OCR plays its crucial role in text-in-image moderation.

What’s the Difference Between Text Moderation and Text-in-Image Moderation?

Both text moderation and text-in-image moderation aim to spot inappropriate text content.

Text moderation is the traditional method through which digital platforms filter and analyze plain text coming from user posts, comments, chats, and the like. It is a comparatively straightforward process, as the text is already machine-readable and algorithms can process it in large quantities.

Text-in-image moderation, on the other hand, is more challenging. It handles text within images by first using OCR to extract that text from the visual content. The extracted text can then be screened in the traditional way to check whether it complies with moderation policies. It’s also worth noting that, unlike classical image moderation, text-in-image moderation doesn’t focus on the visuals themselves.

To have a complete grasp of the different moderation modes, it’s important to differentiate between types of content and how text is embedded in them. Without text-in-image moderation, users can bypass filters by placing text inside visuals, which can lead to the wide distribution of harmful or inappropriate content.

How Is OCR Used in Content Moderation?

Optical Character Recognition gives computers the ability to recognize text that is embedded within images. Here is how OCR fits into the text-in-image moderation process.

The first step of this type of moderation is text extraction. OCR processes an image and turns the text in it into machine-readable data. Modern OCR engines typically handle varied colors, fonts, and complex backgrounds well, although accuracy still depends on image quality.

Once the text has been ‘read’, it can be analyzed for harmful and inappropriate language, hate speech and other serious issues, such as harassment and bullying, self-harm and suicide content, spam, scam, fraudulent ads, and personal data and privacy leaks.

Think of harmless-looking memes on social media that contain repulsive, harmful, or illegal text. Without OCR, that text would slip through unnoticed. The same goes for, say, counterfeit products with fake branding on ecommerce websites.

Advanced OCR solutions are applied together with Natural Language Processing (NLP) algorithms to help understand context, intent, and cultural nuances. On the basis of this textual and contextual analysis, the moderation system can take action on certain content, such as flagging it for review or removing it. OCR thus helps moderation platforms close the blind spot of text embedded in images.
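The extract, analyze, and act steps described above can be sketched roughly as follows. This is a minimal illustration, not a production design: the `ocr` callable, the `POLICY_TERMS` keyword lists, and the `ACTIONS` mapping are all hypothetical, and a real system would rely on an actual OCR engine and NLP models rather than keyword matching.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical policy terms per category. A real system would use NLP models
# that understand context instead of a plain keyword list.
POLICY_TERMS: Dict[str, List[str]] = {
    "spam": ["free money", "click here"],
    "harassment": ["nobody likes you"],
}

# Hypothetical mapping from category to the action taken on a match.
ACTIONS: Dict[str, str] = {"spam": "remove", "harassment": "flag_for_review"}


@dataclass
class Decision:
    action: str            # "allow", "flag_for_review", or "remove"
    categories: List[str]  # policy categories that matched
    text: str              # text extracted from the image


def moderate_image(image_bytes: bytes, ocr: Callable[[bytes], str]) -> Decision:
    """Extract text with the supplied OCR callable, then screen it."""
    text = ocr(image_bytes)
    lowered = text.lower()
    hits = [
        category
        for category, terms in POLICY_TERMS.items()
        if any(term in lowered for term in terms)
    ]
    if not hits:
        return Decision("allow", [], text)
    # The strictest action wins: removal outranks flagging for review.
    if any(ACTIONS[category] == "remove" for category in hits):
        return Decision("remove", hits, text)
    return Decision("flag_for_review", hits, text)


def fake_ocr(image_bytes: bytes) -> str:
    """Stand-in for a real OCR engine, used only to keep the sketch runnable."""
    return image_bytes.decode("utf-8")
```

Passing the OCR step in as a parameter keeps the screening logic independent of any particular engine, so `fake_ocr` can be swapped for a real one without touching the rest.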

The Challenges of OCR in Moderation

Optical Character Recognition is a powerful tool that has immense benefits for content moderation. Yet there are some challenges that moderation platforms have to keep in mind.

Image Quality

Various image quality factors can affect the precision of OCR ‘reading’, including low resolution, noisy backgrounds, and distorted fonts. For example, memes often contain warped, bold, or shadowed letters that can be difficult to process. To keep up, OCR models have to be continuously trained on new data so they can recognize distortions and cope with poor image quality.
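One common way to cope with poor image quality is to use the per-word confidence scores that many OCR engines report. The sketch below assumes such scores are available as values between 0 and 1. The function name, the input shape, and the 0.80 threshold are illustrative assumptions, not part of any specific product.

```python
from typing import List, Tuple


def route_by_confidence(
    words: List[Tuple[str, float]], min_mean_conf: float = 0.80
) -> str:
    """Decide how to handle OCR output based on its average confidence.

    `words` holds (text, confidence) pairs with confidence in [0, 1].
    The threshold is an assumed value that would need tuning on real data.
    """
    if not words:
        return "no_text"
    mean_conf = sum(conf for _, conf in words) / len(words)
    # Low-confidence reads are unreliable, so send them to a person
    # instead of trusting automated screening.
    return "auto_screen" if mean_conf >= min_mean_conf else "human_review"
```

Routing uncertain reads to human review trades some throughput for fewer missed violations on warped or low-resolution images.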

Language Diversity

If only harmful text appeared solely in English… But the reality is that content moderation has to work effectively in numerous languages, recognizing various alphabets, scripts, and even emojis and slang. State-of-the-art OCR solutions have to be multilingual, so they can handle Latin, Cyrillic, Arabic, and Asian scripts, among others. They also need constant updating to keep pace with the evolution of internet language.

Speed and Scale

With the sheer amount of user-generated content like memes and screenshots uploaded daily, digital platforms face a heavy text-in-image moderation workload. Automated content moderation can keep up with these volumes, and the same goes for OCR, which can handle massive amounts of content in real time.

Bypass Tricks

Word distortion, symbol substitution, and text split across several images are just some of the evasion tactics that some users apply to share harmful text in images. OCR systems need constant updates and anti-evasion tools so they can keep catching harmful content despite these tricks.
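A simple anti-evasion step is to normalize the extracted text before screening it. The sketch below is only an illustration under stated assumptions: the `LOOKALIKES` table is a small hypothetical sample, the approach covers Latin letters only, and joining the text from all images in a post is one possible way to handle words split across images.

```python
import re
import unicodedata
from typing import Iterable

# Hypothetical sample of digit and symbol substitutions.
# A real table would be far larger and language-aware.
LOOKALIKES = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalize(text: str) -> str:
    """Reduce text to plain lowercase Latin letters for matching."""
    # NFKC folds full-width and other compatibility characters to plain forms.
    text = unicodedata.normalize("NFKC", text).lower()
    text = text.translate(LOOKALIKES)
    # Drop everything that is not a letter, so "s.c.a.m" and "s c a m" collapse.
    return re.sub(r"[^a-z]", "", text)


def contains_blocked(image_texts: Iterable[str], blocked: Iterable[str]) -> bool:
    """Check the combined text of all images in one post against blocked terms."""
    combined = normalize(" ".join(image_texts))
    terms = [normalize(term) for term in blocked]
    # Skip terms that normalize to an empty string, which would match anything.
    return any(term and term in combined for term in terms)
```

Removing all spaces makes the check robust against spacing tricks, but it also raises the risk of false positives when a blocked term happens to appear inside or across harmless words, so matches from a step like this are better suited to flagging for review than to automatic removal.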

The Benefits of OCR for Moderation Teams

The advantages of embedding OCR in the content moderation process are not theoretical — they are tangible.

Moderation teams get concrete benefits that make their work easier and more effective:

Increased Detection Accuracy

Without OCR, harmful or inappropriate text inside images would go unnoticed, so its use directly improves detection accuracy. Memes, screenshots, and other user-generated content that contain such text go through additional screening.

Closed Gaps of Potential Exploitation

Including harmful text within visuals is a common evasion tactic used by bad actors. OCR makes it much harder for this content to slip through. As social media platforms struggle with memes and other images that go against their policies, OCR offers a viable solution for text-in-image moderation that can significantly reduce violations.

Enhanced Trust and Safety

People lose trust in a digital platform when they see harmful content spreading freely. Effective content moderation that includes OCR helps keep digital spaces safe, which in turn boosts user loyalty and brand reputation. This helps moderation teams hit their Trust and Safety program goals.

Improved Regulatory Compliance

Content moderation regulations such as the EU’s Digital Services Act (DSA) are getting stricter, and digital businesses have to comply with them to avoid penalties. Applying OCR in the content moderation workflow helps moderation teams meet regulatory compliance goals by supporting accurate moderation of text in images.

Imagga’s Text-in-Image Moderation

Imagga’s Text-in-Image Moderation is built on our advanced adult content detection model for images and short-form videos and is embedded within these solutions. By providing effective moderation of text embedded in visuals, it closes a significant gap in visual content safety tools, completing our powerful Imagga Moderation Suite.

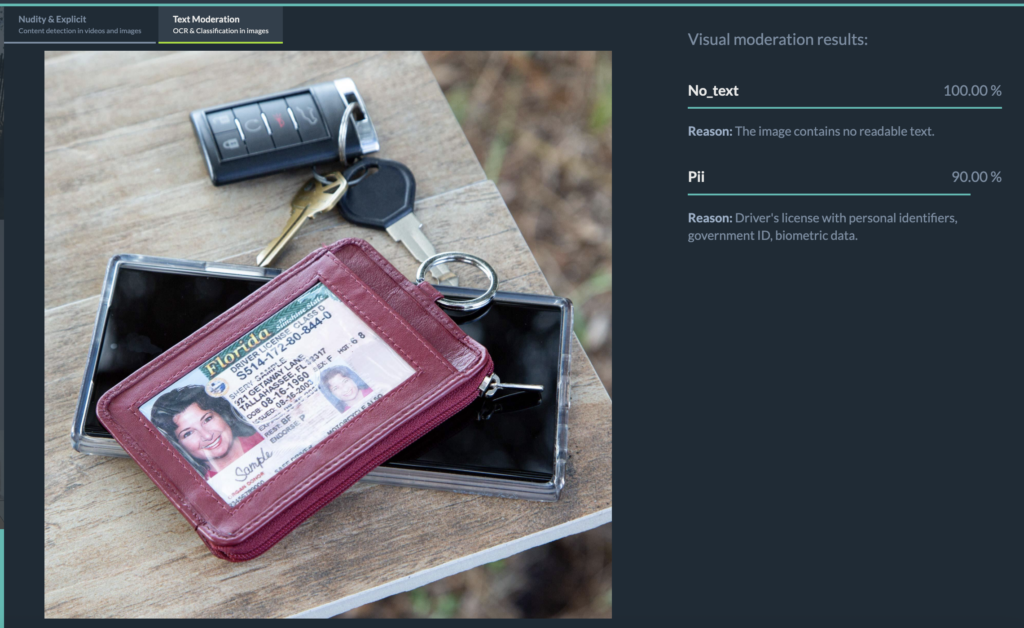

Our system employs OCR together with a precisely calibrated Visual Large Language Model, combining exact character recognition with contextual insights. Extracted text is organized in a set of categories, including safe content, drug references, sexual material, hate speech, conflictual language, profanity, self-harm, and spam.

Besides text extraction at scale, our Text-in-Image Moderation is trained to understand context, nuance and metaphor. It operates in multiple languages and writing systems.

In addition, it provides PII detection, protecting users’ sensitive information. It spots any sharing of personal data such as names, usernames, contact details, financial data, and the like — whether it has been accidental or intentional.
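To give a rough idea of what PII detection on extracted text involves, here is a minimal sketch based on regular expressions. It is not Imagga’s implementation: the patterns and the `find_pii` function are hypothetical, deliberately simple, and cover only email addresses and phone-like numbers.

```python
import re
from typing import Dict, List

# Deliberately simple, assumed patterns. Real PII detection needs broader
# coverage (names, usernames, financial data) and locale-aware rules.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def find_pii(text: str) -> Dict[str, List[str]]:
    """Return email addresses and phone-like numbers found in OCR output."""
    return {
        "emails": EMAIL_RE.findall(text),
        "phones": PHONE_RE.findall(text),
    }
```

For example, running `find_pii` on the text of a screenshot that shows a chat with a contact address would return that address under `emails`, which a platform could then blur, flag, or block.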

The Text-in-Image Moderation can be customized to meet the particular needs of digital businesses, for example by including or excluding certain types of content in the moderation process.

Want to check out how it works? Take a look at Imagga’s Text-in-Image moderation in action in our demo.

Get Started with OCR for Effective Text-in-Image Moderation

Memes, screenshots, and all types of visual content are here to stay in our digital environment, so effective moderation of text in images is a must.

That’s why text-in-image moderation powered by OCR is not simply a nice perk. It’s a crucial tool for catching harmful or inappropriate text embedded within visuals that would otherwise slip through the cracks.

Effective text-in-image moderation is essential for digital businesses that want to thrive. It helps them safeguard their brand reputation, protect their users, maintain regulatory compliance, and support long-lasting customer relations.

Imagga is a trusted partner in this process. Our AI-powered OCR technology helps businesses identify moderation issues in visual content — and deal with them in quick and smart ways.

Ready to get started? Get in touch with our AI experts today.

FAQ: OCR for Text in Images

What is OCR?

OCR stands for Optical Character Recognition. It’s a technology that empowers computers to recognize text embedded within visuals, such as words in memes, screenshots, and other user-generated visuals.

What is text-in-image moderation?

Text-in-image moderation is a type of content moderation that entails identifying and filtering harmful or inappropriate text hidden within images. These may include memes, screenshots, scanned documents, and even video frames.

What’s the difference between text moderation and text-in-image moderation?

Text moderation is the process of screening text content like posts, comments, and chats in order to ensure it’s safe and legal. Text-in-image moderation entails using OCR to ‘see’ text within images, so that it can be analyzed in the same way for safety and compliance.

Why is OCR important for content moderation?

Offensive language, misinformation, hate speech, and other types of inappropriate text can be easily hidden within user-generated visuals. Traditional text moderation doesn’t catch it. That’s why OCR is crucial for text-in-image moderation.

What types of content can OCR tackle?

OCR can extract text from different types of visuals, including memes, screenshots, scanned documents, and even video frames.

This publication was created with the financial support of the European Union – NextGenerationEU. All responsibility for the document’s content rests with Imagga Technologies OOD. Under no circumstances can it be assumed that this document reflects the official opinion of the European Union and the Bulgarian Ministry of Innovation and Growth.