In 2023, the average person spent 17 hours per week watching online video content. Unfortunately, not all of it is appropriate – or even safe – and the problem is only growing. TikTok alone removed nearly 170 million videos from its platform in the first quarter of 2024 due to policy violations.

Find more statistics at Statista

Without proper video moderation, harmful, illegal, or offensive content can spread unchecked, damaging user trust and a platform’s reputation. Research also shows that exposure to violent or harmful content can reduce empathy and increase aggression, anger, and violent behavior.

Watching unmoderated video content is like drinking water from an unfiltered source – the more you consume, the higher the risk of exposure to harmful elements. Just as contaminated water can lead to physical illness, repeated exposure to violent, misleading, or harmful videos can negatively impact mental health, distorting perceptions of reality and increasing aggressive tendencies. Video moderation is especially important for protecting vulnerable groups, such as children, from exposure to inappropriate or harmful content.

If you run a platform with user-generated videos, ensuring safe, compliant, and appropriate content is not just an option – it’s a necessity. But video content moderation is far more complex than moderating images or text. This article explores what video moderation is, how it works, and why it’s critical for digital platforms that host user-generated content.

What is Video Moderation?

Video moderation is the process of analyzing and managing user-generated video content to ensure compliance with platform guidelines, regulatory standards, and community expectations. It helps digital platforms and businesses maintain a safe, engaging environment by detecting harmful, inappropriate, or non-compliant content across everything a video contains: the moving images, the audio track, and any on-screen text.

As a subset of content moderation, video moderation is more complex due to its multi-layered nature, requiring advanced tools and cross-modal analysis to assess speech, visuals, and contextual meaning across dynamic formats like live streams and pre-recorded videos. Moderating audio content in videos is especially important, as it requires the ability to review, transcribe, and analyze audio for potential policy violations.
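To make the idea of cross-modal analysis more concrete, here is a minimal sketch of one way per-modality results could be combined. It assumes that separate models have already produced a risk score between 0 and 1 for the visuals, the speech, and the text overlays of each video segment. The class name, field names, and the choice to take the maximum score are illustrative assumptions, not a description of any specific product.

```python
from dataclasses import dataclass


@dataclass
class ModalityScores:
    """Hypothetical risk scores in [0, 1] for one segment of a video."""
    visual: float        # e.g. from a classifier run on sampled frames
    speech: float        # e.g. from a classifier run on the transcript
    overlay_text: float  # e.g. from text read off captions and overlays


def segment_risk(scores: ModalityScores) -> float:
    """Use the highest score so a violation in one modality is not averaged away."""
    return max(scores.visual, scores.speech, scores.overlay_text)


def video_risk(segments: list[ModalityScores]) -> float:
    """Treat a video as being as risky as its riskiest segment (0.0 if empty)."""
    return max((segment_risk(s) for s in segments), default=0.0)
```

Taking the maximum is a deliberately cautious choice: a video with harmless visuals but abusive speech still scores high. A real system might weight modalities differently or look at how they interact.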

Types of Video Content

Video content on digital platforms comes in many forms, each presenting unique challenges for content moderation. User-generated content is at the heart of most social media platforms, where individuals upload and share their own videos with a global audience. This type of content is dynamic and unpredictable, making it essential for platforms to have robust moderation tools in place to filter out inappropriate content such as hate speech, violence, or adult content.

Live streams are another rapidly growing media type, allowing users to broadcast video in real time. Moderating live streams requires specialized tools that can detect and respond to violations as they happen, since inappropriate content can reach viewers instantly and cause immediate harm to a platform’s reputation.
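One simple way to react to a live stream as it happens is to look at a rolling window of recent frame scores rather than at single frames. The sketch below assumes an upstream model already assigns each sampled frame a risk score between 0 and 1. The class name, window size, and thresholds are hypothetical values chosen for illustration.

```python
from collections import deque


class LiveStreamMonitor:
    """Escalate a stream when enough of the most recent frames look risky."""

    def __init__(self, window: int = 5, threshold: float = 0.8, min_hits: int = 3):
        self.threshold = threshold            # score at which a frame counts as risky
        self.min_hits = min_hits              # risky frames needed inside the window
        self._recent = deque(maxlen=window)   # oldest entries drop off automatically

    def observe(self, score: float) -> bool:
        """Record the newest frame score; return True if the stream should be escalated."""
        self._recent.append(score >= self.threshold)
        return sum(self._recent) >= self.min_hits
```

Requiring several risky frames within a short window reduces the chance that one misclassified frame interrupts a legitimate broadcast, at the cost of reacting slightly later.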

Pre-recorded videos, in contrast, offer platforms the opportunity to review and moderate content before it goes public. This allows for more thorough analysis and the ability to apply moderation criteria consistently, reducing the risk of unwanted content slipping through.

No matter the type – user-generated, live, or pre-recorded – effective content moderation is essential to ensure that all videos comply with platform rules and community standards. By implementing strong moderation processes, platforms can protect their users, maintain a positive environment, and uphold their brand reputation in the competitive world of social media.

Types of Video Moderation

Automated content moderation relies on AI-powered tools that analyze videos for inappropriate content, such as violence, nudity, hate speech, or misinformation. These systems use machine learning algorithms to scan visuals, speech, and text overlays for violations of platform guidelines. While AI moderation is highly scalable and efficient, it often struggles with understanding context, sarcasm, and nuanced content.

Human moderation involves trained moderators manually reviewing flagged content to ensure compliance with community standards. Unlike AI, human reviewers can assess tone, context, and intent, making them essential for cases that require deeper understanding. However, this approach is labor-intensive and costly, and repeated exposure to disturbing material can take a toll on moderators’ mental health.

Community moderation is another method where users help flag inappropriate content for review. This is a cost-effective strategy that encourages user participation, but it heavily depends on active engagement from the community and may result in delays before action is taken.
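As a rough illustration of community flagging, the sketch below queues a video for review once enough different users have reported it. The class name and the default threshold of three reporters are assumptions made for the example; platforms choose their own rules.

```python
from collections import defaultdict


class FlagTracker:
    """Count distinct reporters per video and signal when review is needed."""

    def __init__(self, review_threshold: int = 3):
        self.review_threshold = review_threshold
        self._reporters = defaultdict(set)  # video_id -> set of reporting user_ids

    def flag(self, video_id: str, user_id: str) -> bool:
        """Record a flag; return True once the video has enough distinct reporters."""
        self._reporters[video_id].add(user_id)
        return len(self._reporters[video_id]) >= self.review_threshold
```

Counting distinct users rather than raw flags means a single person cannot push a video into review by reporting it repeatedly. It also shows why this method depends on engagement: a video that few people watch may never reach the threshold.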

The hybrid approach combines AI automation with human oversight, leveraging the strengths of both. AI handles bulk moderation and flags potential violations, while human reviewers refine the results, ensuring accuracy. Most companies opt for this method to strike a balance between efficiency and reliability. Additionally, human moderators serve a dual purpose. Beyond making decisions where AI falls short, they provide valuable feedback to improve machine learning models over time. By labeling edge cases and correcting AI mistakes, human reviewers help train AI systems, making them more effective and reducing reliance on manual intervention in the long run.
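The hybrid flow described above can be sketched as a routing step plus a feedback step. This is a minimal illustration that assumes the AI model returns a single violation score between 0 and 1. The thresholds, function names, and record fields are hypothetical.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


def route(score: float, remove_at: float = 0.95, review_at: float = 0.5) -> Action:
    """Act automatically on clear cases and send uncertain ones to a person."""
    if score >= remove_at:
        return Action.REMOVE
    if score >= review_at:
        return Action.HUMAN_REVIEW
    return Action.APPROVE


def record_review(labels: list[dict], video_id: str, model_score: float,
                  is_violation: bool) -> None:
    """Store the reviewer's verdict so it can later be used as training data."""
    labels.append({
        "video_id": video_id,
        "model_score": model_score,
        "is_violation": is_violation,
    })
```

For example, `route(0.6)` returns `Action.HUMAN_REVIEW`, and the reviewer's decision is then stored with `record_review`. Over time, these labeled edge cases are the feedback that lets the model handle more content without manual intervention.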

With advancements in AI-powered moderation, companies are increasingly relying on automation to manage video content at scale. A prime example is TikTok, where the percentage of videos removed by automation has steadily increased, reflecting the platform’s growing dependence on AI tools to maintain content integrity.


Why is Video Moderation Important?

Moderation prevents exposure to harmful content, including violence, exploitation, hate speech, and misinformation, fostering a safe online environment. Platforms must also comply with regulations such as the GDPR, COPPA, and the EU Digital Services Act, along with other regional laws. Failure to comply can lead to fines, legal issues, or even platform shutdowns. Unmoderated content can damage a platform’s reputation, causing user distrust, advertiser pullbacks, and potential bans from app stores. Additionally, video moderation helps filter spam, scams, and inappropriate monetized content, ensuring advertisers remain confident in platform integrity. Moderation also extends to the user-generated posts, messages, reviews, and comments that surround videos, which may be limited or removed if they violate platform policies. A well-moderated platform promotes a positive and engaging user experience, encouraging content creators to stay active and attract more users.

Why Video Moderation is More Difficult Than Image or Text Moderation

Video content moderation is particularly challenging because each second of video typically contains 24 to 60 frames, which works out to roughly 1,440 to 3,600 frames per minute. Unlike static images, video requires analysis of moving visuals, background context, speech, and text overlays, making AI moderation more resource-intensive. Moderating live streams adds another layer of complexity, as content must be analyzed and filtered in real time while the stream is being broadcast to viewers. Cost also scales with the number of frames per second being analyzed, which makes AI moderation expensive and human review even more time-consuming.
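The arithmetic behind these numbers is easy to check. The short sketch below computes how many frames a video contains and how many would be analyzed if only a fixed number of frames per second were sampled. Sampling at a lower rate is an assumption introduced here to show how cost scales, and the function names are illustrative.

```python
def frames_per_minute(fps: int) -> int:
    """Total frames in one minute of video at the given frame rate."""
    return fps * 60


def total_frames(duration_s: int, fps: int) -> int:
    """Total frames in a video of the given length."""
    return duration_s * fps


def sampled_frames(duration_s: int, sample_fps: int) -> int:
    """Frames analyzed when only sample_fps frames are taken from each second."""
    return duration_s * sample_fps


# A 10-minute video recorded at 30 frames per second
ten_minutes = 10 * 60
print(frames_per_minute(24), frames_per_minute(60))  # 1440 3600
print(total_frames(ten_minutes, 30))                 # 18000
print(sampled_frames(ten_minutes, 1))                # 600
```

Analyzing one frame per second instead of all 30 cuts the workload for this video from 18,000 frames to 600, but it also raises the risk of missing harmful content that appears only briefly.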

Additionally, bad actors constantly evolve tactics to bypass moderation filters, such as altering videos, changing speech patterns, or inserting harmful content subtly, further complicating the moderation process.

Artificial Intelligence in Video Moderation

Artificial intelligence has changed how video moderation is done. By leveraging machine learning, platforms can analyze vast amounts of video content quickly, identifying potential issues such as hate speech, violence, or adult content. AI-powered moderation tools are designed to detect unwanted or offensive content in real time, flagging videos that may violate platform guidelines.

Once a video is flagged, human moderators step in to review the content and make a final decision. This combination of artificial intelligence and human review ensures that moderation is both efficient and nuanced, as human moderators can interpret context and intent that machines might miss. The use of AI not only speeds up the moderation process but also helps protect users from exposure to harmful or offensive content, safeguarding the platform’s reputation.

As moderation tools continue to evolve, the partnership between machine learning and human moderators will remain essential for effective content moderation, allowing platforms to detect and address potential issues before they escalate.

Best Practices for Effective Video Moderation

An effective video moderation strategy involves a combination of AI and human review to balance scalability and accuracy. AI is excellent for bulk moderation, but human oversight ensures that content is interpreted correctly in complex situations. Clear content guidelines should be established to help both AI and moderators make consistent enforcement decisions. Transparency in moderation is also key, ensuring that users understand why content is removed or flagged and providing avenues for appeals or clarifications.

To maintain an efficient moderation system, platforms should invest in regularly updating their AI models to adapt to evolving content trends and moderation challenges. Staying ahead of emerging threats, such as new tactics used by bad actors to bypass filters, is crucial. By continuously monitoring trends and refining moderation policies, platforms can create safer environments for users and content creators alike.

Case Studies and Examples

Many leading companies have successfully implemented video moderation solutions to protect their platforms and users from inappropriate content. For example, YouTube employs a sophisticated combination of AI-powered moderation tools and human reviewers to detect and remove videos containing hate speech, violence, or other offensive content. This approach allows YouTube to efficiently moderate millions of videos while ensuring that nuanced cases receive careful human review.

Another example comes from a social media platform that integrated a video moderation API to monitor live streams. By using advanced moderation tools, the platform was able to detect and flag hate speech in real-time, preventing offensive content from reaching its users and maintaining a safe online community.

These case studies highlight the importance of effective content moderation in today’s digital landscape. By leveraging the right tools and strategies, platforms can protect their users, uphold their reputation, and foster positive online communities.

Safeguarding Platforms and Users with Imagga’s Content Moderation Solution

As harmful, misleading, or inappropriate videos continue to pose risks to user safety and platform integrity, companies need reliable solutions that can scale with demand while ensuring compliance and trust.

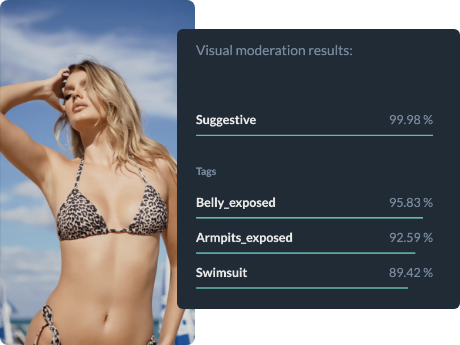

Imagga’s AI-powered content moderation solution offers an advanced, scalable approach to tackling the challenges of video moderation. By leveraging cutting-edge machine learning models, Imagga automates the detection of harmful content in video streams, ensuring a safer and more compliant platform.

If your platform relies on user-generated content, now is the time to strengthen your moderation strategy. Imagga’s intelligent moderation tools provide the precision, efficiency, and scalability needed to keep your platform safe and trusted in an evolving digital landscape.