Imagga's New Adult Content Detection Model: Setting a New Standard in Content Moderation

Many companies across various industries, including dating platforms, online marketplaces, advertising agencies, and content hosting services, have been using Imagga's Adult Content Detection Model to detect NSFW (Not Safe For Work) visuals and keep their platforms secure and user-friendly.

We are excited to announce that we've significantly improved the model, enhancing its accuracy and its ability to provide nuanced content classification. The new model achieves 98% recall for explicit content detection and 92.5% overall accuracy, outperforming competitor models by up to 26%.

In this blog post, we'll explore the advancements in the Adult Content Detection Model (previously called the NSFW model), demonstrate how it outperforms the previous version and competitor models, and discuss the tangible benefits it offers to businesses that need effective and efficient content moderation.

Key features of Imagga’s Adult Content Detection model:

- Exceptional model performance in classifying adult content

- Real-time processing of large volumes of images and short videos

- Integrates into existing systems with a few lines of code

Evolution of the Model

We significantly expanded and diversified the training dataset for the new model. The new model has been trained and tested on millions of images, achieving extensive coverage and diversity of adult content. In addition, a large number of visually similar images belonging to different categories were manually collected and annotated. This meticulous process contributes to the model's outstanding performance in differentiating between explicit, suggestive, and safe images, even in difficult cases.

The dataset has been refined by applying state-of-the-art techniques for data enrichment, cleaning, and selection. By enhancing the quality and diversity of our training data, we've greatly improved the model's ability to detect nuanced visuals. As a result, we have achieved much better results compared to the previous version of the model.

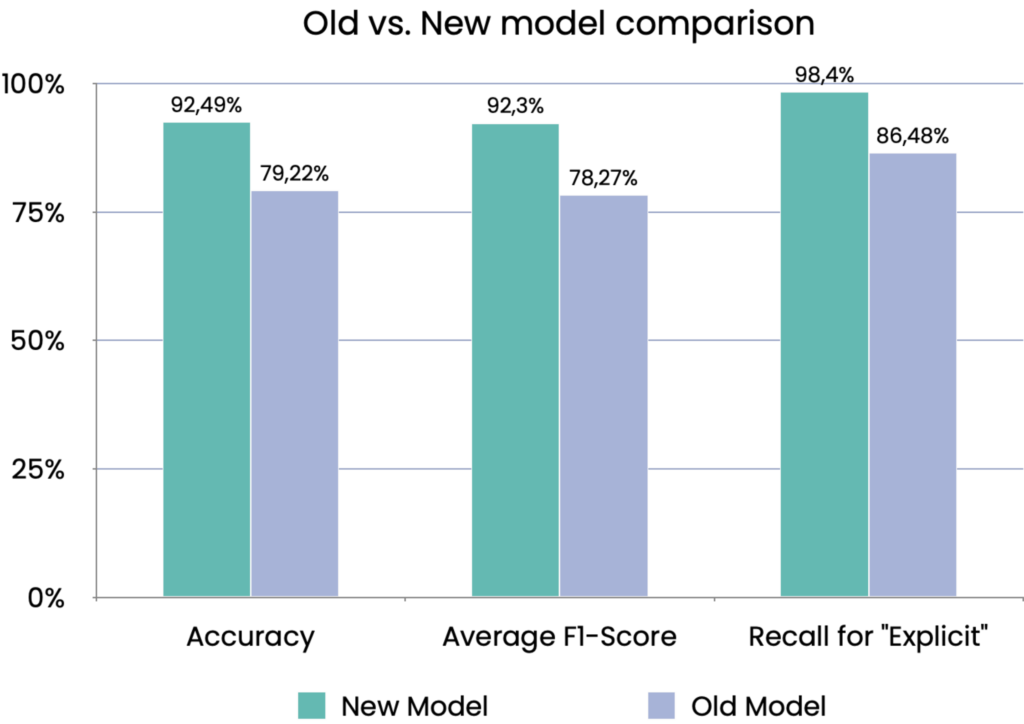

The chart above compares the performance of Imagga's new Adult Content Detection Model with that of the previous version.

The chart highlights substantial improvements across key metrics.

The new version of our Adult Content Detection Model shows a marked improvement in accuracy and overall performance, meaning it is now better at correctly identifying content in each category. One of the most important improvements is in detecting "explicit" content, where recall increased by 12.72%.

What does this mean? Recall measures the share of actual explicit images that the model correctly flags. Higher recall makes the model more sensitive to potentially explicit content: it is more likely to catch borderline or uncertain cases, so even subtle or ambiguous explicit material gets flagged.

In practice, this makes the model much more effective at detecting a higher percentage of explicit content, reducing the risk of inappropriate material bypassing moderation. This improvement is a key step in helping platforms ensure a safer and more controlled environment for their users.
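To make the recall and precision trade-off concrete, the sketch below flags an image as explicit when a confidence score reaches a threshold. The scores are hypothetical, and the threshold sweep only illustrates what "more sensitive" means; it is not how Imagga's model was improved.

```python
def recall_and_precision(scores, is_explicit, threshold):
    """Flag images with score >= threshold as explicit, then compute recall and precision."""
    flagged = [s >= threshold for s in scores]
    tp = sum(f and e for f, e in zip(flagged, is_explicit))        # explicit and flagged
    fn = sum((not f) and e for f, e in zip(flagged, is_explicit))  # explicit but missed
    fp = sum(f and not e for f, e in zip(flagged, is_explicit))    # flagged but not explicit
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return recall, precision


# Hypothetical scores: the first four images are truly explicit, the last three are not.
scores = [0.95, 0.80, 0.55, 0.45, 0.60, 0.30, 0.10]
is_explicit = [True, True, True, True, False, False, False]

for t in (0.7, 0.4):
    r, p = recall_and_precision(scores, is_explicit, t)
    print(f"threshold={t}: recall={r:.2f}, precision={p:.2f}")
# threshold=0.7: recall=0.50, precision=1.00
# threshold=0.4: recall=1.00, precision=0.80
```

Lowering the threshold catches the borderline explicit images (higher recall) at the cost of one false positive (lower precision).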

Benchmarking Against Competitor Models

Test Data and Methodology

To ensure a fair and unbiased comparison, we tested our model against leading competitors, namely Amazon Rekognition (AWS), Google Vision, and Clarifai, on a carefully curated benchmark dataset.

The images in the test dataset were collected from a variety of sources. We took care not to include samples from the same sources as the model's training data, so that the results are not biased toward the training data. The current release's benchmark includes no cartoons or drawings. The model does, however, respond well to synthetic images that closely resemble real images.
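A source-disjoint test set can be enforced with a simple filter, sketched below. How Imagga defined a "source" is not stated; the sketch assumes every image has a URL and treats its host name as the source, and the function names are hypothetical.

```python
from urllib.parse import urlparse


def source_of(url: str) -> str:
    """Treat the host name as an image's source (an illustrative assumption)."""
    return urlparse(url).netloc.lower()


def exclude_training_sources(test_urls, train_urls):
    """Keep only test images whose source contributed no training images."""
    train_sources = {source_of(u) for u in train_urls}
    return [u for u in test_urls if source_of(u) not in train_sources]


train_pool = ["https://a.example/1.jpg", "https://B.example/2.jpg"]
test_pool = ["https://b.example/3.jpg", "https://c.example/4.jpg"]
print(exclude_training_sources(test_pool, train_pool))  # ['https://c.example/4.jpg']
```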

The benchmark dataset includes a wide range of nuanced and complex scenarios, beyond straightforward cases. The vast majority of images require the model to understand subtle distinctions in context, such as close interactions between people or potentially ambiguous body language and actions. These nuanced situations often blur the boundaries between suggestive and explicit content or between suggestive and safe content, providing a challenging test for content moderation.

To evaluate the models' performance comprehensively, we tested them on images taken from different perspectives, of varying quality, and in hard-to-detect cases. The test dataset deliberately includes people of different races, ages, and genders to minimize bias and to verify that the model performs accurately and fairly across diverse demographic groups.
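One way to check that performance holds across demographic groups is to break accuracy down per group and look at the largest gap. The sketch assumes each test image carries a group annotation; the group names are placeholders, and the benchmark's actual evaluation procedure is not described in this post.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += truth == pred  # bool counts as 0 or 1
    return {g: correct[g] / total[g] for g in total}


def largest_gap(per_group):
    """Accuracy difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


records = [
    ("group_a", "safe", "safe"), ("group_a", "explicit", "explicit"),
    ("group_b", "safe", "safe"), ("group_b", "explicit", "suggestive"),
]
per_group = accuracy_by_group(records)
print(per_group, largest_gap(per_group))  # {'group_a': 1.0, 'group_b': 0.5} 0.5
```

A large gap would suggest the model serves some groups worse than others, which is what a diverse test set is meant to expose.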

The benchmark dataset is available upon request. To receive it, please contact us at sales [at] imagga [dot] com.

Dataset Composition

The dataset contains 2904 images, of which:

- 1104 with explicit content

- 952 with suggestive content

- 765 with safe content

Category Definitions

- Explicit: Sexual activities, exposed genitalia, exposed female nipples, exposed buttocks or anus

- Suggestive: Exposed male nipples, partially exposed female breasts or buttocks, bare back, significant portions of legs exposed, swimwear, or underwear

- Safe: Content not falling into the above categories

Competitor Models Categorization

Google Vision

- Rates the likelihood of explicit content using six likelihood categories, ranging from "very unlikely" to "very likely"

- For this comparison, any image rated "possible" or higher was considered explicit

- Does not have a "suggestive" category
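The Google Vision rule above can be expressed as a small mapping. The enum below is a local mirror of the SafeSearch likelihood values in their documented order, so the sketch runs without the Google Cloud client; treating `UNKNOWN` as "safe" is our assumption.

```python
from enum import IntEnum


class Likelihood(IntEnum):
    """Local mirror of Google Cloud Vision's SafeSearch likelihood values, in order."""
    UNKNOWN = 0
    VERY_UNLIKELY = 1
    UNLIKELY = 2
    POSSIBLE = 3
    LIKELY = 4
    VERY_LIKELY = 5


def google_to_label(adult_rating: str) -> str:
    """Map a SafeSearch 'adult' rating such as "LIKELY" to a benchmark label.

    POSSIBLE or higher counts as explicit. Google Vision has no suggestive
    category, so every lower rating (including UNKNOWN) falls back to "safe".
    """
    return "explicit" if Likelihood[adult_rating] >= Likelihood.POSSIBLE else "safe"


print([google_to_label(r) for r in ("UNLIKELY", "POSSIBLE", "VERY_LIKELY")])
# ['safe', 'explicit', 'explicit']
```

Because the mapping has only two outcomes, any suggestive image rated below "possible" necessarily lands in "safe".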

Amazon Rekognition (AWS)

- Returns detailed subcategories, which we aggregated to align with our definitions of "explicit" and "suggestive"
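Aggregating Rekognition's output might look like the sketch below. It takes a dictionary shaped like a `DetectModerationLabels` response (a `ModerationLabels` list whose entries carry `Name`, `ParentName`, and `Confidence`). Which labels map to "explicit" or "suggestive" is our hypothetical choice, because the benchmark's exact aggregation is not published and label names differ between Rekognition taxonomy versions; the 50% confidence cutoff is also an assumption.

```python
# Hypothetical category sets; check the label names your Rekognition model version returns.
EXPLICIT_LABELS = {"Explicit Nudity", "Explicit"}
SUGGESTIVE_LABELS = {"Suggestive", "Swimwear or Underwear"}


def aws_to_label(response, min_confidence=50.0):
    """Collapse a DetectModerationLabels-style response into one benchmark label."""
    found = set()
    for label in response.get("ModerationLabels", []):
        if label["Confidence"] < min_confidence:
            continue  # ignore low-confidence detections
        # Match both the label and its parent, so a subcategory counts toward its category.
        found.add(label["Name"])
        if label.get("ParentName"):
            found.add(label["ParentName"])
    if found & EXPLICIT_LABELS:
        return "explicit"  # explicit takes priority over suggestive
    if found & SUGGESTIVE_LABELS:
        return "suggestive"
    return "safe"


resp = {"ModerationLabels": [
    {"Name": "Female Swimwear Or Underwear", "ParentName": "Suggestive", "Confidence": 91.0},
]}
print(aws_to_label(resp))  # suggestive
```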

Clarifai

- Classifies adult content into the same three categories as Imagga's API: "safe", "suggestive", and "explicit"

Performance Comparison

Imagga's Model

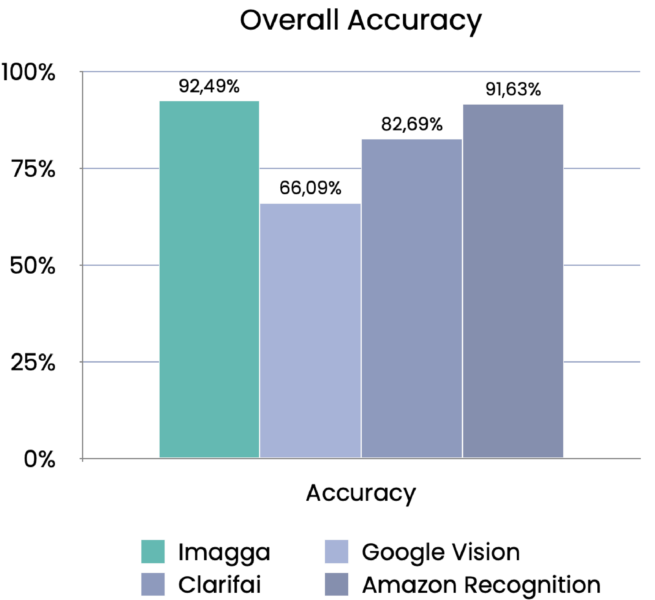

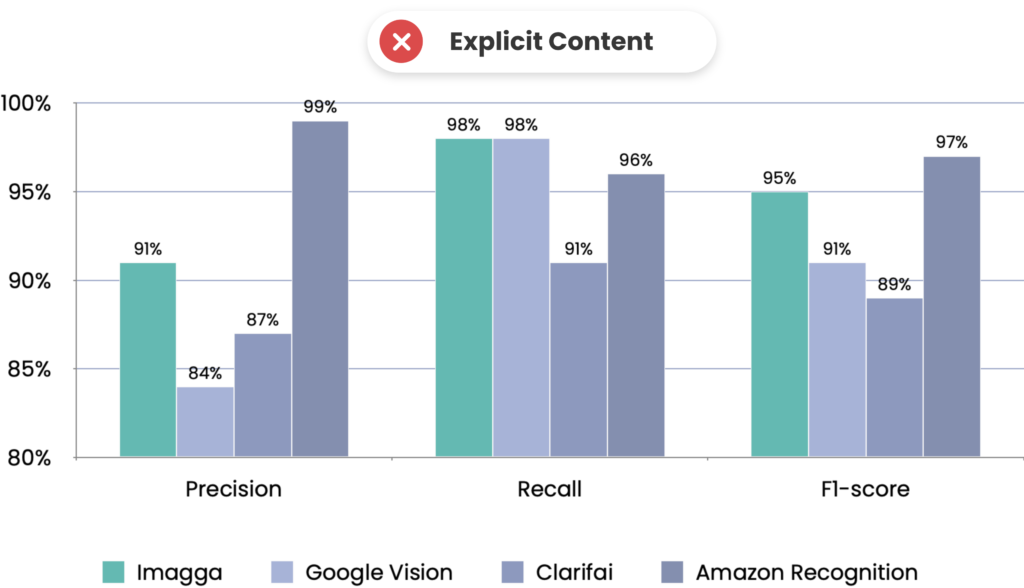

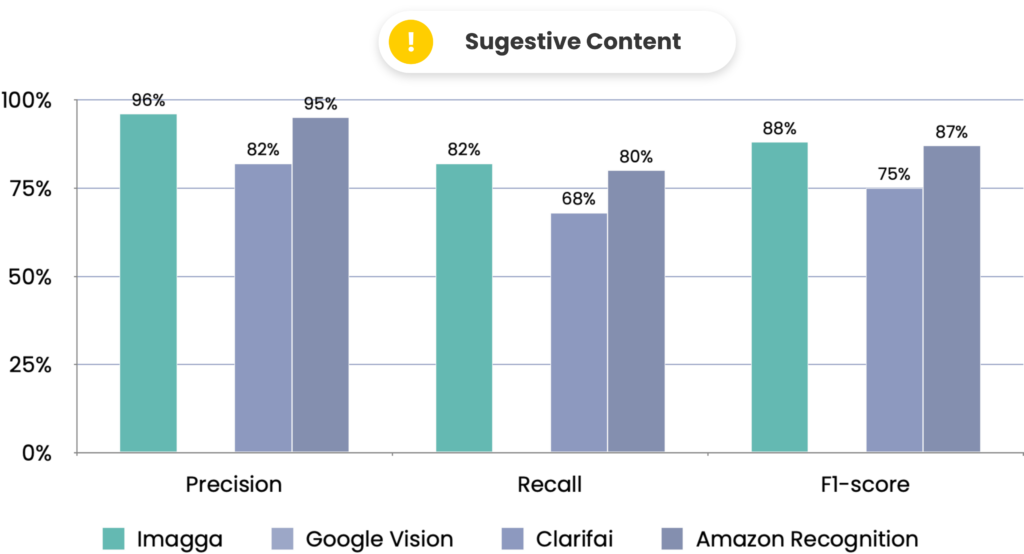

As the figures above show, Imagga's latest adult content detection model achieved the highest overall accuracy, at 92.45%. It tied with Google Vision for the highest recall in the "explicit" category, so most explicit content is correctly identified. It also achieved the highest F1-scores across all categories, indicating a balanced trade-off between precision and recall, and it outperforms competitors in the "suggestive" and "safe" categories.
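Accuracy, precision, recall, and F1 can all be derived from the same (true label, predicted label) pairs. The sketch below shows the standard per-class computation for the three benchmark categories; the tiny label lists in the example are made up and do not reproduce the benchmark numbers.

```python
from collections import Counter

LABELS = ("explicit", "suggestive", "safe")


def evaluate(y_true, y_pred, labels=LABELS):
    """Return overall accuracy and per-class precision, recall, and F1."""
    pairs = Counter(zip(y_true, y_pred))  # (true, predicted) -> count
    report = {}
    for label in labels:
        tp = pairs[(label, label)]
        fp = sum(n for (t, p), n in pairs.items() if p == label and t != label)
        fn = sum(n for (t, p), n in pairs.items() if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # F1 is the harmonic mean of precision and recall.
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1}
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return accuracy, report


y_true = ["explicit", "explicit", "suggestive", "suggestive", "safe", "safe"]
y_pred = ["explicit", "suggestive", "suggestive", "safe", "safe", "safe"]
accuracy, report = evaluate(y_true, y_pred)
print(f"accuracy={accuracy:.3f}")
for label, m in report.items():
    print(label, {k: round(v, 3) for k, v in m.items()})
```

F1 rewards a model only when precision and recall are both high, which is why it is a useful single number for comparing models that trade one against the other.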

Amazon Rekognition

Amazon Rekognition achieved slightly higher precision in the "explicit" category, meaning fewer false positives. However, its recall is lower than Imagga's, so it potentially misses more explicit content. It is also less effective in the "suggestive" and "safe" categories.

Clarifai

Clarifai showed moderate performance across categories but lagged behind Imagga and AWS. Its lower accuracy and F1-scores indicate room for improvement.

Google Vision

While Google Vision demonstrates high recall for "explicit" content, it does not offer a "suggestive" category aligned with our definitions, so it cannot distinguish between "explicit" and "suggestive" content. As a result, suggestive content is often misclassified as "safe" and cannot be filtered or tagged appropriately.

How Does Reliable Adult Content Detection Impact Online Platforms?

Robust and accurate adult content detection provides significant benefits to online platforms by ensuring a safer, more reliable user experience. By automatically identifying and filtering inappropriate images and videos, platforms can effectively protect users, especially minors, from harmful content. This not only helps safeguard the platform’s reputation but also fosters user trust and confidence in its commitment to safety. Additionally, with regulations around online content continuously evolving, having a detection system that meets compliance standards is critical. Relying on a provider with core expertise in this area further enhances reliability, allowing platforms to streamline moderation processes and focus on growth, knowing their content detection is both effective and expertly managed.

Upcoming Updates

- Classifying subcategories that identify the explicit action or subject

- Increasing the recall of the suggestive category for more accurate detection of gray-zone adult content

How to Get Started with Adult Content Detection?

Learn how to get started with the model with just a few lines of code.
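A minimal sketch of calling the model from Python using only the standard library. It assumes Imagga's v2 REST endpoint pattern `https://api.imagga.com/v2/categories/<categorizer_id>` with HTTP Basic authentication, and a response shaped like `{"result": {"categories": [{"confidence": ..., "name": {"en": ...}}]}}`; verify both against Imagga's API documentation before relying on them. The categorizer ID `adult_content` is the one given in the upgrade notes.

```python
import base64
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Assumed endpoint pattern; confirm against Imagga's API documentation.
API_BASE = "https://api.imagga.com/v2/categories"


def build_request(image_url, api_key, api_secret, categorizer_id="adult_content"):
    """Build a GET request for a categorizer, authenticated with HTTP Basic auth."""
    query = urlencode({"image_url": image_url})
    token = base64.b64encode(f"{api_key}:{api_secret}".encode()).decode()
    return Request(f"{API_BASE}/{categorizer_id}?{query}",
                   headers={"Authorization": f"Basic {token}"})


def top_label(payload):
    """Pick the highest-confidence category from an (assumed) response payload."""
    best = max(payload["result"]["categories"], key=lambda c: c["confidence"])
    return best["name"]["en"], best["confidence"]


def classify(image_url, api_key, api_secret):
    """Send the request and return (label, confidence); needs network access and real credentials."""
    with urlopen(build_request(image_url, api_key, api_secret), timeout=30) as resp:
        return top_label(json.load(resp))


# Offline example with a hand-written payload in the assumed response shape.
sample = {"result": {"categories": [
    {"confidence": 97.1, "name": {"en": "explicit"}},
    {"confidence": 2.5, "name": {"en": "suggestive"}},
    {"confidence": 0.4, "name": {"en": "safe"}},
]}}
print(top_label(sample))  # ('explicit', 97.1)
```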

How to Upgrade from the NSFW Model?

Upgrading to the new model is straightforward and can be done in two simple steps:

- Update the categorizer ID in your API calls: Replace “nsfw_beta” with “adult_content”.

- Adjust label handling, if applicable: to better reflect the data our model is trained on and to improve moderation outcomes, we've renamed the classification categories "nsfw", "underwear", and "safe" to "explicit", "suggestive", and "safe", respectively. If your code handles the labels, change "nsfw" to "explicit" and "underwear" to "suggestive"; the "safe" label remains unchanged.
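Both upgrade steps can be captured in a small helper. The categorizer IDs and label renames come from the steps above; `migrate_endpoint`, `migrate_rules`, and the `/categories/<id>` URL pattern are hypothetical illustrations of where such values typically appear in client code.

```python
# Values from the upgrade steps: new categorizer ID and renamed labels.
OLD_CATEGORIZER, NEW_CATEGORIZER = "nsfw_beta", "adult_content"
LABEL_RENAMES = {"nsfw": "explicit", "underwear": "suggestive", "safe": "safe"}


def migrate_endpoint(url):
    """Swap the categorizer ID in a URL that follows a hypothetical /categories/<id> pattern."""
    return url.replace(f"/categories/{OLD_CATEGORIZER}", f"/categories/{NEW_CATEGORIZER}")


def migrate_rules(rules):
    """Rewrite a {label: action} mapping written against the old label names."""
    return {LABEL_RENAMES.get(label, label): action for label, action in rules.items()}


old_rules = {"nsfw": "block", "underwear": "review", "safe": "allow"}
print(migrate_rules(old_rules))  # {'explicit': 'block', 'suggestive': 'review', 'safe': 'allow'}
```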

Important: The NSFW Beta model will remain active in the API for the next three months before it is deprecated.

Ready for a Reliable Content Moderation Solution?

If you’re seeking a robust, advanced solution for content moderation, Imagga's enhanced Adult Content Detection Model is here to meet your needs. Our technology is designed with precision and adaptability in mind, and we’d love to learn more about your specific challenges.

Tell us which types of content are most difficult to manage or where you see existing detection models falling short.

Synthetic Data for Content Moderation – Solution or Threat?

In this blog post, we explore the benefits and risks associated with using synthetic data to develop Image Recognition models and share our approach to using Generative AI (GenAI) to augment and de-bias datasets.

In today's digital world, where harmful content can be created and shared quickly and widely, the need for effective content moderation is urgent and complex. However, there is a challenge: AI systems used for content moderation need large, diverse, and high-quality training datasets to work well, and gathering such data is complicated by ethical, legal, and practical issues. Online content comes in many different styles, contexts, and cultural meanings, making it hard to capture every type of harmful or inappropriate content. At the same time, strict privacy laws and ethical guidelines limit how we can collect and use sensitive data, like images of violence or explicit material.

This creates a dilemma: for AI models to effectively moderate content, they need access to comprehensive datasets that cover the wide range of content found online. However, getting this data is nearly impossible without violating privacy, facing legal issues, or introducing bias. Because of this, the industry struggles to balance the need for comprehensive data with the responsibility to protect privacy and ensure fairness. This is where synthetic data comes into play, offering a promising but not entirely risk-free solution to these limitations.

What is good data?

Training AI models requires data that meets several essential criteria to ensure effectiveness, reliability, and fairness. The data must be relevant to the specific task, high-quality, and representative of the scenarios the AI will encounter in real-world applications. For example, training an AI model to recognize objects in CCTV (closed-circuit television, i.e., video surveillance) footage requires datasets that mimic CCTV images rather than high-quality photos from professional cameras.

Additionally, good data must be diverse, covering a wide range of possible cases, including rare or unusual scenarios, to enable the AI to generalize well across different situations.

The data must be balanced and free from bias to avoid skewed outcomes, well-labeled for clarity, legally and ethically sourced, and kept up to date to reflect current trends and environments.
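A first, coarse check for balance is to compare class counts against an even split. The sketch flags classes with fewer than half the samples they would have under a uniform distribution; the 0.5 tolerance is an arbitrary illustrative threshold, and real bias audits also examine attributes such as demographics, not just labels.

```python
from collections import Counter


def class_balance(labels, tolerance=0.5):
    """Return each class's share and the classes below tolerance x the uniform count."""
    counts = Counter(labels)
    total = sum(counts.values())
    expected = total / len(counts)  # count per class if all classes were equally represented
    shares = {c: n / total for c, n in counts.items()}
    underrepresented = sorted(c for c, n in counts.items() if n < tolerance * expected)
    return shares, underrepresented


labels = ["safe"] * 8 + ["explicit"] * 3 + ["suggestive"] * 1
shares, flagged = class_balance(labels)
print(flagged)  # ['suggestive']
```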

Meeting all these criteria is fundamental to developing AI models that are robust, fair, and effective.

The Paradox of Data Collection for Content Moderation AI

While it's clear that high-quality, diverse, and unbiased data is crucial for training effective AI models, achieving this standard presents a significant paradox, especially when it comes to training AI for content moderation. The challenge lies in the availability, complexity, and ethical dilemmas involved in gathering such comprehensive datasets.

Internet content is highly varied in style, context, and cultural significance, making it nearly impossible to capture every variation of what might be considered harmful or inappropriate. Furthermore, ethical and legal restrictions make it difficult to collect data involving sensitive or potentially harmful content, such as images of violence or explicit material. Even when data is available, privacy concerns and regulations like GDPR often limit its use.

Hence the paradox: the very conditions needed to train a content moderation AI effectively are almost impossible to meet in practice. As a result, the industry must look for innovative approaches, such as synthetic data, to bridge the gap between what's needed and what's realistically achievable.

Synthetic Data to the Rescue

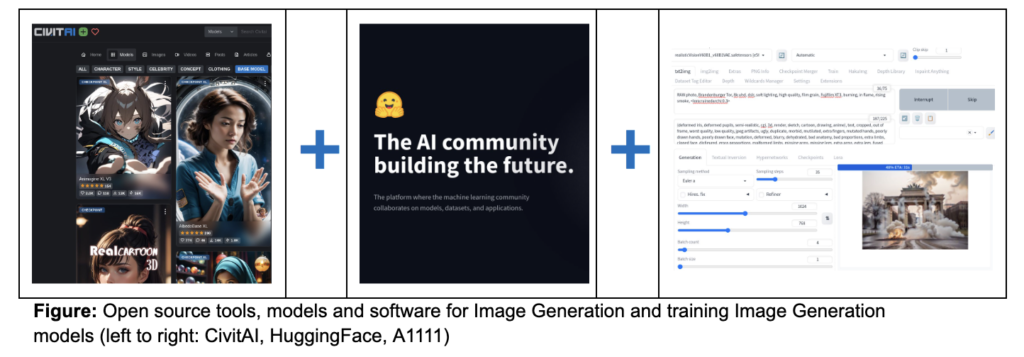

The rise of GenAI has revolutionized how synthetic images are created for training and evaluating AI models. With innovations like Stable Diffusion, it is now possible to generate highly realistic and diverse synthetic images that closely mimic real-world content. These technologies are evolving continuously: Stable Diffusion XL (SDXL) improves image realism, and SDXL Turbo speeds up image generation. Other innovations, such as LoRA (Low-Rank Adaptation), make fine-tuning more flexible and efficient by reducing computational demands.
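LoRA's efficiency comes from freezing a pretrained weight matrix W of size d × k and training only a low-rank update ΔW = BA, where B is d × r, A is r × k, and the rank r is much smaller than d and k. The arithmetic below compares trainable parameters for a single layer; the 768 × 768 size and the ranks are illustrative, not taken from any model Imagga used.

```python
def lora_trainable_params(d, k, r):
    """Parameters LoRA trains for one d x k weight: B (d x r) plus A (r x k)."""
    return r * (d + k)


def full_finetune_params(d, k):
    """Parameters updated when the whole d x k weight matrix is fine-tuned."""
    return d * k


d = k = 768  # illustrative projection size
for r in (4, 16):
    lora, full = lora_trainable_params(d, k, r), full_finetune_params(d, k)
    print(f"r={r}: {lora} vs {full} trainable parameters ({lora / full:.2%})")
# r=4: 6144 vs 589824 trainable parameters (1.04%)
# r=16: 24576 vs 589824 trainable parameters (4.17%)
```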

Additional tools give users more control over the output of these models, such as ControlNet for guiding image composition and inpainting for editing specific parts of an image. Meanwhile, user-friendly software makes these technologies easier to apply: A1111 and ComfyUI for image generation, and kohya_ss for training.

A vibrant open-source community further accelerates the development and accessibility of these technologies. Developers frequently share their models on platforms like Hugging Face and Civitai, allowing others to leverage the latest breakthroughs in synthetic image generation.

This collaborative environment ensures that cutting-edge tools and techniques are available to a wide range of users, fostering rapid innovation and widespread adoption.

The Risks of Relying on Synthetic Data

While synthetic data presents an attractive solution, it is not without risks. One of the primary concerns is the potential for "model collapse," a phenomenon where AI models trained extensively on synthetic data begin to produce unreliable or nonsensical outputs due to the absence of real-world variability.

Synthetic data might not accurately capture subtle contextual elements, leading to poor performance in practical scenarios. There is also a risk of overfitting, where models become too specialized in recognizing synthetic patterns that do not generalize well to real-world situations.

The quality of synthetic data depends on the generative models used, which, if flawed, can introduce errors and inaccuracies.

One of the biggest challenges with visual synthetic data is ensuring that the generated images appear realistic and exhibit the same visual characteristics as real-world images. Often, humans can easily recognize that an image has been artificially generated because it differs in subtle but noticeable ways from real images.

This issue extends beyond the accurate generation of objects and contextual elements: it includes finer details at the pixel level, such as colors, noise, and texture. Furthermore, certain scenarios are particularly challenging to replicate. For instance, images captured with a wide-angle lens have specific distortions, and those taken in low-light conditions might show unique noise patterns or motion blur, all of which are difficult to mimic accurately in synthetic images.

To create synthetic data that accurately reflects the complex patterns and relationships found in real-world data, researchers must develop evaluation metrics that measure how closely the synthetic data matches it.

The Case for Synthetic Data in Content Moderation: De-biasing and Data Augmentation

Our engineering team has been utilizing these technologies within our tagging system, adapting their use to achieve specific outcomes. In some cases, it is beneficial to allow more flexibility in the underlying models to produce less controlled images. For example, by using text-to-image techniques, we can create a more balanced Image Recognition model by generating a diverse set of synthetic images that represent people of various races.

Figure: Result of the prompt "RAW photo, medium close-up, portrait, solder, black afro-american, man, military helmet , 8k uhd, dslr, soft lighting, high quality, film grain", with the race-related words altered.
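Race-varied prompts like the one in the figure can be produced by filling a single slot in an otherwise fixed template. The sketch below adapts the caption's prompt; the ethnicity terms and the round-robin batching are illustrative assumptions, not Imagga's documented pipeline.

```python
# Template adapted from the caption's prompt; {ethnicity} is the only part that varies.
TEMPLATE = ("RAW photo, medium close-up, portrait, soldier, {ethnicity}, man, military helmet, "
            "8k uhd, dslr, soft lighting, high quality, film grain")


def prompt_variants(ethnicities, template=TEMPLATE):
    """One text-to-image prompt per ethnicity term, everything else held fixed."""
    return [template.format(ethnicity=e) for e in ethnicities]


def balanced_batch(ethnicities, n):
    """Cycle through the variants so each term gets (nearly) the same number of prompts."""
    variants = prompt_variants(ethnicities)
    return [variants[i % len(variants)] for i in range(n)]


# Illustrative terms only.
for p in prompt_variants(["black afro-american", "east asian", "south asian"]):
    print(p)
```

Holding the rest of the prompt constant means the generated images differ mainly in the attribute being balanced.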

Successfully generating synthetic data involves several steps, including extensive experimentation and hyperparameter tuning, beyond just the training and image creation stages.

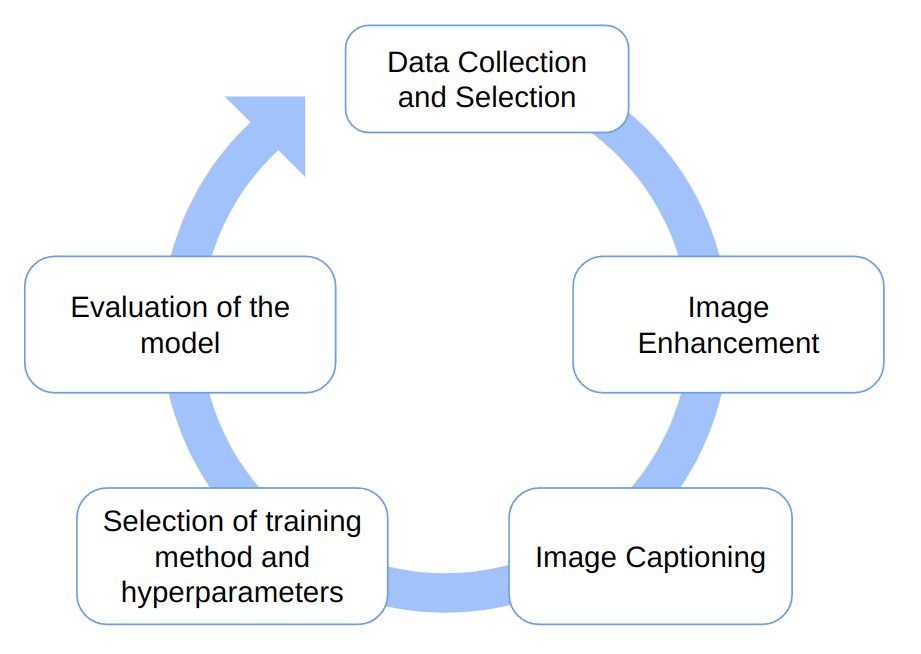

There are multiple methods for training a custom Generative AI model, such as DreamBooth, Textual Inversion, and LoRA, each with its own strengths and weaknesses. Following a well-defined process is essential to achieve optimal results.

This is the process our engineering team followed:

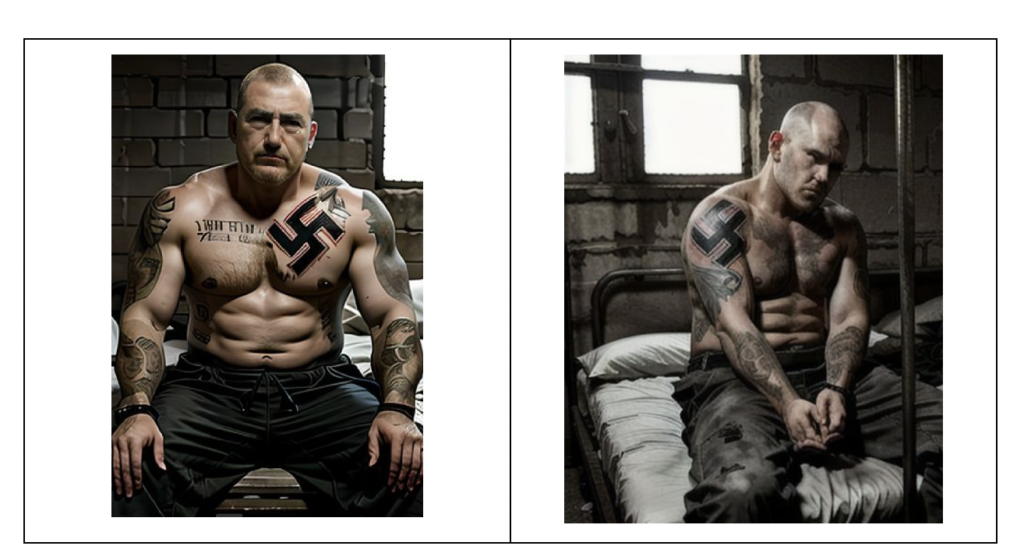

At Imagga, we leverage the latest GenAI technologies to expand our training datasets, enabling us to develop more effective and robust models for identifying radical content. This includes identifying notorious symbols like the Swastika, Totenkopf, SS Bolts, and flags or symbols associated with terrorist organizations such as ISIS, Hezbollah, and the Nordic Resistance Movement. Since this type of content cannot be generated using standard open-source models or services, custom training is required to build these models effectively.

To develop our Swastika Recognition model, we utilized the above-mentioned approach by starting with a set of around twenty images. Below is a subset of these images.

The resulting model was capable of generating images containing the Swastika in various appearances while preserving its distinct geometric characteristics.

The new model has been applied both independently and in combination with other models (e.g., generating scenes like prison cells). Furthermore, it has proven effective not only in text-to-image generation but also in image-to-image tasks using techniques like inpainting.

The dataset was further enriched with synthetic images generated using techniques such as outpainting, with LineArt serving as a controlling element.
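Outpainting is commonly run as inpainting on an enlarged canvas: the original image is placed on a bigger canvas, and a mask marks the empty border for the model to fill. The sketch below only prepares that layout and mask in plain Python; the generation step (for example, a Stable Diffusion inpainting model guided by a LineArt ControlNet) is not shown, and the function names and (left, top, right, bottom) box convention are our choices.

```python
def outpaint_layout(width, height, pad_left=0, pad_right=0, pad_top=0, pad_bottom=0):
    """Return the enlarged canvas size and the box where the original image is pasted."""
    canvas = (width + pad_left + pad_right, height + pad_top + pad_bottom)
    box = (pad_left, pad_top, pad_left + width, pad_top + height)  # (left, top, right, bottom)
    return canvas, box


def outpaint_mask(canvas, box):
    """Mask as rows of 0/255: 255 marks new pixels to generate, 0 keeps the original."""
    cw, ch = canvas
    left, top, right, bottom = box
    return [[0 if left <= x < right and top <= y < bottom else 255 for x in range(cw)]
            for y in range(ch)]


canvas, box = outpaint_layout(2, 2, pad_right=1)
print(canvas, box)                 # (3, 2) (0, 0, 2, 2)
print(outpaint_mask(canvas, box))  # [[0, 0, 255], [0, 0, 255]]
```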

While advancements in GenAI have enabled us to expand our training data and enhance the performance and generalization of our Image Recognition models, several challenges remain. One major challenge is that creating effective GenAI models and developing a reliable pipeline for image generation requires extensive experimentation. This process is not only complex but also resource-intensive, requiring considerable human effort and computational resources.

Another critical challenge is ensuring that the generated images do not degrade the performance of the Image Recognition model when applied to real-world data. This requires carefully balancing AI-generated images with real-world images in the training dataset. Without this balance, the model risks overfitting to the specific features of AI-generated images, resulting in poor generalization to actual images. Additionally, AI-generated images or their components may need to undergo domain adaptation to resemble real-world images more closely. This process can be particularly challenging because some image characteristics are not visible to the human eye and require more sophisticated adjustments.
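Capping the share of AI-generated images is one simple way to keep them from dominating the training set. In the sketch, the 30% default cap, the random sampling, and the function name are illustrative assumptions; the right ratio has to be found experimentally, for example by validating on real-world images only.

```python
import random


def mix_training_set(real, synthetic, max_synthetic_fraction=0.3, seed=0):
    """Combine real and synthetic samples, capping the synthetic share of the result.

    With R real samples and cap f, at most floor(f * R / (1 - f)) synthetic
    samples are kept, so that synthetic / total <= f.
    """
    if not 0 <= max_synthetic_fraction < 1:
        raise ValueError("max_synthetic_fraction must be in [0, 1)")
    rng = random.Random(seed)  # seeded for reproducible sampling and shuffling
    limit = int(max_synthetic_fraction * len(real) / (1 - max_synthetic_fraction))
    chosen = rng.sample(synthetic, min(limit, len(synthetic)))
    mixed = list(real) + chosen
    rng.shuffle(mixed)
    return mixed


real = [("real", i) for i in range(100)]
synthetic = [("synthetic", i) for i in range(100)]
mixed = mix_training_set(real, synthetic, max_synthetic_fraction=0.2)
print(len(mixed), sum(kind == "synthetic" for kind, _ in mixed))
```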

Conclusion

The use of synthetic data in training AI models for content moderation offers a potential solution to the limitations of real-world data collection, enabling the creation of robust and versatile models. However, the integration of synthetic data is not without its risks and challenges, such as maintaining realism, preventing bias, and ensuring that models generalize well to actual scenarios. Achieving a balance between synthetic and real-world data, along with continuous monitoring and refinement, is essential for the effective use of AI in content moderation.