What is Content Moderation?

Content moderation is the process of screening and filtering user-generated content (UGC) uploaded and shared online to determine whether it is appropriate for publication under predefined rules. The monitored content can include images, video, audio, text, and livestreams. While the categories of inappropriate content vary from platform to platform depending on the target audience, some content is unambiguously harmful or illegal and should be blocked no matter which website hosts it.

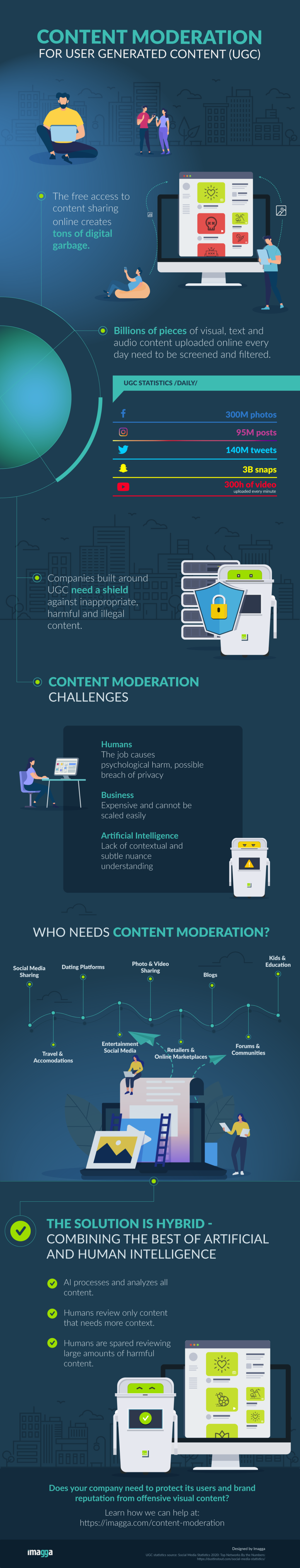

Billions of people have come online in the past decade, gaining unprecedented access to information. The ability to share ideas and opinions with a wide audience has grown businesses, raised money for charity, and helped bring about political change. At the same time, the UGC economy has made it easier to find and spread content that can contribute to harm, such as terror propaganda, abuse, and child nudity. Free access to content sharing online creates tons of “digital garbage”. Billions of users share text, image, video, and audio content online every day, and some of it is inappropriate, insulting, obscene, or outright illegal. Left unsupervised, user-generated content can cause major harm to brands and vulnerable groups.

Companies of every size and industry need content moderation as a shield against online bullies. Content moderation can be performed by people, by AI-powered visual recognition algorithms, or by hybrid solutions that combine humans and AI.

Why is Content Moderation So Hard?

The complexity of content moderation lies in the enormous volume of UGC and its exponential growth as existing platforms scale and new ones appear. Companies lack the processes and tools to handle the relentless pace at which content circulates on the web. While content volumes grow exponentially, content moderation teams grow at a slow, linear pace. Additionally, the content moderation job is excruciating and takes an emotional and mental toll on employees, causing people to quit and content moderation companies to leave the business.

The alternative solution, AI-powered visual recognition algorithms, can process and filter enormous amounts of content with high precision, but cannot deliver where cultural or other context is needed.

Additionally, there is almost no margin for error. Even if a given platform does a great job and filters out 99.99% of the inappropriate content, missing just 0.01%, the missed content (false negatives) can do significant damage by traumatizing the audience and hurting the company's reputation, while unrelated content that is incorrectly blocked (false positives) raises censorship concerns.
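To make these proportions concrete, here is a minimal Python sketch of the arithmetic. All inputs are illustrative assumptions rather than measured figures: a platform receiving 300 million uploads a day, a hypothetical 1% of them violating policy, 99.99% of violations caught, and a hypothetical 0.01% of legitimate uploads blocked by mistake. The function name `estimate_daily_errors` is made up for this example.

```python
def estimate_daily_errors(daily_uploads, violating_share, catch_rate, false_positive_rate):
    """Estimate how many items are missed or wrongly blocked per day.

    All arguments are assumptions supplied by the caller:
    - violating_share: fraction of uploads that break the rules
    - catch_rate: fraction of violating uploads that the filter blocks
    - false_positive_rate: fraction of legitimate uploads blocked by mistake
    """
    violating = daily_uploads * violating_share
    legitimate = daily_uploads - violating
    missed = violating * (1 - catch_rate)                # false negatives
    wrongly_blocked = legitimate * false_positive_rate   # false positives
    # Round to whole items to hide floating-point noise.
    return round(missed), round(wrongly_blocked)


# Hypothetical inputs, chosen only to illustrate the scale of the problem.
missed, wrongly_blocked = estimate_daily_errors(
    daily_uploads=300_000_000,
    violating_share=0.01,
    catch_rate=0.9999,
    false_positive_rate=0.0001,
)
print(f"Missed harmful items per day: {missed}")
print(f"Legitimate items blocked per day: {wrongly_blocked}")
```

Under these assumed numbers, roughly 300 harmful items would still get through every day and roughly 29,700 legitimate uploads would be blocked, which is why even a 0.01% error rate matters at this scale.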

User Generated Content Statistics

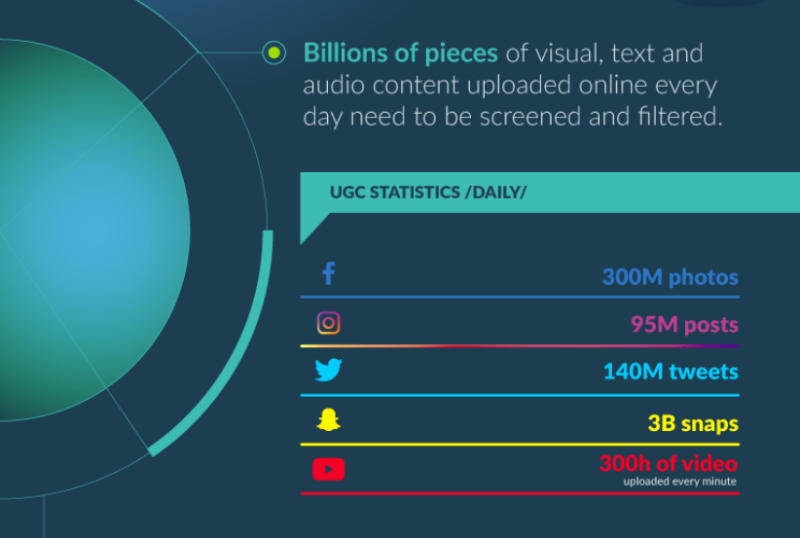

Billions of visual, text, and audio content pieces uploaded daily online need to be screened and filtered. Let’s take a look at the number of photos, videos, and tweets shared on some of the biggest social media platforms [1]:

- Facebook – 300 million photos uploaded daily

- Instagram – 95 million posts daily

- Twitter – 140 million tweets daily

- Snapchat – 3 billion snaps daily

- YouTube – 300 hours of video uploaded every minute

Who Needs a Content Moderation Solution?

The topic has become a top priority for the largest social platforms amid scandals over the harsh working conditions of human content moderators, escalating content-related crises, and growing public and political concern [2].

The reality is that any online platform that operates with UGC faces the same problem. Scrutiny of how companies moderate content will only increase as regulators around the globe pay closer attention to the subject.

Here’s a list of online platform types that need a solution:

- Social media giants like Facebook, YouTube, LinkedIn, Instagram, Pinterest, Snapchat, Twitter

- Travel and accommodation platforms such as Airbnb and TripAdvisor

- Dating platforms such as Tinder and Grindr

- Entertainment social media such as 9Gag and 4chan

- Artwork communities like DeviantArt and Behance

- Mobile photo and video sharing platforms such as Flickr and 500px

- Stock photography websites such as Unsplash and Pixabay

- Crowdfunding platforms such as Kickstarter and Indiegogo

- Retailers and online marketplaces such as eBay and Etsy

- Information-sharing platforms such as Reddit and Upworthy

- Blog hosting platforms such as Medium and Tumblr

- All blogs and websites that allow user comments in any form, including text

- Audio social networks such as SoundCloud

- Customer review websites such as Yelp

- All marketing campaigns relying on user-generated content

- All online communities and forum websites

Human Content Moderation

With the rise of social media, content moderation has become one of the largest and most secretive operational functions in the industry. An army of moderators all over the world screens the internet for violence, pornography, hate speech, and piles of other inappropriate or illegal content.

There are two major problems with moderation done by people.

The first is ethical. Evidence keeps piling up that people exposed daily to disturbing and hurtful content suffer serious psychological harm [3]. Burnout and desensitization are only the surface; PTSD, social isolation, and depression follow, according to Sarah T. Roberts, author of the book Behind the Screen [4]. The Verge published an investigation [5] into the working conditions at Cognizant in Phoenix, a top content moderation company that left the business later the same year [6]. Employees have been diagnosed with post-traumatic stress disorder.

The other problem is related to productivity and scale, but it should not and cannot be examined in isolation from the ethical aspect.

- Manual moderation is too expensive and too slow to support near-real-time and livestream moderation.

- Content volumes grow exponentially while moderation teams do so at a very slow linear pace.

- It’s hard to train large numbers of people and to change requirements dynamically, which makes it practically impossible to change policies or to add a new type of content to recognize overnight.

- People overlook things more easily than AI does. Once a specific piece of content has been flagged as inappropriate, the machine will never mistake it for appropriate again (unless explicitly corrected). This makes it possible to stop inappropriate content from spreading, which is a major problem for manual moderation.

- There is a potential privacy issue when real people examine the content of other real people.
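The point in the list above about a machine never mistaking flagged content for appropriate again can be sketched with a simple fingerprint registry. This is a minimal illustration under stated assumptions, not a description of any particular product: the class name `FlaggedContentRegistry` and its methods are hypothetical, and SHA-256 only matches byte-identical copies, whereas catching resized or re-encoded copies would typically need some form of perceptual hashing.

```python
import hashlib


class FlaggedContentRegistry:
    """Hypothetical registry that remembers content flagged as inappropriate."""

    def __init__(self):
        self._blocked = set()

    @staticmethod
    def fingerprint(data: bytes) -> str:
        # Exact-match fingerprint: identical bytes give an identical digest.
        return hashlib.sha256(data).hexdigest()

    def flag(self, data: bytes) -> None:
        """Record a piece of content as inappropriate."""
        self._blocked.add(self.fingerprint(data))

    def unflag(self, data: bytes) -> None:
        """Explicit correction: remove a piece of content from the registry."""
        self._blocked.discard(self.fingerprint(data))

    def is_blocked(self, data: bytes) -> bool:
        """Return True if this exact content was flagged before."""
        return self.fingerprint(data) in self._blocked


registry = FlaggedContentRegistry()
flagged_upload = b"bytes of an image a moderator flagged"  # placeholder content
registry.flag(flagged_upload)

# A later re-upload of the same bytes is recognized without a second review.
print(registry.is_blocked(flagged_upload))
print(registry.is_blocked(b"bytes of an unrelated image"))
```

Once an item is in the registry, every identical re-upload is blocked automatically until someone explicitly removes it with `unflag`, which mirrors the "unless explicitly corrected" caveat.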

AI Content Moderation

AI-powered visual recognition algorithms, or AI content moderation, hold the promise of addressing both the ethical and the economic side of the problem. A machine algorithm can do the heavy lifting, processing and categorizing gigantic amounts of data in real time. It can be trained to recognize visuals in any harmful content category, such as pornography, weapons and torture instruments, brawls and mass fights, infamous and vulgar symbols, horror and monstrous images, and many more, and it can achieve an extremely high precision rate.

AI-powered visual recognition algorithms are exceptionally strong at spotting weapons in images and videos, detecting nudity or partial nudity, and recognizing infamous people, landmarks, and symbols.

One area where AI models fall short is understanding the context of content and its distribution channels. A video showing an armed conflict between military and civilians might be broadcast by a TV channel as an important piece of news. But the same video can be viewed as harmful and removed when a user shares it with commentary applauding the violence, stripped of the context in which the footage was originally presented [3].

A Hybrid Solution: Combining the Best of Artificial and Human Intelligence

Combining human judgment with artificial intelligence holds enormous potential for handling the tons of violent, pornographic, exploitative, and illegal content generated online daily. Algorithms that process the majority of the content and send just a small fraction of it to human moderators can significantly reduce the workload in hundreds of thousands of psychologically harmful content moderator positions. Furthermore, moderation is vastly more productive, easier to scale, and less expensive for companies when most of the data is processed by AI.
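One common way to picture this split is confidence-based routing: the model decides the clear-cut cases on its own and forwards only the uncertain ones to people. The sketch below is a simplified assumption, not Imagga's actual implementation. The score scale, both thresholds, the queue names, and the functions `route` and `triage` are all hypothetical, and the scores are hard-coded where a real system would call a trained model.

```python
# Hypothetical thresholds on a 0.0-1.0 "harmfulness" score from a model.
APPROVE_AT_OR_BELOW = 0.10  # confident the content is fine
REJECT_AT_OR_ABOVE = 0.95   # confident the content is harmful


def route(harm_score: float) -> str:
    """Decide which queue a single item goes to, based on its score."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0.0 and 1.0")
    if harm_score >= REJECT_AT_OR_ABOVE:
        return "auto_reject"
    if harm_score <= APPROVE_AT_OR_BELOW:
        return "auto_approve"
    # Everything the model is unsure about goes to a human moderator.
    return "human_review"


def triage(scores: dict) -> dict:
    """Group item ids into queues; `scores` maps item id to harm score."""
    queues = {"auto_approve": [], "auto_reject": [], "human_review": []}
    for item_id, harm_score in scores.items():
        queues[route(harm_score)].append(item_id)
    return queues


# Made-up scores standing in for the output of a visual recognition model.
example_scores = {"img-1": 0.01, "img-2": 0.99, "img-3": 0.40, "img-4": 0.05}
for queue, items in triage(example_scores).items():
    print(queue, items)
```

In this toy run only `img-3` reaches a human moderator. Where the thresholds sit is a policy choice: widening the band between them sends more content to people and leaves fewer decisions to the model alone.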

As one of the pioneers in the image recognition space, the Imagga data science and engineering teams have deep expertise in developing AI solutions for visual analysis. Learn how Imagga technology is leveraging the best of artificial and human intelligence to handle every aspect of the moderation needs of companies of any size and industry.

Content Moderation Infographic

…

Sources:

1. Social Media Statistics 2020: Top Networks By the Numbers: https://dustinstout.com/social-media-statistics/

2. THE CONTENT MODERATION REPORT: Social platforms are facing a massive content crisis — here’s why we think regulation is coming and what it will look like: https://www.businessinsider.com/content-moderation-report-2019-1

3. The Problem With AI-Powered Content Moderation Is Incentives Not Technology: https://www.forbes.com/sites/kalevleetaru/2019/03/19/the-problem-with-ai-powered-content-moderation-is-incentives-not-technology/#6caa805c55b7

4. Behind the Screen (book): https://www.behindthescreen-book.com

5. The Trauma Floor – The Secret Lives of Facebook Moderators in America: https://www.theverge.com/2019/2/25/18229714/cognizant-facebook-content-moderator-interviews-trauma-working-conditions-arizona

6. Why a Top Content Moderation Company Quit the Business Instead of Fixing its Problems: https://www.theverge.com/interface/2019/11/1/20941952/cognizant-content-moderation-restructuring-facebook-twitter-google