The Image Recognition Market is Growing

Self-driving cars, face detection, social media content moderation: it’s already difficult to imagine our world without image recognition powered by Artificial Intelligence. Digital image processing has become essential to the operations and progress of companies across industries, locations, and sizes, and to each of us as individuals, too. The image recognition market is growing rapidly!

Over the last decade, the AI image recognition market, built on ever-evolving machine learning algorithms with growing accuracy, has made impressive strides. In 2023, the global market was valued at $53.29 billion. It is projected to grow at a compound annual growth rate of 12.5% until 2030, reaching $128.28 billion.
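
For readers who want to check the arithmetic behind such projections, here is a minimal Python sketch of how a compound annual growth rate relates a starting value to a future one. The function names are illustrative, and the inputs are simply the figures quoted above. Published forecasts may use a different base year or rounding, so the results need not reproduce the reports’ stated numbers exactly.

```python
def project_value(start_value: float, annual_rate: float, years: int) -> float:
    """Compound a starting value forward at a fixed annual growth rate."""
    return start_value * (1 + annual_rate) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Figures quoted in the text (billions of USD); 2023 to 2030 is 7 years.
    projected = project_value(53.29, 0.125, 7)
    rate = implied_cagr(53.29, 128.28, 7)
    print(f"Value after 7 years at 12.5%: ${projected:.2f}B")
    print(f"Rate implied by the two quoted values: {rate:.1%}")
```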

But what is driving this massive development?

It’s the perfect storm of diverse factors: major technological advancements, availability of funding, the ubiquity of mobile devices and cameras, and last but not least, shifts in consumer expectations about interactive visual user experience.

As a result, numerous large industries have already implemented image recognition in various contexts, from retail, ecommerce, media, and entertainment to automotive, transportation, manufacturing, and security. Adoption of image recognition is expected to continue on a massive scale across verticals.

In the sections below, we go over the basics about image recognition, the growth statistics and projections, and the industries driving the change, as well as Imagga’s pioneering role in the field.

The Basics About Image Recognition and Computer Vision

Before we delve into the numbers behind the image recognition market’s growth, let’s define the technology and look at the nuances that separate image recognition from computer vision, as well as their place within the broader fields of Artificial Intelligence and machine learning.

While the two terms are very close and sometimes even used interchangeably, there are some differences to note.

Image recognition entails the identification and categorization of visual content, including objects, text, people, actions, colors and other attributes within an image or photo. The typical actions that can be executed with the help of image recognition tools include image tagging, image classification, detection and localization, as well as feature identification and extraction. They’re often powered by technology called convolutional neural networks (CNNs).
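
To give a feel for the core operation inside a CNN, here is a minimal pure-Python sketch of sliding a small filter (kernel) over an image to produce a feature map. The toy image, the hand-picked kernel, and the function name are assumptions made for illustration. In a real network the kernel values are learned from data, and optimized libraries are used instead of nested loops.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    As in most deep learning libraries, the kernel is not flipped,
    so strictly speaking this is a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The feature map is strongest in the column where the dark and bright halves meet, which is the kind of low-level signal that later layers combine into more complex shapes.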

Computer vision, on the other hand, is a broader term. It includes not only image recognition but also the process of grasping context and gathering data from visuals. The main techniques in AI computer vision include object detection, image classification, and image segmentation, as well as newer ones such as image generation, restoration, and 3D vision.

The Numbers Behind the AI Image Recognition Market Growth

What stands behind the $53.29 billion global market value of image recognition? Let’s delve into the findings of Maximize Market Research’s AI Image Recognition Market- Forecast and Analysis (2023-2029) and of Grand View Research’s Image Recognition Market Size, Share & Trends Analysis Report covering the period 2017-2030.

The biggest share belongs to North America, which made up 34.8% of the market in 2022, driven by the expansion of cloud-based streaming services in the U.S. It also has the highest growth momentum at the moment, compared to Europe, Asia Pacific, Latin America, and the Middle East and Africa.

The fastest-growing market is Asia Pacific, with a compound annual growth rate of 16.7% until 2030. The driving forces there include increased use of mobile devices and online streaming, as well as security cloud solutions and ecommerce. As for Europe, the projections until 2030 also point to major growth, fueled by technological advancements in obstacle detection for automobiles.

The regional market penetration is the highest in North America, followed by Europe and Asia Pacific.

In terms of field of application, the five main areas where image recognition plays a major role are marketing and advertising (the biggest share), scanning and imaging, security, visual search, and augmented reality (the smallest share).

As for segments, facial recognition was the most prominent in 2023, driven by increased security needs in the banking, retail, and government sectors. In the next few years, the main driving forces for image recognition market growth are projected to be marketing and advertising, as well as the service segment. In marketing and advertising, the need for improved user experience and better ad placement is key. In the service segment, customization of image recognition tools for specific business needs is central.

The main techniques in wide use include recognition of faces, objects, barcodes and QR codes, patterns, and optical characters. In 2022, facial recognition accounted for 22.1% of the image recognition market, driven by growing requirements for safety and security. Pattern recognition is expected to grow significantly, thanks to its use in areas like anomaly detection, recommendation systems, and more.

Industries and Verticals Driving the Expansion

The expansion of the image recognition market is driven by technological adoption in a number of key industries and verticals, as observed in the Image Recognition Market Size, Share & Trends Analysis Report by Grand View Research and the AI Image Recognition Market- Forecast and Analysis (2023-2029) by Maximize Market Research.

The major ones include media and entertainment, retail and ecommerce, banking, financial services and insurance (BFSI), automobile and transportation, telecom & IT, government, and healthcare, as well as education, gaming, and aerospace and security.

In the last few years, the retail and ecommerce segment had the biggest market share, at more than 22%. This was largely due to the use of image recognition in online shopping, where customers can search for items by taking a photo of the object they want, shop the look, and use similar functionalities. These features have found wide adoption in fashion, furniture, and other ecommerce sectors.

The other major segments were media and entertainment, followed by banking, financial services and insurance where image recognition is widely used for ensuring security and identification, and automobile and transportation, driven by the use of image recognition in self-driving vehicles.

In 2023, the revenue share was the biggest in retail and ecommerce, while the lowest was in healthcare. As for the forecast for the compound annual growth rate until 2030, the banking, financial services and insurance segment has the lowest projection, while healthcare has the highest.

Imagga: A Pioneer and Major Player in the Image Recognition Arena

Imagga has been working on cutting-edge AI technology in the field of image recognition for more than 14 years now. We’re proud to be one of the pioneers and to continue our inventive approach to automating visual tasks for businesses across industries. We’re also happy to be on the list of key players in the field in the AI Image Recognition Market- Forecast and Analysis report by Maximize Market Research, alongside IBM, Amazon, Google, Microsoft, and more.

Our product portfolio is constantly expanding and currently includes technology that powers up image and video understanding, content moderation, and pre-trained computer vision machine learning models.

Imagga’s image recognition technology is packaged in easy-to-integrate APIs. They can be rolled out in the cloud or on premises, depending on the customer’s needs. We also offer custom model training, which produces models tailored to the specifics of the client’s data.

Imagga’s mission is to provide companies with the most powerful tools to get the greatest value from their visual content. We help businesses improve their workflows, employ automation of visual tasks, and gain deeper insights into their visual data.

The Exponential Development of Content Moderation

Image recognition is at the core of AI content moderation — a particular use of visual processing and identification that has gained significant traction in recent years.

With the unprecedented growth of social platforms and websites, as well as ecommerce and retail digital channels, the amount of user-generated content posted online every second is hard to imagine. But not all content is appropriate, legal, or safe. That’s why many different types of online businesses have turned to content moderation, so that they can provide their customers with user-friendly and safe platforms for shopping and socializing.

Automated content moderation has brought a major change in the field. It is highly efficient and protects human content moderators from being exposed to horrific visual content on a daily basis. In a nutshell, AI content moderation offers visual content screening and filtering at scale, support for legal compliance, protection of content moderators, multilingual moderation, and an unprecedented level of productivity.

While social media is the best-known field where content moderation is king, its uses are numerous. Dating platforms, travel booking websites, gaming platforms, all types of online marketplaces and ecommerce, fashion apps and websites, video platforms, forums and communities, educational platforms, networking websites, and messaging apps: this is just a short list of the applications that content moderation has today.

Unleash the Power of Image Recognition for Your Business

With its solid pioneering role in image recognition, Imagga has been the trusted partner of all types of companies in integrating the capabilities of machine learning algorithms in their workflows.

Want to learn how you can boost your business performance and tools with image recognition? Get in touch with us to explore our ready and tailor-made solutions for image tagging, categorization, visual search, face recognition, and more.

8 Prominent Use Cases of Visual Content Moderation Today

Visual content moderation is everywhere in our online experiences today, whether we realize it or not. From social media and online gaming to media outlets and education platforms, the need to make digital environments safe for everyone has made it necessary to filter user-generated content, especially the abundant images, videos, and live streams.

Content moderation has changed dramatically over the last decade thanks to machine learning algorithms. Not long ago, most content had to be reviewed by human moderators, which was a slow and potentially harmful process for the people involved. With the rapid growth of online content, it became apparent that content moderation needed a technical boost.

Artificial intelligence has contributed to a massive upgrade in the moderation process. It has brought scalability that can match the sheer amount of content constantly uploaded online. Moderation platforms powered by the evolution of image recognition have additionally revolutionized the filtering process for visual content, as they can automatically remove the most harmful, inappropriate, and illegal items.

To get an idea of visual content moderation's current applications, let’s review eight of its most prominent use cases and how it’s improving users' online environments.

1. Social Media Platforms

Visual content moderation is of utmost importance for social media platforms. They are often under scrutiny for how they handle inappropriate content, such as violence, extremism, hate speech, explicit content, and more.

Moderation for social media platforms is especially challenging due to a few key factors — the sheer amount of user-generated content, real-time publishing and circulation, and nuances in context. It takes time and adjustments for algorithms to attune to spotting cultural differences in content. The same goes for finding the right approach and applying different tolerance levels towards sensitive content depending on the cultural background.

Striking the balance between being too lenient with harmful content and censoring unnecessarily is a daunting task. The scalability and growing accuracy of computer vision are thus of immense help in content moderation for images, video, and live streaming.
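
One common way to express different tolerance levels is with per-category thresholds on the confidence scores that a moderation model returns. The sketch below is a hypothetical illustration, not Imagga’s API: the category names, threshold values, and the `Policy` and `decide` names are all assumptions. Scores are taken to be numbers between 0 and 1 coming from some upstream classifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical thresholds for one content category (scores are 0 to 1)."""
    review_at: float  # at or above this score, send to a human moderator
    remove_at: float  # at or above this score, remove automatically


# Illustrative values only; a real platform would tune these per market.
POLICIES = {
    "violence": Policy(review_at=0.40, remove_at=0.90),
    "nudity": Policy(review_at=0.30, remove_at=0.85),
}


def decide(scores: dict, policies: dict = POLICIES) -> str:
    """Return 'remove', 'review', or 'approve' for one image's category scores."""
    decision = "approve"
    for category, score in scores.items():
        policy = policies.get(category)
        if policy is None:
            continue  # no rule configured for this category
        if score >= policy.remove_at:
            return "remove"
        if score >= policy.review_at:
            decision = "review"
    return decision


if __name__ == "__main__":
    print(decide({"violence": 0.95, "nudity": 0.05}))
    print(decide({"violence": 0.50, "nudity": 0.10}))
    print(decide({"violence": 0.10, "nudity": 0.10}))
```

Lowering `review_at` makes the system stricter at the cost of more human review, while raising `remove_at` reduces the risk of removing acceptable content automatically.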

2. E-commerce Platforms and Classified Ads

The application of visual content moderation in e-commerce and classified ads is wide today. These platforms collect a vast amount of user data—not only through user-generated content. They also gather information through cookies, user profiling, and preference tracking, which feeds into the analysis of their user base and respective strategies.

Some of the biggest issues that such platforms face include controlling and removing inappropriate and counterfeit product listings, as well as scams and fraud by bad actors.

AI-powered image content moderation provides a way to handle the diversity and volume of product listings and to ensure the policy compliance of all posts and user accounts.

3. Online Gaming and Virtual Worlds

Just like social media and e-commerce, online gaming and virtual world platforms deal with abundant amounts of user-generated content.

All the different elements in the virtual environment, such as user avatars, gaming assets, and exchanges between users, require moderation to prevent exposure to harmful and inappropriate content.

Live streams are a particularly challenging aspect of visual content moderation for online gaming and virtual worlds. Real-time moderation has its own demands and requires a robust, well-planned approach that AI can offer.

4. Online Forums and Community Platforms

Online forums and community platforms are other types of platforms that rely heavily on user-generated content, which automatically means extensive content moderation.

Online forums are often thematic, while community platforms can be attached to a certain brand, game, or product.

In both cases, users contribute text and visual content in their exchanges with other community members. Content moderation thus aims to make the online environment safe while also providing users with the freedom to express themselves and communicate.

5. Dating Platforms

Dating websites and apps need to be particularly careful in their functioning because users expect a truly safe environment for their personal exchanges.

As with other online platforms, user-generated content must be screened to protect the community and allow free and safe communication.

Visual AI is of immense help in moderating the visual content shared among dating platform users.

6. Education and Training Platforms

Upholding quality standards is key for the success of education platforms, and like all other online platforms, they are susceptible to a number of content risks.

Stopping plagiarism and copyright infringement and monitoring the quality and compliance of educational content are thus of utmost importance for educational platforms.

This requires robust visual content moderation, given that a large part of educational materials today are in the form of videos, and AI-powered content moderation is the logical answer.

7. News and Media Outlets

News and media outlets are facing unprecedented challenges in the digital age. Fake news, doctored content, and misinformation are abundant, creating a constant sense of uncertainty about what we can accept as true.

To protect the truth in these interesting times, news channels and media platforms also have to rely on content moderation for their digital outlets, both for their own content and for user-generated content.

Platforms that allow user comments on news content have to moderate large amounts of data to filter out misinformation, hate speech, and spam — and computer vision is a trusted tool in this process.

8. Corporate Compliance and Governance

Content moderation is not applied only on user-facing online platforms but has its place in corporate management, too.

Data protection is of utmost importance for large companies that need to handle large amounts of data and have big teams.

Visual content moderation based on AI comes in especially handy in spotting sensitive data being shared or distributed in breach of privacy policies.

Learn How Visual Content Moderation Can Help Your Business

Visual content moderation is a must-have tool for ensuring user safety across various types of industries today.

Powered by artificial intelligence and machine learning algorithms, Imagga’s computer vision platform is a trusted partner in optimizing visual content moderation.

To learn how you can embed it in your business and get started, just contact us.

5 Innovative Uses of Image Recognition in Healthcare

Image recognition in healthcare powered by Artificial Intelligence holds immense promise for revolutionizing the field, and it is already delivering on this promise. Through accurate object detection and ever-improving image classification and segmentation, AI-powered image recognition is the leading source of innovation in medical services.

The most groundbreaking uses of computer vision today are in medical diagnosis. With the precision of image recognition in processing medical imagery and identifying various conditions, the overall accuracy of diagnostics and early detection can rise significantly. The same goes specifically for cancers and tumors, whose early discovery is of the utmost importance for saving lives and improving patients' conditions.

Yet another revolutionary use of image recognition-powered tools is in surgical assistance. Robotic guidance and analysis based on AI allow for unprecedented levels of precision and speed in performing operations, all the while reducing hospital stays and the time needed for patient recovery.

Image recognition also offers great advancements in patient treatment and rehabilitation. It allows flexible and tailor-made solutions for each individual and saves time and resources for medical institutions and practitioners. Computer vision capabilities also bring a powerful boost to human error prevention in medical diagnostics and treatment, along with improvements in specialist training and excellence.

While privacy considerations around the use of AI in medicine are important and should always be addressed and resolved, the possibilities that image recognition offers in healthcare are massive. In fact, making the best use of the life-saving power that AI-based image recognition tools offer can be an important milestone for humanity.

Let’s go through five of the most promising and innovative uses of image recognition in healthcare that are not science fiction — but are already in use in various places across the globe.

#1. Powerful Medical Diagnostics Based on AI

Speed and precision are crucial when it comes to saving people’s lives. Diagnostics is the first step to preventing detrimental conditions, prolonging life, and improving health. With the help of image recognition, medical specialists today are able to dramatically improve the accuracy of their diagnostics and reduce the time needed for the process.

Medical imaging based on AI, also known as medical image analysis, is especially helpful in the diagnostics process. It is being used in magnetic resonance imaging (MRI), X-ray, ultrasound, and other methods. Medical image analysis renders a visual model of internal tissues and organs, making it easier and faster to detect abnormalities. This allows medical specialists to get better visual data and thus make informed diagnostic decisions.

Besides the actual image recognition capabilities, AI-powered medical platforms can process large amounts of data in minimal time and use deep learning to acquire new knowledge, which complements the well-informed human judgment necessary in diagnostics. In addition, image recognition tools access and create large databases of medical cases that are invaluable for comparing images and medical conditions in support of diagnostics. Thus, AI-powered algorithms are well equipped to spot patterns and detect even minor changes in a condition, which in turn helps medical practitioners in their clinical decision-making and subsequent patient treatment.

Some of the common uses of computer vision tools in medical diagnostics include detecting cardiovascular diseases, musculoskeletal injuries, and neurological diseases, among others.

#2. Early Cancer and Tumor Detection Using Image Recognition

Medical tools based on image recognition can detect and analyze anomalies in computerized tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET) scans. Machine learning algorithms trained with enormous amounts of images can identify, compare, and process large data chunks to provide quick and precise detection of malignant formations.

Computer vision can help medical specialists, especially radiologists and pathologists, improve the early and accurate detection of different types of cancer and tumors. Platforms powered by image recognition can spot anomalies faster and more efficiently, thus helping medical practitioners in their assessment and diagnosis.

Image recognition tools based on AI are already being used to detect and diagnose skin and breast cancer. They are particularly good at differentiating between cancerous skin lesions and other skin problems that are not life-threatening. Computer vision has also brought innovation and precision to breast cancer diagnosis, as it can quickly distinguish cancerous areas from the rest of the tissue.

#3. Precise Surgical Guidance Assisted by Image Recognition

Robot surgical guidance is another highly promising use of image recognition in healthcare. Based on the patient's medical records, robots process and analyze data so that they can assist surgeons on the operating table. Using AI, robots draw information from previous surgeries to provide surgeons with guidance and the best applicable techniques.

The benefits of using robotic guidance are already clear. They include precision in incisions and faster hospital rehabilitation for patients. Robotic surgical guidance has also been shown to reduce medical complications and the invasiveness of surgical procedures. Overall, robotic surgery assistance is boosting the safety and accuracy of the surgeries in which it has been applied because it provides surgeons with a much higher level of control over the process.

Robot-guided surgeries have already been practiced in a number of medical fields and in very complicated cases, including eye surgeries, heart interventions, and orthopedics, among many others. One of the biggest benefits that they bring to surgeons is the precise localization of the surgical area based on image analysis of CT, MRI, ultrasound, PET, and other scans. This allows for better preoperative planning based on an exact 3D model of the patient and further feeds into improved guidance during the incisions themselves.

#4. Better Personalized Patient Treatment and Rehabilitation

Image recognition brings numerous benefits to personalized treatment for patients, as well as to effective rehabilitation and chronic illness care.

An individual approach to diagnostics and personalized treatment are difficult to achieve without the help of technology because both are very time-consuming. Treatment plans prepared specifically for the needs of the patient can be much more effective, and they can be realized with the help of AI-powered tools.

In the field of rehabilitation, computer vision tools allow medical practitioners to provide care and advice virtually so that patients can recover comfortably in their homes. Specialists can provide the needed physical therapy consultations while also tracking patients’ progress. In addition, image recognition technologies are being used in fall prevention systems for elderly and injured patients.

Virtual nursing assistants are another innovation that can further improve personalized treatment and rehabilitation. Virtual assistants can communicate with patients to help them with their medication, as well as to direct them to the most appropriate medical service providers. This extra care, available around the clock, can reduce hospital visits and provide better rehabilitation support to patients.

AI-based image recognition applications are also very helpful in monitoring chronic diseases by tracking health metrics like vital signs, levels of activity, nutrition, and many other factors. Patients can thus stay on top of the data and take the necessary steps to alleviate symptoms. The data can then be used by medical practitioners to spot patterns and adjust treatment based on them.

#5. Improved Error Prevention and Medical Excellence with AI

Decreasing the level of human error in medical services is a major area in which image recognition can provide significant innovation and improvement. For example, using convolutional neural networks in brain tumor detection has resulted in a reduction in human error and a boost in early identification and treatment.

Besides reducing misdiagnoses and various other errors that lead to worsened health or even the death of patients, AI-powered systems can also be useful from an administrative point of view. They can provide a repository for the diagnoses and processes for each patient, thus allowing for a higher degree of accountability and transparency in decisions and treatment.

Image recognition has also found an important place in specialist training. One such area is surgeon training. Surgical platforms based on simulation offer a practical and effective way for novice surgeons to train their skills, gain confidence, and get feedback on their performance.

In addition, image recognition models are used to help junior medical staff in their training and in actual diagnosing. Computer vision can assist them in deep analysis and accurate interpretation of patients’ scans so that they are sure they are not missing important details. Senior doctors can also use such capabilities to monitor and guide the work of medical staff in training. This can lead to a reduction of stress and improved confidence for junior doctors and the overall improvement of diagnostics.

Image recognition allows for great improvements in various other areas to boost medical excellence. Some examples include tracking hospital hygiene and upholding high standards, preventing diseases and infections in hospitals, applying lean management techniques in healthcare, and many more.

Imagga: Exploring The Power of Image Recognition in Healthcare

Imagga offers all-in-one image recognition solutions that find powerful applications in a wide variety of fields, including healthcare. Our tools are based on robust object and shape recognition and image classification, and they can power diagnostics, scanning, prevention, and more.

Get in touch to learn more about how our machine learning-powered image recognition can boost your healthcare services.

Check out Kelvin Health, our medical spin-off, to explore the capabilities of Thermography AI for easy diagnostics in a variety of medical contexts.

Beyond Human Vision: The Evolution of Image Recognition Accuracy

Technologies based on Artificial Intelligence are all the rage these days — both because of their stunning capabilities and the numerous ways in which they make our lives easier and because of the unknown future that we project they may bring.

One particular stream of development in the field of AI is image recognition based on machine learning algorithms. It’s being used in so many fields today that it’s challenging to start counting them.

In the fast-paced digital world, image recognition powers up crucial activities like content moderation on a large scale, as required by the exponentially growing volume of user-generated content on social platforms.

It’s not only that, though: image recognition finds great uses in construction, security, healthcare, e-commerce, entertainment, and numerous other fields, where it brings unprecedented benefits in terms of productivity and precision. Think also about innovations like self-driving cars, robots, and many more, all made possible by computer vision.

But how did image recognition start, and how did it evolve over the decades to reach the current levels of broad use and accuracy that sometimes even surpass the capabilities of human vision?

It all started with a scientific paper by two neurophysiologists back in 1959, which dealt with research on cats’ neurons…

Let’s dive into the history of this field of AI-powered technology in the sections below.

1950s: The First Seeds of the Image Recognition Scientific Discipline

As with many other human discoveries, image recognition started out as a research interest in other fields.

In the last years of the 1950s, two important events occurred that were far away from the creation and use of computer systems but were central to developing the concept of image recognition.

In 1959, the neurophysiologists David Hubel and Torsten Wiesel published their research on the Receptive Fields of Single Neurons in the Cat's Striate Cortex. The paper became popular and widely recognized, as the two scientists made an important discovery while studying the responses of visual neurons in cats and in particular, how their cortical architecture is shaped.

Hubel and Wiesel found that the primary visual cortex has simple and complex neurons. They also discovered that the process of recognizing an image begins with the identification of simple structures, such as the edges of the items being seen. Afterward, the details are added, and the whole complex image is understood by the brain. Their research on cats thus, by chance, became a founding base for image recognition based on computer technologies.

The second important event, which took place in 1957, was the development of the first technology for the digital scanning of images. Russell Kirsch and a group of researchers led by him invented a scanner that could transform images into numbers so that computers could process them. This historical moment led to our current ability to handle digital images for so many different uses.

1960s and 1970s: Image Recognition Becomes an Official Academic Discipline

The 1960s were the time when image recognition was officially founded. Artificial intelligence, and hence image recognition as a significant part of it, was recognized as an academic discipline with growing interest from the scientific community. Scientists started working on the seemingly wild idea of making computers identify and process visual data. These were the years of dreams about what AI could do — and the projections for revolutionary advancements were highly positive.

The name of the scientist Lawrence Roberts is linked to the creation of the first image recognition or computer vision applications. He set it all in motion by publishing his doctoral thesis, Machine Perception of Three-Dimensional Solids. In it, he details how 3D data about objects can be obtained from standard photos. Roberts’ first goal was to convert photos into line sketches that could then become the basis for 3D versions. His thesis presented the process of turning 2D into 3D representations and vice versa.

Roberts’ work became the ground for further research and innovations in 3D creation and image recognition. They were based on the processes of identifying edges, noting lines, construing objects as consisting of smaller structures, and the like, and later on included contour models, scale-space, and shape identification that accounts for shading, texture, and more.

Another important name was that of Seymour Papert, who worked at the AI lab at MIT. In 1966, he created and ran an image recognition project called “Summer Vision Project.” Papert worked with MIT students to create a platform that had to separate the background and foreground of images, as well as to detect objects that were not overlapping with others. They connected a camera to a computer to mimic how our brains and eyes work together to see and process visual information. The computer had to imitate this process of seeing and noting the recognized objects, and thus computer vision came to the fore. Regretfully, the project wasn’t deemed successful, but it is still recognized as the first attempt at computer-based vision within the scientific realm.

1980s and 1990s: The Moves to Hierarchical Perception and Neural Networks

The next big moment in the evolution of image recognition came in the 1980s. In the following two decades, the significant milestones included the idea of hierarchical processing of visual data, as well as the founding blocks of what later came to be known as neural networks.

The British neuroscientist David Marr presented his research "Vision: A computational investigation into the human representation and processing of visual information" in 1982. It was founded on the idea that image recognition’s starting point is not holistic objects. Instead, he focused on corners, edges, curves, and other basic details as the starting points for deeper visual processing.

According to Marr, image processing had to function in a hierarchical manner. His approach stated that simple conical forms can be combined to build more complex objects.

The evolution of the Hough Transform, a method for recognizing complex patterns, was another important event around this period. The algorithm was foundational for creating advanced image recognition methods like edge identification and feature extraction.

At the beginning of the 1980s, another significant step forward in the image recognition field was made by the Japanese scientist Kunihiko Fukushima. He invented the Neocognitron, seen as the first neural network categorized as ‘deep’. It is believed to be the predecessor of the present-day convolutional networks used in machine learning-based image recognition.

The Neocognitron artificial network consisted of simple and complex cells that identified patterns irrespective of position shifts. It was made up of a number of convolutional layers, each triggering actions that served as input for the next layers.

In the 1990s, there was a clear shift away from David Marr’s ideas about 3D objects. AI scientists focused on the area of recognizing features of objects. David Lowe published the paper Object Recognition from Local Scale-Invariant Features in 1999, which detailed an image recognition system that employs features that are not subject to changes from location, light, and rotation. Lowe saw a resemblance between neurons in the inferior temporal cortex and these features of the system.

Gradually, the idea of neural networks came to the fore. It was based on the structure and function of the human brain, with the aim of teaching computers to learn and spot patterns. This is how the first convolutional neural networks (CNNs) came about, equipped to gather complex features and patterns for more complicated image recognition tasks.

Again, in the 1990s, the interplay between computer graphics and computer vision pushed the field forward. Innovations like image-based rendering, morphing, and panorama stitching brought about new ways to think about where image recognition could go.

2000s and 2010s: The Stage of Maturing and Mass Use

In the first years of the 21st century, the field of image recognition shifted back towards object recognition as a primary goal. The first two decades were a time of steady growth and breakthroughs that eventually led to the mass adoption of image recognition in different types of systems.

In 2006, Princeton alumna Fei-Fei Li, who later became a Professor of Computer Science at Stanford, was conducting machine learning research and was facing the challenges of overfitting and underfitting. To address them, in 2007, she founded ImageNet, an improved dataset that could power machines to make more accurate judgments. In 2010, the dataset consisted of three million visual items, tagged and categorized in over 5,000 sections. ImageNet served as a major milestone for object recognition as a whole.

In 2010, the first ImageNet Large Scale Visual Recognition Challenge (ILSVRC) brought about the large-scale evaluation of object identification and classification algorithms.

It led to another significant step in 2012: AlexNet. The scientist Alex Krizhevsky was behind this project, which employed an architecture based on convolutional neural networks. AlexNet is widely recognized as a breakthrough for deep learning in image recognition. It brought about a significant reduction in error rates and boosted the whole field of image recognition.

All in all, the progress with ImageNet and its subsequent initiatives was revolutionary, and the neural networks set up back then are still being used in various applications, such as the popular photo tagging on social networks.

2020s: The Power of Image Recognition Today

Our current decade is witnessing a powerful move in image recognition to maximize the potential of neural networks and deep learning algorithms. These algorithms are constantly evolving and gaining higher levels of accuracy, and they are pushing the whole field forward, with a focus on classification, segmentation, and optical flow, among others.

The industries and applications in which image recognition is being used today are innumerable. Just a few of them include content moderation on digital platforms, quality inspection and control in manufacturing, project and asset management in construction, diagnostics and other technological advancements in healthcare, automation in areas like security and administration, and many more.

Learn How Image Recognition Can Boost Your Business

At Imagga, we are committed to the most forward-looking methods in developing image recognition technologies — and especially tailor-made solutions such as custom categorization — for businesses in a wide array of fields.

Do you need image tagging, facial recognition, or a custom-trained model for image classification? Get in touch to see how our solutions can power up your business.

Trust and Safety Basics for Content Moderation

The rise of digital communities and online marketplaces has brought immense changes in the ways we interact with each other, purchase goods, and seek various professional services.

On one hand, all the different social platforms with user-generated content allow us to communicate with peers and larger social circles and share our experiences through text, audio and visuals. This has expanded and moulded our social lives dramatically.

At the same time, the digital world has taken over the ways in which we look for and buy products and services. Many of us have embraced online shopping, as well as the sharing economy — from cab rides to apartments.

While many of these advancements are undoubtedly making our lives easier and often more enjoyable, the shift to digital brings about some challenges too. Among the most significant ones is ensuring the safety of online users. Protecting people from fraud, scam and misuse, inappropriate and harmful content, and other types of digital risks has thus become essential for digital platforms of different kinds.

The mass adoption and success of a social community or marketplace today is directly linked to the level of trust that people have in it. As users get more and more tech-savvy and gain experience online, they need to feel that their privacy and security are in good hands.

This is where Trust and Safety programs become essential, and solid content moderation is a key element in them. In the following sections, you can find an overview of Trust and Safety principles for today’s dynamic digital landscape, as well as the role of moderation in ensuring their efficacy.

What Is a Trust and Safety Program?

Trust and Safety programs are not simply tools to meet legal standards and regulatory requirements. They are company plans which aim at positioning a digital platform as a trustworthy place which can offer a high level of protection to its users.

In essence, a Trust and Safety program consists of precise guidelines on how to bring down the risks from using a platform to a minimum. The major threats include exposure to disturbing, inappropriate or offensive content, scams, fraud, bullying, harassment, insults, and similar.

1. The Importance of Trust and Safety Programs

Putting into practice an effective Trust and Safety program is essential for the reputation and positioning of digital platforms today, from social media and online marketplaces to dating platforms and booking websites. People are increasingly aware of the risks they can face online and prefer to opt for websites and apps that have a solid track record.

For digital platforms, complying with solid Trust and Safety requirements is the key to increasing the user base, minimizing churn, and boosting the loyalty of current users. In business terms, Trust and Safety practices ultimately have a strong impact on the bottom line. Online businesses based on social communities and user-generated content rely heavily on the level of trust for the growth of their revenue, scaling, and global expansion.

Protecting users on digital platforms is not only a smart business decision, though. It’s also a question of moral obligations towards vulnerable groups and the community as a whole. In more and more places around the world, safety and privacy are legal requirements that online businesses have to observe rigorously.

2. Essentials for Trust and Safety Programs

When it comes to crafting working Trust and Safety programs that truly deliver on their promises, there are a number of considerations to keep in mind.

First and foremost, a functional program should be able to address the wide variety and the growing number of potential violations. They’re not only abundant, but keep changing, as violators seek innovative ways to fulfill their goals and circumvent protection mechanisms. It’s also important to note that risks vary depending on the communication channels, which means different strategies may be necessary to address the increasingly diverse safety and privacy threats.

Additional considerations include the variety of languages that your digital platform boasts. With multilingual support, the challenges grow. In addition, even in a common language like English, inappropriate behavior and content can take many different shapes and forms. There are also cultural differences that can affect how trust and safety should be upheld.

Content Moderation in the Context of Trust and Safety

One of the most powerful tools that Trust and Safety managers of digital platforms have in their hands is content moderation.

The process of moderation entails the monitoring of content (text, images, video, and audio) with the aim of removing illegal and harmful items that pose risks to different groups and jeopardize the reputation of an online brand. Through these functions, content review is essential to earning users’ trust and to maintaining the level of safety required to protect them from illegal and harmful items and actions.

With effective content moderation, digital platforms can protect their users from:

- The sale of unlawful and dangerous goods

- Dissemination of hateful and discriminatory ideas

- Radical and criminal behavior of other users

- Exposure to gruesome visuals

How to Boost Your Content Moderation Efforts

With the rapid growth of digital platforms and the immense amounts of content that need to be reviewed to ensure Trust and Safety, relying on manual content moderation can be daunting. In fact, it may prove practically impossible to ensure on-the-go moderation when the volume of content that goes online grows exponentially.

Automatic content moderation powered by Artificial Intelligence is proving to be the most appropriate and effective solution to this conundrum. It doesn’t mean fully replacing moderation teams with machines, but rather using powerful platforms to minimize and simplify the work for human moderators.

The automatic algorithms can remove items that are in direct contradiction with the rules and standards of a digital platform. This saves a ton of time and effort that otherwise a moderation team has to invest in sifting through the piles of content. When there are items that are contentious and the thresholds for removal are not reached, the moderation platform directs them for manual review.
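
As a rough illustration of the routing described above, the sketch below maps a model's confidence score to one of three actions. The names `Thresholds`, `route`, `remove_at`, and `review_at`, as well as the numeric values, are hypothetical and are not taken from any specific moderation platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical per-project settings; names and values are illustrative."""
    remove_at: float = 0.90  # scores at or above this are removed automatically
    review_at: float = 0.50  # scores in [review_at, remove_at) go to a human


def route(score: float, t: Thresholds = Thresholds()) -> str:
    """Map a model confidence score in [0, 1] to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= t.remove_at:
        return "remove"          # clear violation: taken down automatically
    if score >= t.review_at:
        return "manual_review"   # contentious item: sent to a human moderator
    return "approve"             # low risk: published without review


if __name__ == "__main__":
    # Made-up scores, standing in for the output of a classifier.
    for item, score in [("post-1", 0.97), ("post-2", 0.62), ("post-3", 0.08)]:
        print(item, route(score))
```

Lowering `review_at` sends more borderline items to human moderators, while raising `remove_at` makes automatic removal more conservative.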

In addition to dramatically increasing the productivity and speed of the moderation process, automatic content moderation spares content moderators from having to look at the most disturbing and gruesome content. This improves the conditions of a job that is known for being high-risk and traumatizing for many.

Imagga Helps You Deliver on Trust and Safety

Crafting and enacting a Trust and Safety program for your digital platform gets easier with effective content moderation. Imagga’s CM solution provides you with the right tools to protect your users from harmful and illegal content.

With Imagga, you can handle all types of content, including text, images, video and even live streaming. The platform will monitor all posted content and will automatically remove items that are not compatible with your community guidelines. You can set thresholds for content flagging for items that need to be processed by human moderators.

Our content moderation solution allows you to set content retention policies that further assist you in meeting Trust and Safety requirements, as well as official regulations.

Want to get started? You can refer to our in-depth how-to guide or get in touch straight away to check out how Imagga can boost your Trust and Safety efforts.

How Can Platforms Keep Their Online Communities Safe? Our 4 Best Practices

The digital world has steadily become an indispensable part of our real lives — and with this, the numerous risks posed by online interactions have grown exponentially.

Today, online platforms where people can communicate or share user-generated content need to step up their protective measures. That’s necessary for ethical, reputational, and legal reasons.

For one, digital platforms have a responsibility to safeguard the interests of their users — and especially of vulnerable groups among them. Protecting online communities and providing an inclusive environment is imperative from the point of view of branding too. There’s also a growing body of national and international legislation requiring concrete safety measures from online platforms.

Whether you’re just launching an online platform, or are looking for ways to boost the safety measures of an existing one, there are tried-and-tested practices you can implement. At their core, they’re targeted at preventing intentional and unintentional harm to your users and at protecting their privacy, safety, and dignity.

How To Keep Your Online Communities Safe

We’ve compiled a list of proven tips for setting up a protection plan for your online community. They can help you get ahead of the threats — and minimize the necessary efforts.

1. Check Your Platform Design for Risks

It’s way more effective and cheaper to take preemptive actions towards risks — rather than waiting for harm to happen and addressing it only then.

That’s a solid guiding principle for your platform’s protection plan. You can apply it already during the design stage when you’re defining the features and functions you’d like to include.

Most importantly, you can plan the functionalities of your platform with safety in mind. This includes considering:

- How ill-meaning users can abuse the platform and harm or defraud others — and how you can prevent that

- How safety principles can be implemented in the creation of features and functions

- The types of harmful content that can be or is most likely to be generated on your particular platform

- How the interaction between the different functionalities can give rise to risks and harms for users

- How to set up an effective reporting and complaints system

- Ways to automate the processes, taking care of users’ safety and minimizing the risk of exposure to unsafe content

2. Analyze Vulnerable Groups at Risk

The next essential step in your protection plan is to identify the groups using your platform that are at a higher risk from online harm.

Most commonly, one of these groups is that of younger users. Naturally, they shouldn’t be exposed to unsafe and adult content. They should also be protected from the potential advances of older users. In some cases, you may even want to limit the age for the users of your platform.

There are different ways to address the needs of younger users, such as:

- Setting up a trustworthy system for verifying the age of users

- Creating access restrictions for younger users

- Establishing rules for interacting with underage users and enforcing restrictions through technology

Children are just one of many potential vulnerable groups. Your analysis may show you other types of users who are at an increased risk of harm while using your online platform. These may include differently-abled users, members of minorities, and others.

Depending on the precise risks for each vulnerable group, it’s important to have tailored plans for protection for each of them. It’s also a good idea to improve the overall safety of your platform — so you can deliver an inclusive and protected environment for everyone.

3. Set Up a Trust and Safety Program and a Dedicated Team

Building and implementing a sound Trust and Safety program has become an essential prerequisite for online platforms that want to protect their users and be in legal compliance.

In essence, your Trust and Safety program is the command center of all your efforts to protect your users. It has to contain all your guidelines and activities intended to minimize risks connected with using your platform.

Some crucial aspects to consider include:

- The wide variety of potential violations that can be committed

- The dynamic nature of potential violations which evolve with technological changes

- The different nuances in harmful and inappropriate user content and interactions

- The most effective approaches to content moderation as a central part of your program

Online platforms that take their Trust and Safety programs seriously have dedicated teams making sure that community rules are respected. This means having an overall approach to safety, rather than simply hiring a content moderation person or team.

Your safety team should:

- Know thoroughly the major risks that users face on your platform

- Be knowledgeable about your protection practices

- Have the right resources, training, and support to implement your Trust and Safety program effectively

4. Don’t Spare Efforts on Content Moderation

Finally, we get to the essential protection mechanism for online platforms with user-generated content and communication: content moderation. It is an indispensable tool for keeping your community safe.

Content moderation has been employed for years now by platforms of all sizes — from giants like Facebook and Twitter to small e-commerce websites. It entails the practice of filtering content for different types of inappropriate and harmful materials. Moderation can span text, visuals, video, and live streaming, depending on the specifics and needs of a platform.

While content moderation is the key to a safe and inclusive online platform, it takes its toll on the people who actually perform it. It’s a burdensome and traumatic job that leaves many moderators drained and damaged. Yet, it’s indispensable.

That’s why automatic content moderation has become an important technological advancement in the field. Powered by Artificial Intelligence, moderation platforms like Imagga take care of the toughest part of the filtering process. Image recognition and text filtering allow the fast, safe, and precise elimination of the most disturbing content, whether it’s in written posts, photos, illustrations, videos, or live streaming.

Naturally, there’s still a need for human input in the moderation process. But that’s possible too, by combining automatic filtering with different levels of engagement by human moderators. The blend between effective automation and expert insights gets better with time too, as the AI algorithms learn from previous decisions and improve with each moderation batch.

Automating Your Content Moderation: How Imagga Can Help

Most safety measures are time-consuming and need specific members of staff to apply them.

The good news is that labor-intensive and traumatic content moderation can be easily automated, taking it to unprecedented levels of speed and safety. This is what Imagga’s AI-powered automatic content moderation platform can deliver.

The moderation solution can be tweaked to match the exact needs of your platform at any given moment. For each of your projects that need content monitoring, you can set up priority levels, batch sizes, content and retention policies, and categories management. Most importantly, you can control the threshold levels for human moderation when borderline cases have to be reviewed by a moderation expert.

You can also easily modify the settings for your moderators by assigning them to different projects, and setting custom rules for them to follow, among others. The moderation process for your staff is simplified and as automated as possible.

With Imagga, you can either provide the platform to your in-house moderation team, or you can hire an external team from us that will skillfully complement your protection measures. In both cases, the AI moderation algorithms do the heavy job, complemented by the minimum possible input from human moderators.

Ready to give it a go? You can get in touch with us to find out how Imagga can help you protect your online community with its powerful content moderation solutions.

Benefits of AI Content Moderation | Which Top Business Challenges Does It Solve?

User-generated content has transformed the internet — and online platforms where people can share text, visuals, videos and live streams are growing by the minute.

This unprecedented scale of content creation in the last decade is impressive. It certainly brings new ways for self-expression for users, as well as new opportunities for businesses. At the same time, digital platforms can easily, and unwittingly, become hosts of certain users’ malicious intentions. From pornography and violence to weapons and drugs, harmful and illegal content gets published online all the time.

This brings numerous challenges for online businesses relying on user-generated content, making moderation a requirement for ensuring a safe online environment. Platforms have to protect users and uphold their reputation — while making growth possible and complying with legal regulations. In this article we'll go over the benefits of using AI content moderation compared to manual moderation.

Benefits Of AI Content Moderation

The complex task of managing content moderation at scale and in the rapidly evolving digital environment is made easier by moderation platforms powered by Artificial Intelligence. Solutions based on machine learning algorithms help businesses handle many of the challenges for which manual content review simply won’t do the job.

Here are the most notable benefits of AI content moderation for your platform.

1. Enabling Content Moderation at Scale

The biggest challenge for digital platforms based on user-generated content — from travel booking and dating websites to e-commerce and social media — is how to stay on top of all that content without automatic censoring and delays in publishing.

The need for content review is beyond doubt, and platforms of all types and sizes are looking for viable solutions to execute it. However, manual moderation comes with a high price tag, both in terms of financial investment and in terms of human moderators’ well-being. It’s also quite challenging to enforce for certain types of content, such as live streaming, where moderation has to occur in real time and should include video, audio and text.

Automatic content moderation complements the manual review approach and can increase its accuracy. It makes the process faster, saving tens of thousands of work hours. What’s more, AI-powered systems can go through massive amounts of information in record time, ensuring the speed and agility that modern digital platforms require. As algorithms learn from every new project, both from human moderators’ final decisions on dubious items and from the processing of huge volumes of content, AI can truly enable effective scaling for digital businesses.

2. AI Handles What People Shouldn’t

It’s not only expensive to hire and sustain a large moderation team when the amounts of posted content grow. It’s also a great risk for moderators who sift through the sheer volumes of posts and get exposed to the most disturbing and harmful content out there.

The reports on the harm that content moderation does to people’s psychological well-being are numerous. Stress, post-traumatic stress disorder, and desensitization are just some of the dire effects that moderators suffer from. The most popular social media platforms like Facebook and Instagram have been heavily criticized for this in recent years.

AI-powered moderation solutions don’t fully replace humans. Instead, they just take care of the hardest part of the job — the first round of screening. Moderation platforms can automatically remove content that is immediately recognized as illegal, graphic, noncompliant with a platform’s rules, or harmful in any other way. Only items that are questionable remain for manual moderation. This reduces the workload for content moderators and makes their job more manageable. This is probably one of the biggest benefits of using AI for content moderation.

3. Automatic CM Ensures Legal Compliance

Providing high-quality and timely content moderation is not only a matter of protecting users and your platform’s reputation as a secure online place. More and more legal requirements are enforced to safeguard people from exposure to harmful content.

There are specific regulatory frameworks that digital platforms have to comply with, depending on where a business entity is based. The European Union’s Digital Services Act is setting the tone on the Old Continent, as are some individual states. The US is also moving forward with requirements for effective content moderation that protects users.

Automating big chunks of your moderation makes legal compliance easier. You can set automatic thresholds for removal of illegal content, which means it will be taken down in no time. The AI can also sift through content which would otherwise raise privacy issues if processed by people. Blurring of sensitive information can also be done to ensure private data protection. The whole content review process becomes faster, which minimizes the chances of non-compliance for your business and of unregulated exposure for users.

4. Multilingual Moderation with AI Is Easier

Content moderation needs to be executed in different languages to meet the needs of global platforms and local websites alike. This applies to all types of content, including visual materials that contain words.

The traditional moderation approach would be to hire or outsource to a team of moderators who have the necessary linguistic knowledge. This can be both difficult and expensive. It may also be quite slow — especially if multilingual moderators have to handle a couple of projects in different languages at the same time.

With machine translation, moderation in languages other than English can be automated to a certain extent. Even when the process is handled by people, they can get immense support from the automatic translations and flagging.

5. Automatic Moderation Allows On-the-Go Adjustments

Manual moderation can be somewhat slow to adjust to novel situations. When there is a new screening policy to apply, or new types of inappropriate content to include, this requires creating new procedures for content moderators. Often, introducing changes to the moderation process also requires re-training to help moderating staff stay on top of the most recent trends.

AI-powered moderation can help with the flexibility challenge by allowing easy adjustments to content screening thresholds, project moderation rules, and many more variables. Automatic platforms can be tweaked in various ways to accommodate the current needs of the moderation process.

In addition, when the automatic review is paired with human moderation, the process can become truly flexible. Batch sizes and priority levels for projects can be changed across the systems, making the accommodation process for moderators easier and faster. Assigning projects to the right team members is also enabled, leading to higher productivity and improved satisfaction among moderators with specific skill sets.

How Can Imagga Help Your Online Platform?

Artificial Intelligence has fueled the creation of powerful content moderation platforms. Imagga’s CM solution is here to make the moderation challenge easier to handle.

Imagga processes all types of content — from text and visuals to video and live streaming. You can manually set the types of inappropriate content you want to filter, as well as the thresholds for removal and referral to human moderators. In fact, you can control the whole process — and automate the parts that you want, while keeping the control you need.

With Imagga, you can adjust content retention policies to comply with legal requirements. The platform also makes the moderators’ jobs easier by allowing different project priority levels and batches, as well as custom moderation rules and an easy flagging process.

Ready to give it a go? Take a look at our extensive how-to guide and get in touch to see how Imagga’s solution can solve your challenges related to content moderation.

Image Background Removal API | What Is It? How Does It Work?

Image recognition based on Artificial Intelligence is changing the way many industries are going about their business, and it’s making life easier for end consumers too.

A great example of how machine learning algorithms come in handy in our day-to-day work and leisure activities is image background removal. Gone are the days when we manually had to erase the background and cut out objects from a photo with painstaking efforts. Automatic image background removal can get that done in no time — and with no manual work on our side.

In the current guide, you can find out the essentials about picture background removal and how an API based on Artificial Intelligence can be of immense help in your visual tasks.

What Is Image Background Removal?

Professionals across many industries — including advertising, e-commerce, and marketing, among others — need to remove the background of images on a regular basis. The uses of background removal for photos are so numerous that it’s difficult to even list them — from social media and website images for brands to birthday greetings cards, and everything in between.

In fact, image background removal is a necessity for a wide range of professionals today. Online stores, fashion and design websites, interior design websites, and content management systems are just a few of the extra examples — on top of traditional graphic designers and different types of digital marketers. Media content managers, developers and car dealers are a couple of other professionals whose jobs may entail image background removal.

Background removal is also a necessity for many people who want to experiment with their personal photo collections. Users like to create image collages and make their extensive photo libraries come to life — be it for a family reunion presentation, a personalized greeting card, or a special gift to loved ones.

Previously, the only way to remove the background from a visual was to use an advanced software solution like Adobe Photoshop. You had to cut out the desired objects by hand, setting points along their contours to select them. For inexperienced graphic designers, it was a slow and tedious process that often did not yield the desired results. It sometimes even produced strange cutouts that looked as though they had been done by a child.

Visual solutions have advanced tremendously and Adobe Photoshop and Illustrator, as well as online tools like Canva, offer easier ways to tackle background removal. And yet, there’s still manual work to be done with precision and at least some experience.

For graphic designers, photographers, and marketers who work on tight schedules, taking the time to remove image backgrounds by hand can turn into a nightmare. They need quick and effective methods for taking care of mundane tasks like this one — and ideally, with a single click.

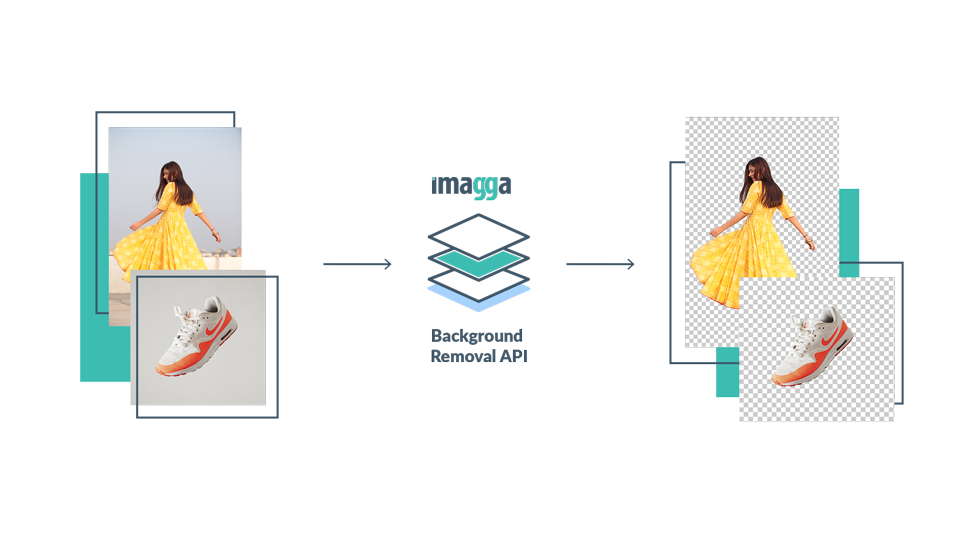

What Is an Image Background Removal API?

Instead of removing backgrounds from images by hand, you can use advanced AI-based solutions that get the job done with no fuss. That, in essence, is what a background remover API does.

1. How Does It Work?

The API is powered by machine learning algorithms that enable computer vision, which in turn allows for the quick and automatic recognition of objects in visuals. The software is trained to identify objects, people, places, elements, actions, backgrounds, and more. The more images the algorithm processes, the better it becomes at spotting different details.

On the basis of these capabilities, image recognition can be applied for a wide variety of automation tasks — image categorization, cropping, color extraction, visual search, facial recognition, and many more, including seamless background removal.

The AI-powered platform can recognize the objects and their outlines within an image with unprecedented accuracy, and smoothly separate them from the background against which they have been photographed or placed.
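To make the separation step more concrete, here is a minimal sketch in Python. It is not Imagga’s implementation. It assumes a recognition model has already produced a foreground mask with one value per pixel (1.0 for the object, 0.0 for the background), and it only shows how such a mask can be turned into a transparent cutout. The function name `apply_mask` and the tiny sample image are made up for illustration.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def apply_mask(pixels: List[List[RGB]], mask: List[List[float]]) -> List[List[RGBA]]:
    """Turn an RGB image into an RGBA cutout using a foreground mask.

    Assumption: mask values lie in [0.0, 1.0], where 1.0 means "object"
    and 0.0 means "background". Values in between give soft edges.
    """
    cutout = []
    for pixel_row, mask_row in zip(pixels, mask):
        row = []
        for (r, g, b), m in zip(pixel_row, mask_row):
            # Clamp the mask value, then scale it to an 8-bit alpha channel.
            alpha = round(max(0.0, min(1.0, m)) * 255)
            row.append((r, g, b, alpha))
        cutout.append(row)
    return cutout


# A 2x2 toy image: the left column is the object, the right column is background.
image = [[(200, 30, 30), (10, 10, 10)],
         [(190, 40, 35), (12, 11, 10)]]
mask = [[1.0, 0.0],
        [0.5, 0.0]]

cutout = apply_mask(image, mask)
```

Pixels with an alpha of 0 become fully transparent, so the cutout can be placed on any new background. The hard part, producing an accurate mask, is what the machine learning model is for.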

2. Benefits and Uses of an Image Background Removal API

The benefits of automatic background removal are numerous:

- High quality and utmost precision of object cutouts

- Fully automatic process with a single API request

- Processing of thousands of images at once via the API implementation

- Saving tons of time and money for businesses

- Enabling creativity for professionals and regular users alike
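As a rough illustration of processing many images at once, the sketch below runs a background removal call over a list of files in parallel. The actual API request is deliberately left out: `remove_one` is a placeholder you would replace with your own function that sends one image to the API and returns the resulting bytes. All names here are hypothetical and not part of any Imagga client.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


def remove_backgrounds(
    image_paths: Iterable[str],
    remove_one: Callable[[str], bytes],
    max_workers: int = 4,
) -> Dict[str, bytes]:
    """Run remove_one over many images concurrently and collect results by path."""
    paths = list(image_paths)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with paths.
        results = list(pool.map(remove_one, paths))
    return dict(zip(paths, results))


def fake_remove_one(path: str) -> bytes:
    # Stand-in for a real API request; it just returns placeholder bytes.
    return f"cutout-of:{path}".encode()


cutouts = remove_backgrounds(["shoe.jpg", "bag.jpg"], fake_remove_one)
```

Threads suit this kind of work because each request spends most of its time waiting on the network. In a real integration you would also want to respect the rate limits of whichever API you call.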

As noted earlier, background removal is a common task that is required across different industries and activities. Automatic removal with an image recognition API is thus useful for many types of professionals, as well as for end users.

Professional photographers and graphic designers may well become the biggest fans of automatic background removal. They can focus their time on actually taking photos and creating visual assets rather than on tedious technical processing, as the API can help optimize their workflows.

Marketing and advertising experts are next in line. Visuals power all social media, and nowadays there are barely any posts without images because they perform much better than text. That’s why marketers rely heavily on them, and background removal is a common task they need to take care of before sharing a visual on their brand’s channel.

Online shops, auto dealers, fashion and design platforms, and all kinds of e-commerce businesses are another set of common users for a background remover API. They all need to process visuals in order to sell their goods and services — and removing unwanted backgrounds from images is a typical task. With automatic removal, they can save time on these mundane jobs, while optimizing their online catalog for higher conversion.

Last but not least, both traditional and online media need image background removal in their daily work. Editorial visuals often have to be cropped, cleaned, and processed in different ways, including removing any elements in the background. In particular, media content editors regularly have to separate objects and people from photos to include them in news reports, be it during an election campaign or when announcing something new.

How to Use a Background Removal API?

Getting started with Imagga’s picture background removal API is a simple process. Sign up for our private beta testing.

Frequently Asked Questions

An image background removal API is a tool that allows you to process thousands of photos for background removal at once, with the help of Artificial Intelligence.

It's not difficult to integrate Imagga’s picture background removal API. You can sign up with us today and send requests to our REST API through your Imagga profile straight away. Sign up for our private beta testing.

What Is a Content Moderator? Responsibilities, Skills, Requirements and more

The role of the content moderator in today’s digital world is a central one. Moderators take on the challenging task of reviewing user-generated content to ensure the safety and privacy of online platforms. They act, in a sense, as first-line responders who make sure that our digital experiences are safe. Read on to find out what a content moderator is!

The content moderation process, as a whole, is a complex one because it entails the thorough screening of various types of content that goes online. The purpose is to ensure the protection of platform users, safeguard the reputation of digital brands, and guarantee compliance with applicable regulations.

In many cases, this means that content moderators have to go through every single piece of text, visual, video, and audio that’s being posted — or to review every report for suspicious content.

What Is a Content Moderator?

Content moderators are crucial in the process of ensuring the safety and functionality of online platforms that rely on user-generated content. They have to review massive amounts of textual, visual, and audio data to judge whether it complies with the predetermined rules and guidelines for the safety of a website.

Moderators help platforms uphold their Trust and Safety programs — and ultimately, provide real-time protection for their user base. Their efforts are focused on removing inappropriate and harmful content before it reaches users.

In this sense, the role of content moderators is essential because their work shields the rest of us from being exposed to a long list of disturbing and illegal content, including:

- Terrorism and extremism

- Violence

- Crimes

- Sexual exploitation

- Drug abuse

- Spam

- Scams

- Trolling

- Various types of other harmful and offensive content

What Does a Content Moderator Do?

The job of the content moderator is a multifaceted one. While a large portion of it may consist of removing posts, it’s actually a more complex combination of tasks.

On the practical level, content moderators use targeted tools to screen text, images, video, and audio that are inappropriate, offensive, illegal or harmful. Then they decide whether pieces of content or user profiles have to be taken down because they violate a platform’s rules or are outright spam, scam, or trolling.

In addition, content moderators may also reply to user questions and comments on social media posts, on brands’ blogs, and in forums. They can also provide protection from inappropriate content and harassment on social media pages.

By doing all of this, moderators help uphold the ethical standards and maintain the legal compliance of digital businesses and online communities. Their timely and adequate actions are also essential in protecting the reputation of online platforms.

As a whole, the job of the content moderator is to enable the development of strong and vibrant communities for digital brands where vulnerable users are protected and platforms keep their initial purpose.

What Types of User-Generated Content Does a Content Moderator Review?

The variety of user-generated content is growing by the day. This means that content moderators have to stay on top of all technological developments to be able to review them adequately.

The main types of content that are being posted online today include text, images, video, and audio. They are the building blocks of all user-generated content.

Yet the combinations of these formats are growing, with new ones emerging constantly. Just think of the news stories and live streams on platforms such as Facebook, Instagram, Twitter, and even LinkedIn.

Content moderators may also review some other content formats, such as:

- User posts on forums

- Product and service reviews on ecommerce platforms and on forums

- External links in social media posts

- Comments on blog posts

With the development of new technology, the range of user-generated content that may need moderation screening is bound to grow, increasing the importance of the review process for digital platforms.

Alternative Solutions to Using a Content Moderator

In recent years, the gigantic volume of content that has to be reviewed has driven major technological advancements in the field. They have become necessary to address the need for faster moderation of the huge amounts of user-generated posts that go live, and for unprecedented levels of scalability.

This has led to the creation and growing popularity of automated content moderation solutions. With their help, moderation becomes quicker and more effective. AI-powered tools automate the most tedious steps of the process, while also protecting human moderators from the most horrific content. The benefits of moderation platforms are undeniable, and they complement the qualified and essential work of people in this field.

Imagga’s content moderation platform, in particular, offers an all-around solution for handling the moderation needs of any digital platform — be it e-commerce, dating, or other. The pre-trained algorithms, which also learn on the go from every new moderation decision, save tons of work hours for human moderators. Machine learning has presented powerful capabilities to handle moderation in a faster and easier way — and with the option of self-improvement.

As noted, content moderation often cannot be a fully automatic process — at least at this stage of technological development. There are many cases that require an actual individual to make a decision because there are so many ‘grey areas’ when it comes to content screening.

Imagga’s platform can be used by an existing moderation team to speed up their processes and make them safer and smoother. The hard work is handled by the AI algorithms, while people have to participate only in fine-tuning contentious decisions.

In practice, this means the platform sifts through all posted content automatically. When it identifies clearly inappropriate content that falls within the predefined thresholds, it removes it straight away. If the content is questionable, however, the tool forwards the item to a human moderator for a final decision. On the basis of the choices that people make in these tricky cases, the algorithm evolves and can handle a growing share of cases on its own.
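The routing logic described here can be sketched in a few lines of Python. This is an illustration only, not Imagga’s code: it assumes the model returns a score between 0 and 1 for how likely a piece of content is to be inappropriate, and the threshold values and the name `route` are made up for the example.

```python
def route(score: float, remove_at: float = 0.9, review_at: float = 0.5) -> str:
    """Decide what happens to one piece of content based on its model score.

    Assumptions: score is in [0.0, 1.0], higher means more likely
    inappropriate, and remove_at is greater than review_at.
    """
    if score >= remove_at:
        return "remove"        # clearly inappropriate: taken down automatically
    if score >= review_at:
        return "human_review"  # grey area: forwarded to a moderator
    return "approve"           # clearly fine: published without review


# Three hypothetical posts with their model scores.
decisions = {post: route(score) for post, score in
             [("post-1", 0.97), ("post-2", 0.62), ("post-3", 0.08)]}
```

In a setup like this, the moderator’s decisions on the `human_review` items are the ones worth saving as new training examples, since they cover exactly the cases the model was unsure about.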

Content Moderation Skills

While content moderation solutions have taken up a large part of the hardest work, the job of the content moderator remains irreplaceable in certain situations. It’s a role that is quite demanding and requires a wide range of skills.

The basic task of the moderator is to figure out what content is permissible and what’s not — in accordance with the preset standards of a platform. This requires having sound judgment, so analytical skills are essential.

To achieve this, moderators have to have a sharp eye for detail and a quick mind — so they can easily catch the elements within a piece of content that are inappropriate. On many occasions, it’s important to also be thick-skinned when it comes to disturbing content.

The down-to-earth approach should be complemented with the ability to make the right contextual analysis. Beyond the universally offensive and horrible content, some texts and visuals may be inappropriate in one part of the world, while perfectly acceptable in another.

In general, moderators should be good at overall community management, respecting the specificities and dynamics of particular groups. The best-case scenario is to have previous experience in such a role. This would equip one with the knowledge of communication styles and management approaches that preserve the core values of an online group.

Multilingual support is often necessary too, given the wide popularity of international platforms that host users from all over the world. That’s why moderators who speak several languages are in high demand.

Last but not least, the content moderator's job requires flexibility and adaptability. The moderation process is a dynamic one, with constantly evolving formats, goals, and parameters. And while new technological solutions complement human moderators’ work, moderators also need proper training to use them.

How to Become a Content Moderator?

As the previous section reveals, being a content moderator is not simply a mechanical task. In fact, it is a demanding role that requires a multifaceted set of skills. While challenging at times, it’s an important job that can be quite rewarding.

To become a content moderator, one needs to develop:

- Strong analytical skills for discerning different degrees of content compliance

- Detail-oriented approach to reviewing sensitive content

- Contextual knowledge and ability to adapt decision-making to different situations and settings