Exploring the Future of Content Moderation Platforms

Content moderation platforms help maintain safe and respectful online spaces. As user-generated content grows, so does the need for effective moderation tools.

These platforms use advanced technologies to manage vast amounts of content. AI and machine learning are key players in this evolution. They offer speed and efficiency but still require human oversight for complex cases.

The balance between automated systems and human moderators is vital. It ensures nuanced and context-sensitive content handling. This balance is essential for protecting users and upholding free speech.

The future of content moderation includes more sophisticated AI and real-time capabilities. As technology advances, these platforms will continue to evolve, addressing new challenges and opportunities.

The Evolution of Content Moderation Platforms

Content moderation has come a long way. Initially, it was a manual process that relied heavily on human judgment. As online communities expanded, this approach became unsustainable and emotionally taxing for human moderators.

The introduction of automated tools marked a significant shift. Early software focused on simple keyword filtering. This method was efficient, but often lacked context awareness.
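To make the context problem concrete, here is a minimal sketch of keyword filtering in Python. The blocklist and sample sentences are invented for illustration and do not come from any real moderation product. It shows two typical failure modes: a substring match that misfires on a harmless word, and a whole-word match that cannot tell abuse from a report of abuse.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"scam", "idiot"}

def substring_filter(text: str) -> bool:
    """Flag text if a blocked term appears anywhere, even inside another word."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)

def whole_word_filter(text: str) -> bool:
    """Flag text only when a blocked term appears as a whole word."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)

samples = [
    "Beware of this scam",                    # both filters flag it
    "I love scampi for dinner",               # substring filter misfires on "scampi"
    "Reporting: someone called me an idiot",  # a report, not abuse, yet both flag it
]

for sample in samples:
    print(sample, substring_filter(sample), whole_word_filter(sample))
```

Matching whole words removes the first kind of error, but neither version understands who is speaking or why, which is the gap later AI-based approaches try to close.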

Today, content moderation platforms leverage sophisticated technologies. AI and machine learning enable more nuanced content analysis. These technologies can identify harmful content faster and more accurately.

Modern platforms feature integrated tools, including:

- Text analysis for detecting offensive language

- Image and video recognition for visual content

- Nudity and explicit content detection

- Text-in-image analysis via OCR to detect harmful text in visuals

- Real-time moderation to handle live interactions

These advancements not only improve efficiency but also address complex moderation needs. However, challenges like bias and ethical considerations persist. As platforms evolve, they strive to balance technological advancements with human oversight. This balance ensures fair and effective moderation, vital for building trust and fostering safe digital spaces.

Why Content Moderation Matters: Safety, Trust, and Compliance

Content moderation is crucial for maintaining safe online environments. It helps protect users from harmful and offensive content. This protection is essential for fostering a community where people feel secure.

Trust is another fundamental aspect. Effective moderation builds confidence among users. When content is appropriately managed, users are more likely to engage and participate. This engagement is beneficial for both the platform and its community.

Compliance is also a significant concern. Laws and regulations often require platforms to manage content appropriately. Failure to adhere can lead to severe consequences. Thus, content moderation ensures legal compliance and upholds industry standards.

Key reasons content moderation matters include:

- Ensuring user safety and security

- Building and maintaining user trust

- Meeting legal and regulatory requirements

These factors illustrate why content moderation is not optional. For platforms aiming to succeed, it is an integral part of their strategy. Well-executed moderation supports a positive user experience, which is indispensable for growth and reputation.

Key Features of Modern Content Moderation Tools

Modern content moderation tools are highly advanced and multifaceted. They offer a variety of features to tackle the ever-growing challenges of digital content. These tools help in managing vast amounts of content efficiently.

One significant feature is text analysis. It enables platforms to swiftly detect offensive language or sensitive topics. This analysis is crucial for identifying content that requires immediate attention.

Image recognition is another vital component. Advanced algorithms can scan images to detect inappropriate or offensive material. This feature is instrumental in preventing harmful visuals from reaching users.

Video moderation has also become essential. Automated systems can now analyze video content for violence, nudity, or offensive actions. This capability is necessary for platforms that host large volumes of multimedia content.

Key features of content moderation tools include:

- Text analysis for language monitoring

- Image recognition for visual content screening

- Video moderation for multimedia analysis

- Text-in-image moderation for detecting harmful text in visuals

These features enable platforms to maintain a safe and respectful environment. They ensure that harmful content is identified and managed effectively. As content continues to evolve, these tools must adapt to meet new challenges.

Human Moderators vs. Automated Solutions: Finding the Right Balance

Striking a balance between human moderators and automated solutions is crucial. Each approach has its unique strengths and weaknesses. Combining both yields optimal results in content moderation.

Human moderators excel in interpreting context and nuance. Their judgment is vital in complex situations, which automated systems might misinterpret. However, relying solely on humans can be time-consuming and inconsistent.

Automated solutions shine in processing large volumes of content quickly. They operate tirelessly and manage straightforward tasks with speed. But they may overlook context-sensitive material that requires human insight.

The ideal strategy involves a hybrid model. This model leverages technology for efficiency while relying on human oversight for accuracy. Collaboration between machines and humans enhances both speed and decision-making.

Key aspects of a balanced approach include:

- Utilizing AI for high-volume content management

- Engaging human moderators for context-specific issues

- Ensuring seamless integration of both systems

Such a balance ensures effective moderation that is both comprehensive and nuanced.
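One common way to picture a hybrid model is confidence-based routing: the automated system acts on its own only when it is very sure, and everything in between goes to a person. The sketch below assumes a model that returns a violation probability between 0 and 1. The threshold values and the names `route` and `Decision` are illustrative assumptions, not settings from any specific platform.

```python
from dataclasses import dataclass

# Illustrative thresholds; real values would be tuned per platform and category.
AUTO_REMOVE_AT = 0.95
AUTO_APPROVE_BELOW = 0.20

@dataclass
class Decision:
    action: str   # "remove", "approve", or "human_review"
    score: float

def route(score: float) -> Decision:
    """Route one item based on a model's estimated probability of a violation."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= AUTO_REMOVE_AT:
        return Decision("remove", score)        # clear violation: act automatically
    if score < AUTO_APPROVE_BELOW:
        return Decision("approve", score)       # clearly benign: publish
    return Decision("human_review", score)      # uncertain: escalate to a moderator

for s in (0.99, 0.05, 0.50):
    print(s, route(s).action)
```

Widening the middle band sends more items to human reviewers and reduces automated mistakes; narrowing it does the opposite. Where to set it is a policy decision as much as a technical one.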

Industry-Specific Content Moderator Solutions

Content moderation needs can vary greatly between industries. Each sector faces unique challenges that require tailored solutions for effective moderation. Understanding these differences is crucial for implementing suitable content moderator solutions.

Social media platforms, for instance, deal with a high volume of user-generated content. They require real-time tools to filter out offensive posts and misinformation quickly. E-commerce sites, on the other hand, focus on maintaining product authenticity and the integrity of customer reviews.

Online gaming communities emphasize safe user interactions. Their content moderation tools aim to prevent harassment and foster fair play. Solutions for each industry may include:

- Social Media: Real-time text and image recognition

- E-commerce: Fraud detection and review moderation

- Gaming: Chat moderation and user behavior analysis

- Dating: Profile verification and chat moderation

These industry-specific solutions optimize content moderation efficiency, ensuring a safer and more engaging user experience tailored to each platform's unique requirements.

Addressing Challenges: Ethics, Privacy, and Bias

Content moderation platforms face significant ethical challenges. Balancing free speech with community safety is complex. Striking this balance requires careful consideration of diverse perspectives.

Privacy is another critical issue. Content moderation tools often involve collecting and analyzing massive amounts of user data. Ensuring data protection and user consent is vital to uphold trust.

Bias in content moderation algorithms poses yet another challenge. Machine learning models can inadvertently reflect societal biases. This potential bias can impact fairness in content moderation decisions.

To address these challenges, platforms can focus on:

- Maintaining transparency in moderation processes

- Implementing robust data protection measures

- Regularly auditing AI models for bias

By addressing these areas, content moderation platforms can foster ethical practices, ensure privacy, and promote fairer content moderation outcomes.
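As one example of what a bias audit can look like, the sketch below compares false positive rates across groups: how often benign content from each group is wrongly flagged. The group names and records are made up for illustration, and a real audit would use far larger labelled samples and more than one fairness metric.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Return {group: FPR}, where FPR = wrongly flagged benign items / all benign items.

    Each record is a tuple: (group, model_flagged, actually_harmful).
    """
    flagged_benign = defaultdict(int)
    total_benign = defaultdict(int)
    for group, model_flagged, actually_harmful in records:
        if not actually_harmful:
            total_benign[group] += 1
            if model_flagged:
                flagged_benign[group] += 1
    return {g: flagged_benign[g] / total_benign[g] for g in total_benign}

# Toy, made-up audit sample: (group, model_flagged, actually_harmful)
audit_sample = [
    ("dialect_a", True, False),
    ("dialect_a", False, False),
    ("dialect_a", False, False),
    ("dialect_a", False, False),
    ("dialect_b", True, False),
    ("dialect_b", True, False),
    ("dialect_b", False, False),
    ("dialect_b", False, False),
    ("dialect_b", True, True),   # correctly flagged, so not a false positive
]

rates = false_positive_rates(audit_sample)
print(rates)
```

In this toy sample, benign posts from the second group are flagged twice as often as those from the first. A gap like that in real data would be a signal to review training data and thresholds.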

Future Trends in Content Moderation Platforms

AI advancements will drive more sophisticated moderation capabilities. Expect real-time moderation to become more viable.

Ethical AI development will also gain focus. There will be an emphasis on transparency and accountability. This shift aims to address biases and improve fairness.

Personalized moderation experiences are on the rise. Platforms will likely tailor moderation settings to individual user needs. Such customization could enhance user engagement and satisfaction.

Emerging technologies like virtual reality and augmented reality will shape content moderation. These technologies may offer immersive and innovative moderation solutions. Future developments could include:

- Enhanced multilingual support for diverse global audiences

- Integration of sentiment analysis for nuanced content detection

- Use of blockchain for decentralized moderation processes

These trends highlight the industry's commitment to creating safer online environments. Content moderation tools are evolving to meet the increasing demands of digital spaces. As technology grows, so too will the potential for innovative content moderation solutions.

This publication was created with the financial support of the European Union – NextGenerationEU. All responsibility for the document’s content rests with Imagga Technologies OOD. Under no circumstances can it be assumed that this document reflects the official opinion of the European Union and the Bulgarian Ministry of Innovation and Growth.

Visual Search and the New Rules of Retail Discovery in 2026

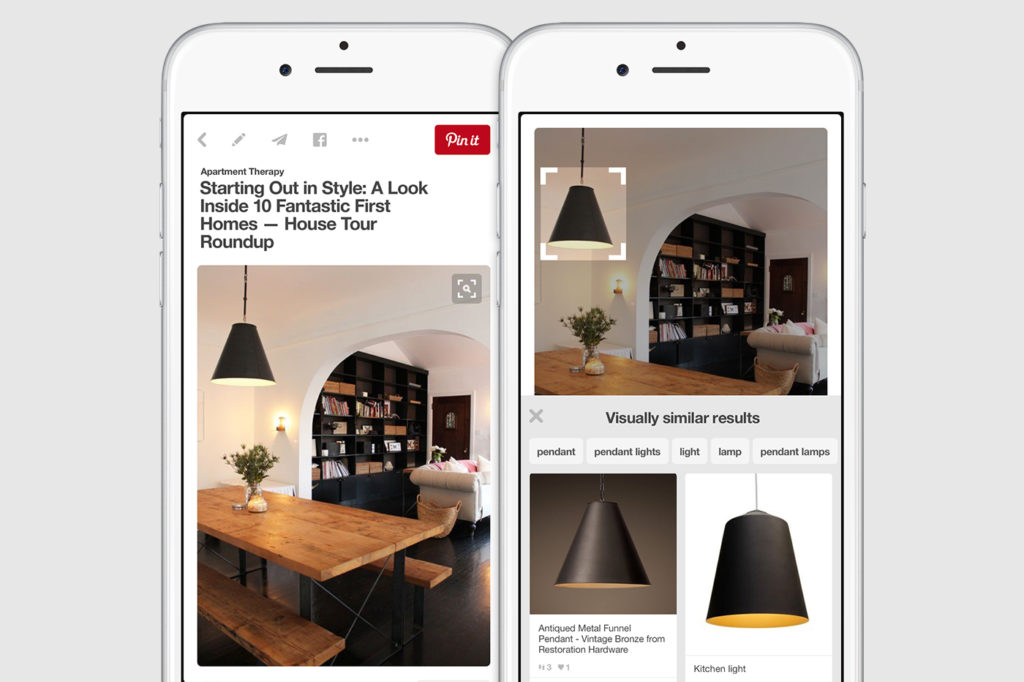

For years, online shopping has relied on words. Type a description, scroll through options, refine, repeat. But for digital-native consumers who live in a world of Instagram Reels and TikTok videos, describing what they want in words feels outdated. The next era of discovery is visual.

What is Visual Search?

Visual search is a technology that lets users find products by snapping or uploading a photo instead of typing keywords. It uses computer vision and AI to analyze the image, identify objects within it, and return visually similar items. In retail, this means shoppers can point their camera at something they like, such as a jacket, a chair, or a lamp, and instantly find where to buy it.

It’s one of the most exciting frontiers in e-commerce, bridging the gap between inspiration, convenience and purchase. While adoption is still early, new data and shifting consumer habits suggest that 2026 will mark visual search’s transition from emerging trend to essential retail capability.

Visual Search Statistics

Visual search is gaining traction, but it hasn’t gone fully mainstream yet. Here are some key numbers that show where the technology stands today:

- Only about 10% of U.S. adults use visual search regularly, but 42% say they’re interested in trying it 1

- 36% of consumers have tried visual search at least once, according to data compiled by Best Colorful Socks2

- The leaders in adoption are Gen Z and younger millennials (ages 16–34), with about 22% using visual search to discover or buy items — compared to 17% of 35–54 year-olds and only 5% among those 55+.

The State of Visual Search: High Interest, Early Adoption

These numbers show a huge gap between curiosity and daily use — a sign of strong untapped demand once visual search becomes more accessible, accurate, and embedded into everyday shopping experiences.

The generational divide is particularly revealing. Younger consumers have grown up with camera-based interaction — scanning QR codes, using AR filters, and discovering products through TikTok or Pinterest. For them, using the camera to shop isn’t a futuristic novelty; it’s the next logical step.

That means visual search isn’t just a new feature, but an emerging behavior that reflects how a new generation shops, discovers, and expects technology to work. As this group’s purchasing power grows, so will the expectation that retailers support image-based discovery as naturally as they do text or voice search.

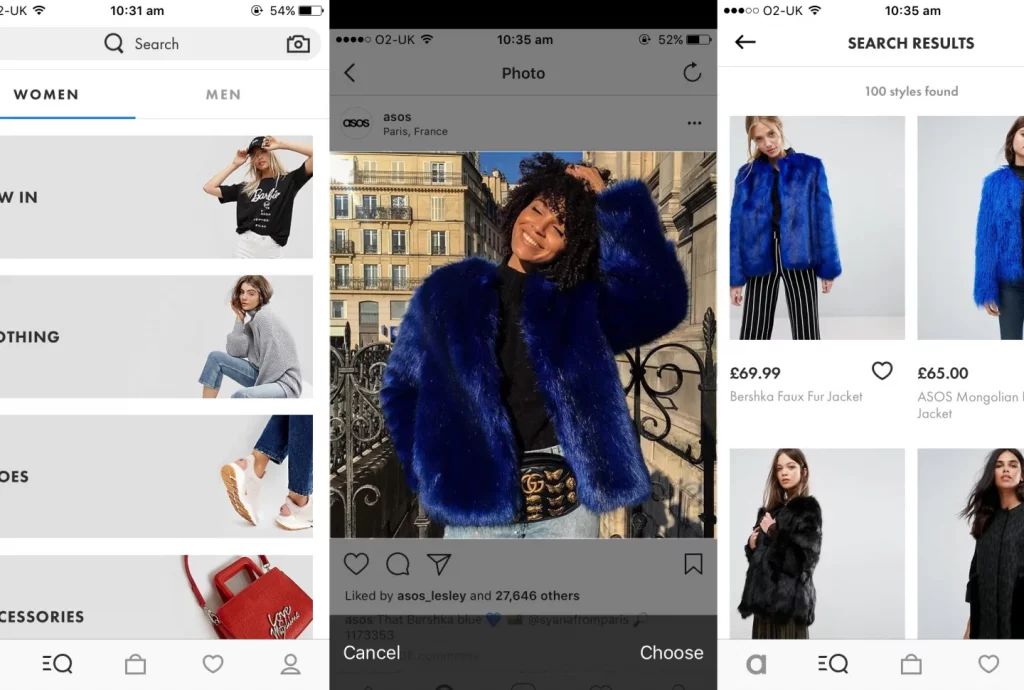

Who’s Leading the Charge

Retailers aren’t waiting for consumers to catch up. They’re already experimenting with camera-powered commerce. Visual search has moved from novelty to necessity among innovation-driven brands. Data backs this up.

Google Lens handles about 20 billion visual searches per month3, with 4 billion related to shopping, while Amazon has seen a 70% year-over-year increase in visual searches worldwide.

TikTok is testing product search by image on TikTok Shop, and Poshmark has rolled out similar functionality.

Traditional retailers are also seeing measurable results: ASOS’s Style Match feature increased engagement and conversions, and Zalando reported an 18% rise in customer engagement after adding visual search.4

IKEA, Sephora, and H&M have followed suit, showing that visual discovery is now part of how modern retail competes.

The message is clear: visual search is no longer experimental. It’s becoming a key differentiator in digital shopping experiences — and the brands that adopt it early are already reaping measurable benefits.

The Consumer Lens: Why Visual Search Just Makes Sense

Younger shoppers don’t think in keywords — they think in visuals. They see an outfit on Instagram or a lamp in a friend’s apartment and want to “find it instantly.”

According to Lyxel & Flamingo5, more than 60% of Gen Z and Millennials prefer visual over text search when it’s available, largely because it’s faster and more accurate for complex or hard-to-describe items. And 43% of online shoppers say they get frustrated when text-based search fails to match what they’re looking for.

The trust gap between visuals and words is another driver. 85% of shoppers trust product images over descriptions6, especially for categories like apparel or furniture.

It’s no coincidence that 86% of visual search users rely on it for fashion.

The ROI: From Inspiration to Conversion

Visual search doesn’t just look futuristic, it delivers measurable results. Retailers adopting it are seeing higher engagement, bigger baskets, and faster paths to purchase.

Shopify data shows that visual discovery tools can lift sales by an average of 15%, while other studies report conversion rate increases of up to 12% after integrating visual search.7

The reason is simple: customers using visual search often have stronger intent. They’re not browsing — they’re hunting for a specific look or product. By turning inspiration into instant access, visual search bridges the gap between desire and checkout.

Barriers Still Holding Retailers Back

For all the excitement, many retailers still hesitate to take the leap. The challenge isn’t lack of interest, but complexity.

The main barriers include technical investment, data privacy, and integration complexity. Developing or licensing a robust visual search engine requires ongoing AI training and catalog updates, while compliance with GDPR adds another layer of responsibility.

Then there’s user education. Retailers often report that shoppers overlook the “camera” icon unless prompted. Older consumers are less likely to try new search modes. These factors explain why, despite strong consumer appetite, visual search is still a feature offered by the few, not the many.

But with maturing APIs and pre-trained AI solutions — like Imagga’s Visual Search API — these barriers are quickly eroding.

The Inflection Point: 2026 and Beyond

Visual search is approaching a turning point. The next 12–24 months will determine which retailers treat it as a must-have and which fall behind.

Forecasts suggest that 30% of major e-commerce brands will integrate visual search by 2025, with adoption accelerating through 2026.8

The global market for visual search technology is expected to grow from $40 billion in 2024 to more than $150 billion by 2032,9 at a CAGR of 17–18%.
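As a quick sanity check on these figures, the compound annual growth rate can be recomputed from the two endpoints. The snippet below is only arithmetic on the numbers quoted above. It treats $150 billion as the 2032 value, although the forecast says "more than", so the true rate could be slightly higher.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values, as a fraction."""
    return (end_value / start_value) ** (1 / years) - 1

# $40B in 2024 growing to $150B by 2032 (figures from the forecast quoted above)
growth = cagr(40.0, 150.0, 2032 - 2024)
print(f"Implied CAGR: {growth:.1%}")
```

The result is roughly 18% per year, which is consistent with the 17–18% range cited.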

This growth will be driven by advances in computer vision and the blending of modalities — image + text + voice — that make product discovery effortless. As one retail analyst put it, “Visual search won’t replace text — it will complement it, making every query smarter and faster.”

In short: visual search is not the future of retail. It’s the next phase of it.

How Imagga Makes Visual Search Accessible

Until recently, implementing visual search meant major engineering investment. Imagga changes that.

With the Imagga Visual Search API, retailers can enable camera-based product discovery without building models from scratch.

- Fast and accurate: Trained on billions of visual data points for high match precision.

- Privacy-safe: Designed for secure, compliant image processing.

- Scalable: Works for enterprise and mid-size brands alike.

- Customizable: Retailers can train models for their own product catalog or visual style.

As visual search shifts from niche to necessity, Imagga helps retailers bridge the gap — turning emerging behavior into business growth.

The Future of Search Is Visual

Visual search is no longer just a feature, but a fundamental change in how people find what they want. Younger shoppers already expect to shop through images, not words. The technology is ready, the use cases are proven, and the competitive stakes are rising.

By 2026, visual search will move from the edges of e-commerce to its center. Retailers who prepare now will meet a generation that doesn’t search; they see.

FAQ: Visual Search in Retail

1. What is visual search?

Visual search is an AI-powered technology that allows users to find products by uploading or snapping a photo instead of typing keywords. The system analyzes the image, identifies objects or patterns, and returns visually similar results. In retail, it helps shoppers go from inspiration to purchase in a single step.

2. How does visual search work?

Visual search uses computer vision and deep learning to interpret the contents of an image — colors, shapes, textures, and objects. It then matches these visual features against a product catalog to find similar or identical items. Some systems, like Imagga’s Visual Search API, allow retailers to train the AI on their own datasets for greater accuracy.
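The matching step can be pictured as a nearest-neighbor search over feature vectors (embeddings). The sketch below assumes each catalog image has already been converted to a vector by some vision model; that model is not shown, and the three-dimensional vectors and product names are invented for readability. It illustrates the general technique of cosine-similarity ranking and is not a description of how Imagga's API works internally.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def most_similar(query, catalog, top_k=2):
    """Return the names of the top_k catalog items closest to the query vector."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in catalog.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]

# Made-up embeddings; real ones typically have hundreds of dimensions.
catalog = {
    "red wool jacket": [0.9, 0.1, 0.2],
    "red leather jacket": [0.8, 0.3, 0.1],
    "oak floor lamp": [0.1, 0.9, 0.4],
}

query_embedding = [0.85, 0.15, 0.2]  # stands in for the shopper's photo
results = most_similar(query_embedding, catalog)
print(results)
```

At catalog scale, the linear scan would normally be replaced by an approximate nearest-neighbor index, but the ranking idea stays the same.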

3. Why is visual search important for e-commerce?

Visual search makes product discovery faster and more intuitive, especially when users don’t know what keywords to use. It helps retailers capture high-intent shoppers who already know what they want visually.

4. What are the biggest challenges for retailers adopting visual search?

The main barriers are technical complexity, cost, and data privacy. Building accurate image-matching models requires significant AI expertise and infrastructure. Retailers also need to handle user-uploaded images securely and comply with privacy regulations such as GDPR. Partnering with established providers like Imagga helps overcome these challenges quickly.

5. Who is using visual search the most?

Visual search is most popular among younger, digitally native shoppers who are already accustomed to discovering products through images and social media. They expect the same seamless, camera-first experience from retail sites that they get on platforms like Instagram or TikTok. As these consumers gain more buying power, their habits are shaping visual search into a must-have capability for retailers.

6. What is the future of visual search in retail?

Visual search is steadily becoming a core part of how shoppers explore and interact with brands. As technology improves and integrates with tools like augmented reality and voice search, using a camera to find products will feel as natural as typing a query today. Retailers that adopt visual search early will be better positioned to meet changing consumer expectations and create more intuitive, visually driven customer journeys.

References

1 Emarketer: Amazon, Google enhance visual search features

2 Best Colorful Socks: Top 20 Fashion Visual Search Usage Statistics 2025

3 Emarketer: Amazon, Google enhance visual search features

4 Cross Border Magazine: Main E-Commerce Technologies during 2024 + Case Study Examples

5 Lyxel & Flamingo: Visual Search and E-Commerce: Are You Prepared for the Next Big Revolution?

6 Best Colorful Socks: Top 20 Fashion Visual Search Usage Statistics 2025

7 ASD Market Week: The Top 8 Retail Business Trends of 2025

8 Best Colorful Socks: Top 20 Fashion Visual Search Usage Statistics 2025

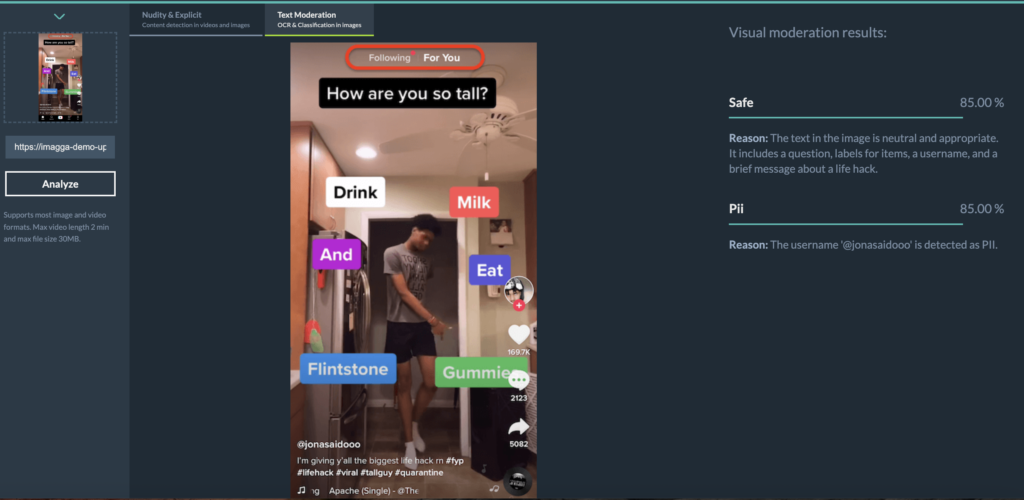

What is Text-in-Image Moderation? Challenges and Solutions

Text-in-image moderation is the process of detecting and managing harmful or sensitive words that appear inside images — in memes, screenshots, user-uploaded photos, or even video frames. It ensures that the same safety standards applied to captions, comments, and posts also apply to words that are visually embedded in pictures.

For platforms that rely on user-generated content, this capability closes a significant gap in content moderation. Without it, harmful text often slips through unnoticed, undermining safety measures and leaving communities vulnerable.

Why Text-in-Image Moderation Has Become Essential

In today’s internet, content rarely appears in one neat format. Users mix images and text in increasingly creative ways. A joke or insult is shared through a meme; a screenshot of a conversation circulates widely; a product photo includes hidden comments on the packaging.

Each of these cases can carry risks:

- Memes are powerful carriers of hate speech, harassment, or misinformation.

- Screenshots often reveal personal data, private conversations, or defamatory text.

- Background text in everyday photos (posters, graffiti, signage) may introduce inappropriate or harmful content.

- In-game images or livestreams may expose offensive usernames or chat logs.

These risks are not hypothetical. In fact, embedding harmful text inside visuals is one of the most common ways malicious users evade filters. If a platform only scans written text, like captions or comments, offensive or dangerous content inside images goes completely unchecked.

The takeaway is simple: text-in-image moderation is no longer optional. It has become essential for platforms that want to maintain safe, trustworthy environments for users and advertisers alike.

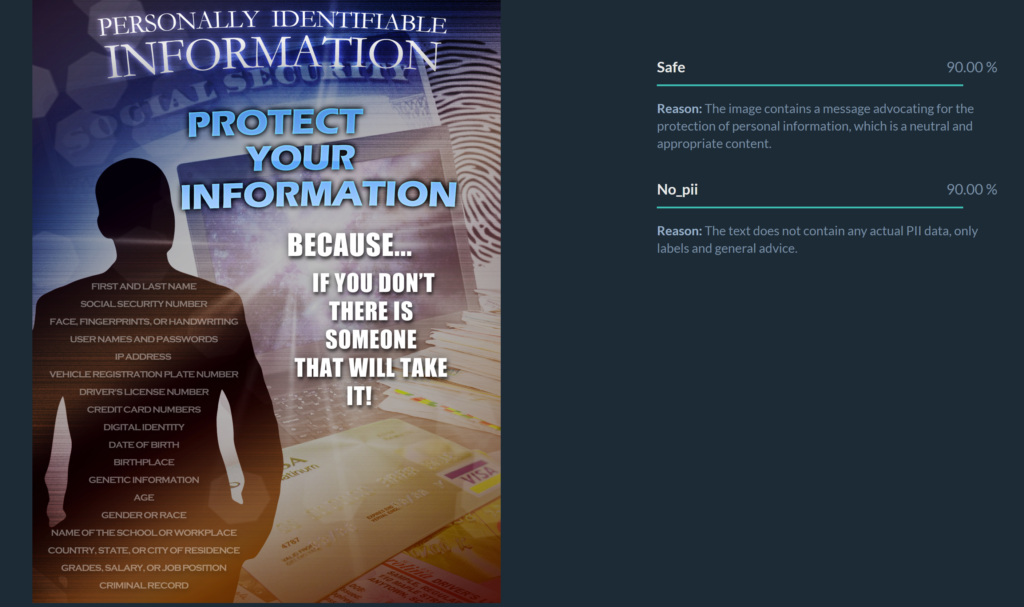

Where Text-in-image Moderation Fits in the Content Moderation Ecosystem

Text-in-image moderation sits at the crossroads of text, image and video moderation. It deals with language, but in a visual format. By bridging this gap, it completes the “visual content safety picture.”

To see why text-in-image moderation matters, it helps to place it within the larger system of content moderation:

- Text moderation looks at written language in posts, captions, and comments.

- Audio moderation automatically detects, filters or flags harmful content in spoken audio, such as hate speech, harassment, or misinformation.

- Image moderation analyzes visuals to flag nudity, violence, or other explicit content.

- Video moderation examines both frames and audio to identify unsafe material.

Imagine a platform that catches explicit images and bans offensive captions, but allows a meme carrying hate speech in big bold letters. Without text-in-image moderation, the system is incomplete. With it, platforms can finally ensure that all forms of content, whether written, visual, or hybrid, are subject to the same safeguards.

The Biggest Challenges of Moderating Text Inside Images

On paper, moderating text inside images sounds simple: just read the words with software and filter them like any other text. In practice, the task is far more complex. Five challenges stand out:

Context matters

Words cannot be judged in isolation. A racial slur on a protest sign means something very different from the same word in a news article screenshot. Moderation systems need to understand not just the text, but how it interacts with the image.

Poor quality visuals

Users don’t make it easy. Fonts are tiny, distorted, or deliberately stylized. Text may be hidden in a corner or blurred into the background. Even advanced systems struggle when the letters are hard to distinguish.

Language diversity

Harmful content isn’t limited to English. It appears in multiple languages, code-switching between scripts, or encoded through slang and emojis. A robust system needs to keep up not just with dictionary words, but with the constantly evolving language of the internet.

Scale

Billions of memes, screenshots, and photos are uploaded every day. Human moderators can’t possibly keep up. Automation is required, but automation must be accurate to avoid flooding teams with false positives.

Evasion tactics

Malicious users are inventive. They adapt quickly, using creative spellings, symbols, or image filters to disguise harmful text. Moderation systems must evolve constantly to stay ahead.
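One basic defence against creative spellings is to normalize extracted text before matching it against any word list. The sketch below is a simplified illustration: the substitution table is a small, assumed sample, and production systems typically maintain much larger tables and pair them with learned models.

```python
import unicodedata

# Small, illustrative look-alike table; real systems maintain far larger ones.
LOOKALIKES = str.maketrans({
    "0": "o", "1": "i", "3": "e", "4": "a",
    "5": "s", "7": "t", "@": "a", "$": "s",
})

def normalize(text: str) -> str:
    """Reduce common obfuscations so that disguised words match plain ones."""
    # NFKD splits accented letters into a base letter plus combining marks.
    decomposed = unicodedata.normalize("NFKD", text)
    no_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    swapped = no_marks.lower().translate(LOOKALIKES)
    # Drop separators inserted between letters, such as "s.c.a.m".
    return "".join(ch for ch in swapped if ch.isalnum() or ch.isspace())

for raw in ("S.C.4.M", "$c@m", "1d10t"):
    print(raw, "->", normalize(raw))
```

The normalized string is meant for matching only, not for display: it also rewrites legitimate numbers, so the original text should be kept alongside it.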

These hurdles explain why text-in-image moderation is still an emerging capability. They also highlight why relying on traditional methods like scanning captions alone is no longer enough.

Emerging Solutions for Text-in-Image Moderation

Despite the challenges, new technologies are making text-in-image moderation increasingly effective. Most solutions use a layered approach:

Optical Character Recognition (OCR)

OCR is the classic technology for extracting text from images. It converts pixels into characters and words. For clear, standard fonts, OCR works well. But when text is distorted, blurred, or stylized, OCR often fails.

AI-powered Vision-Language Models

The recent wave of Vision-Language Models (VLMs) has changed the game. These models can interpret both the image and the embedded text, understanding them in combination. For example, they can recognize that a phrase in a meme is meant as harassment or that a number sequence in a screenshot may be a credit card.
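For the credit card case specifically, a much simpler rule-based check is often run on the extracted text as a complement to a model. The sketch below is not a vision-language model and not Imagga's method. It uses a regular expression to find card-length digit runs and the Luhn checksum to discard most random number sequences; the function names are illustrative.

```python
import re

def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, then sum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")

def find_card_like_numbers(text: str) -> list[str]:
    """Return digit strings in text that look like payment card numbers."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits

# 4111 1111 1111 1111 is a widely used test number, not a real card.
print(find_card_like_numbers("pay to 4111 1111 1111 1111 today"))
print(find_card_like_numbers("order 1234 5678 9012 3456"))
```

A checksum match only means a sequence could be a card number, so a hit is best treated as a reason to mask or escalate rather than as proof.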

Hybrid Approaches

The strongest systems combine OCR with fine-tuned vision-language models. OCR handles straightforward cases efficiently, while the AI model interprets context and handles more difficult scenarios. This hybrid method significantly reduces blind spots.
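A hybrid pipeline of this kind can be sketched as a simple fallback: trust OCR when it reads the text confidently, and hand the image to the heavier model otherwise. In the sketch below, `run_ocr`, `text_is_harmful`, and `ask_vlm` are hypothetical callables standing in for real components, and the 0.8 confidence cut-off is an assumed value. It is a deliberate simplification, since it skips the case where clearly read text still needs contextual interpretation.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class OcrResult:
    text: str
    confidence: float  # 0.0 to 1.0, as reported by the OCR engine

@dataclass
class Verdict:
    flagged: bool
    source: str  # "ocr_rules" or "vlm"

def moderate_image(
    image: bytes,
    run_ocr: Callable[[bytes], OcrResult],     # hypothetical OCR wrapper
    text_is_harmful: Callable[[str], bool],    # hypothetical text classifier
    ask_vlm: Callable[[bytes], bool],          # hypothetical VLM wrapper
    min_ocr_confidence: float = 0.8,           # assumed cut-off
) -> Verdict:
    """Use cheap OCR plus text rules when the text is legible, else the VLM."""
    ocr = run_ocr(image)
    if ocr.text.strip() and ocr.confidence >= min_ocr_confidence:
        # Straightforward case: the text was read clearly.
        return Verdict(text_is_harmful(ocr.text), "ocr_rules")
    # Distorted, stylized, or missing text: let the VLM look at the image.
    return Verdict(ask_vlm(image), "vlm")
```

Passing the components in as functions keeps the routing logic testable with stubs and makes it easy to swap the OCR engine or model later.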

Human + AI Workflows

Automation handles the majority of cases, but edge cases inevitably arise. Escalating ambiguous content to trained reviewers ensures that platforms avoid over-blocking while still protecting users from real harm.

Together, these approaches form the foundation of modern text-in-image moderation systems.

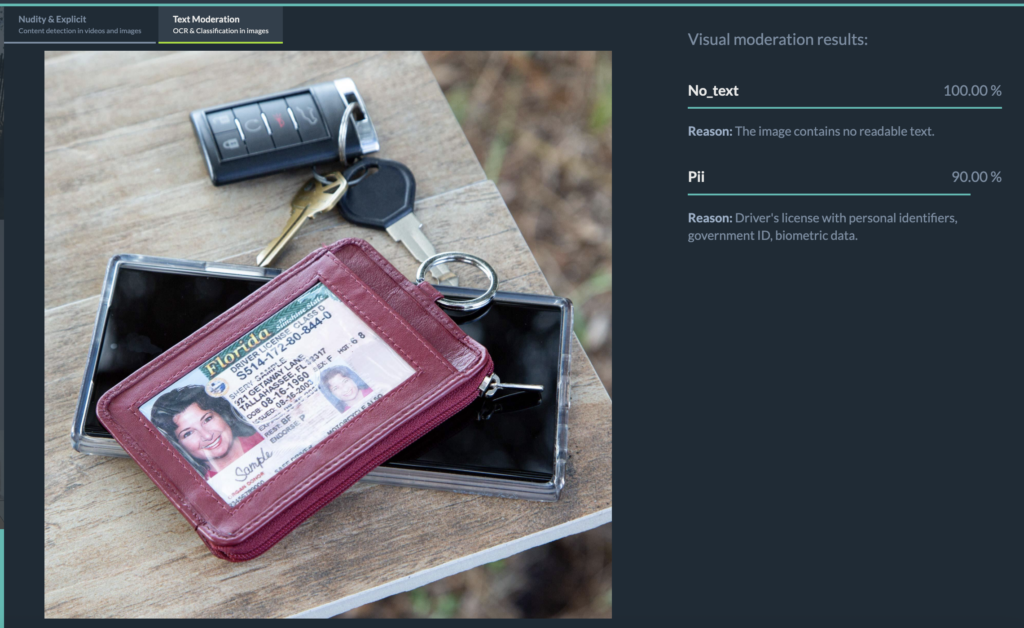

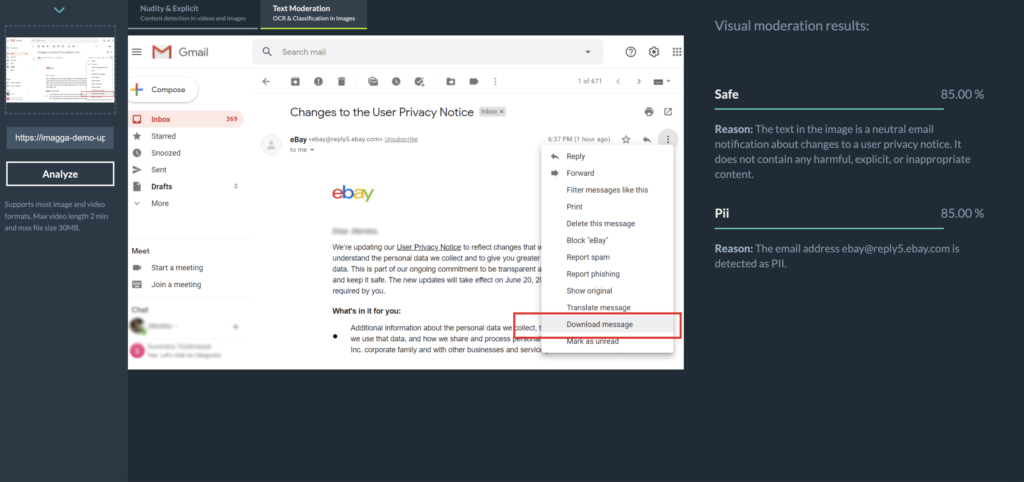

How Imagga Approaches Text-in-Image Moderation

Imagga has long been recognized for its state-of-the-art adult content detection in images and short-form video. Building on that foundation, the company now offers text-in-image moderation as a way to complete its visual content safety solution.

Imagga’s system:

- Combines advanced OCR with a fine-tuned Visual Large Language Model.

- Detects even very small or hidden text elements inside images.

- Flags sensitive categories such as hate speech, adult content references, or personally identifiable information (PII).

- Allows clients to adapt categories — excluding irrelevant ones or adding new ones based on platform-specific needs.

This adaptability matters. A dating app, for example, may prioritize filtering out sexual content and PII, while a marketplace may focus more on hate speech and fraud prevention. Imagga’s approach ensures each platform can tailor the system to its own community standards.

For a detailed look at how the technology works in practice, see How Imagga Detects Harmful Text Hidden in Images.

The Future of Text-in-Image Moderation

The demand for text-in-image moderation is only going to increase. Several trends are pushing it to the forefront:

- Regulation - Laws like the EU’s Digital Services Act require platforms to take proactive measures against harmful content. Ignoring text embedded in images would leave an obvious gap. Read our blog post on “What the DSA and AI Act Mean for Content Moderation”.

- Advertiser pressure - Brands don’t want their campaigns displayed next to harmful memes or screenshots. Content safety is directly tied to ad revenue.

- User expectations - Communities thrive when users feel protected from harassment and harm. Platforms that fail to act risk losing trust.

As the internet becomes ever more visual — with memes, short-form video, and livestreams dominating feeds — moderation systems need to cover every corner. Platforms that adopt text-in-image moderation today will be ahead of the curve in compliance, user trust, and advertiser confidence.

Stats That Highlight the Need for Text-in-Image Moderation

- About 75% of people aged 13–36 share memes (Arma and Elma)

- On Reddit, meme communities are enormous: the r/memes subreddit alone has over 35 million subscribers, placing it among the top 10 subreddits on the site (Exploding Topics)

- Platforms report that a substantial portion of hate speech violations occur via images or videos, not just plain text (The Verge)

- These “hateful memes” often combine slurs or dehumanizing imagery with humor or pop culture references, making the hate content less obvious at first glance (Oversight Board)

These figures underscore why embedded text can’t be ignored — it’s one of the most common, and most dangerous, blind spots in moderation.

Final Thoughts

Text-in-image moderation may once have seemed like a niche problem. Today, it is central to keeping digital communities safe. Words hidden in memes, screenshots, or product images are just as harmful as offensive captions or explicit visuals. Platforms that fail to detect them leave users exposed and advertisers uneasy.

The good news is that technology is catching up. Hybrid systems that combine OCR with AI-driven vision-language models are capable of extracting and interpreting text even in complex contexts. Companies like Imagga are already applying these advances to deliver adaptable, accurate solutions that fit the needs of different platforms.

FAQ: Text-in-Image Moderation

What is text-in-image moderation?

Text-in-image moderation is the process of detecting and filtering harmful or sensitive words embedded inside images, such as memes, screenshots, or video frames, to ensure platforms apply the same safety standards to visual text as they do to captions and comments.

Why is text-in-image moderation important?

Without it, harmful content hidden in memes or screenshots slips past filters, exposing communities to harassment, hate speech, and misinformation. It closes a critical gap in content moderation.

How does text-in-image moderation work?

Most systems combine Optical Character Recognition (OCR) to extract text with AI vision-language models that interpret context, tone, and intent. Hybrid human + AI workflows handle edge cases.

What challenges make text-in-image moderation difficult?

Key challenges include poor image quality, diverse languages and slang, evolving evasion tactics, and the massive scale of user-generated content. Context also matters — the same word may be harmful in one setting but harmless in another.

Which platforms benefit most from text-in-image moderation?

Any platform with user-generated content — social media apps, dating platforms, marketplaces, gaming communities, or livestreaming services — gains stronger safety, advertiser trust, and compliance with regulations like the EU Digital Services Act.

How is Imagga different in its approach to text-in-image moderation?

Imagga combines advanced OCR with fine-tuned vision-language AI to detect even small or hidden text. Its adaptable categories let platforms focus on the risks most relevant to their communities, from hate speech to bullying and personal data exposure.

This publication was created with the financial support of the European Union – NextGenerationEU. All responsibility for the document’s content rests with Imagga Technologies OOD. Under no circumstances can it be assumed that this document reflects the official opinion of the European Union and the Bulgarian Ministry of Innovation and Growth.

Safe Browsing with Imagga’s Content Moderation Chrome Extension

Content moderation is primarily the responsibility of the platforms and websites we use. Social networks, marketplaces, and publishing sites invest in systems that prevent the spread of explicit or harmful content. Yet, despite these efforts, inappropriate images can still appear in search results, image galleries, or embedded ads.

Individual users and families can add an extra layer of protection — a way to take safety into their own hands when browsing online.

The Imagga Content Moderation Chrome Extension brings the power of Imagga’s award-winning visual AI directly into your browser. It helps you and your family browse safely by automatically detecting and masking explicit images before they ever reach your screen.

Whether you’re working, studying, or sharing a computer with children, the extension ensures that your online experience remains comfortable and secure.

How It Works

Once the Imagga Content Moderation Extension is active:

- It silently monitors every webpage you open.

- Before any image is displayed, it analyzes it in real time using our advanced adult-content detection model.

- If an image is flagged as explicit or inappropriate, it’s automatically blurred or masked.

- Safe images load normally, preserving your natural browsing experience.

The entire process happens locally on your device, ensuring that your browsing data and images never leave your computer. Your privacy remains fully protected while you benefit from continuous, AI-driven content filtering.

How to Install and Use the Extension

Since the extension is not yet published in the Chrome Web Store, you can install it manually in just a few steps:

- Download the Extension Package* as a ZIP file.

- Unpack the ZIP file to a local folder on your computer.

- Open Chrome and navigate to chrome://extensions.

- Enable Developer Mode (toggle in the top-right corner).

- Click Load unpacked and select the folder where you unpacked the extension.

*The plugin is still in beta and is compatible with Chrome versions under 109.

That’s it. The Imagga Content Moderation Extension will appear in your Chrome extensions list and start working immediately.

Why Imagga

From smart video moderation to adult content detection, Imagga offers robust tools for keeping your digital platforms safe, compliant and user-friendly.

Now, we’ve made this advanced technology available in a form anyone can use. The Imagga Content Moderation Chrome Extension takes the same reliable AI that powers large moderation systems and puts it directly into your browser — fast, private, and easy to use.

It’s a simple way to bring AI-powered image safety to your everyday browsing, whether for personal peace of mind or family protection.

Browse with Confidence

With Imagga’s AI moderation running quietly in the background, you can focus on what matters — learning, working, or enjoying the web, without unexpected or inappropriate visuals interrupting your experience.

Whether you’re a parent creating a safer digital environment at home, or a professional who values privacy and control over what appears on screen, the Imagga Content Moderation Chrome Extension offers a reliable, privacy-first solution powered by world-leading visual AI.


From Memes to Screenshots: How Imagga Detects Harmful Text Hidden in Images

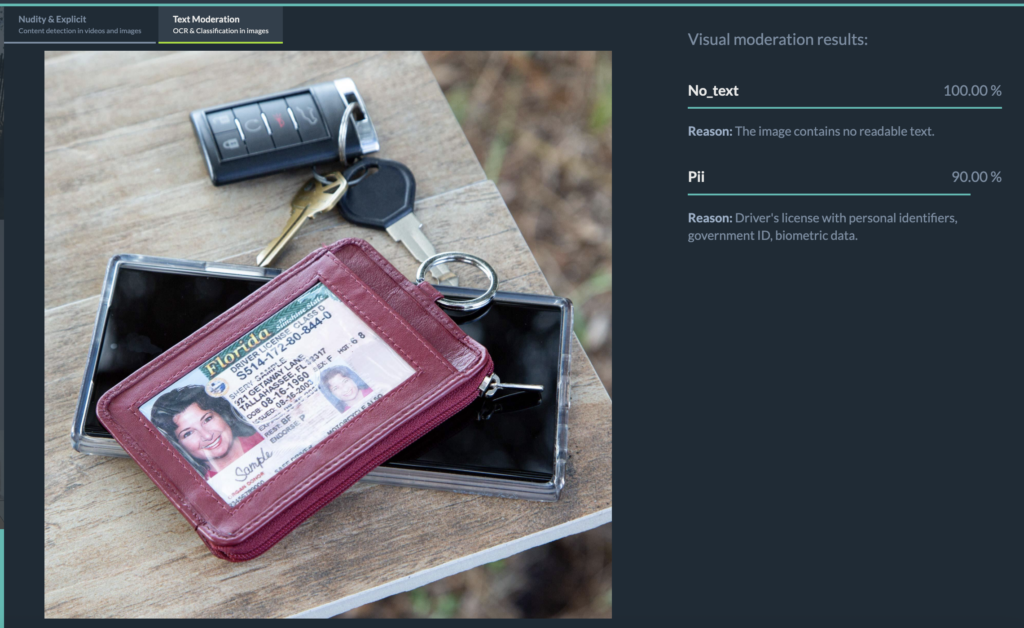

Users increasingly share content where text is embedded inside images — memes, captioned photos, or screenshots of conversations. Unlike plain text, which can be automatically scanned and classified, text inside an image must first be detected and extracted through OCR before it can be analyzed. For years, these posts remained a blind spot for moderation systems. Many filters simply did not “see” the words if they were part of an image, allowing harmful content to slip through.

This loophole gave malicious actors an easy way to bypass moderation by posting prohibited messages as images instead of text. A screenshot of a hateful comment, a meme spreading misinformation, or even a spammy promotional banner could evade detection. The stakes are high. The same harmful categories that appear in regular text, such as hate speech, sexual or violent material, harassment, misinformation, spam, or personal data, can appear in image-based text but are harder to catch. Effective text-in-image moderation is now essential for both user safety and platform integrity.

Scenarios Where Text-in-Image Moderation Makes a Difference

The risks of ignoring text in images are not abstract. They play out daily across social platforms, youth communities, and marketplaces.

Harassment and Bullying often take visual form. A user may overlay derogatory words on someone’s photo, turning a simple picture into a targeted attack. If the system only sees the image of a person, it misses the insult written across it. For platforms popular with young users, where cyberbullying thrives in memes, this is particularly critical.

Self-Harm and Suicide Content can also appear in images. A user might share a photo containing a handwritten note or overlay text such as “I can’t go on.” These posts signal that someone may need urgent help and, left unchecked, could even influence others.

Spam, Scams, and Fraudulent Ads are another area where image-based text is exploited. Spammers embed phone numbers, emails, or URLs in graphics to avoid text-based filters. From promises of quick money to cryptocurrency scams, these messages often appear as flashy images. Comment sections on social networks have been filled with bot accounts posting fake “customer support” numbers inside images, tricking users into engaging.

Personal Data and Privacy Leaks are a quieter but equally serious issue. A proud student might share a graduation photo with a diploma visible, exposing their full name, school, and ID number. Users may post snapshots of documents like prescriptions, ID cards, or credit cards, not realizing the risk. Even casual photos of streets can reveal house numbers or license plates. Moderation systems must now recognize and flag these cases to protect user privacy.

Imagga’s Text-in-Image Moderation: Closing the Gap

To address these challenges, Imagga has introduced Text-in-Image Moderation. It ensures that harmful, unsafe, or sensitive text embedded in visuals is no longer overlooked.

Building on our state-of-the-art adult content detection for images and short-form video, this new capability completes the visual content safety picture. The system combines OCR (optical character recognition) with a fine-tuned Visual Large Language Model and can be adapted to each client’s needs by excluding some categories or adding new ones.

Major functionalities include:

- Extract text from images at scale

- Understand context, nuance, and metaphors

- Operate across multiple languages and writing systems

- Classify both harmful categories and personal information (PII)

- Recognize when text condemns hate rather than promotes it, and interpret metaphor

Content Categories Covered

The model organizes extracted text into clear categories so that platforms can respond consistently. These include:

- safe content

- drug references

- sexual material

- hate speech

- conflictual language outside protected groups

- profanity

- self-harm

- spam

The categories can also be customized to each client’s needs. By covering this full spectrum, the system ensures that even subtle risks, such as casual profanity or coded hate speech, are not missed.

PII Detection: Protecting Sensitive Information

Alongside harmful content detection, Imagga’s Text-in-Image Moderation also protects users against accidental or intentional sharing of personal data. The model can identify a wide range of personally identifiable information:

- names, usernames, and signatures

- contact details like phone numbers, emails, or addresses

- government IDs such as passports or driver’s licenses

- financial details including credit cards, invoices, or QR codes

- login credentials, tokens, and API keys

- health and biometric data

- employment and education records

- digital identifiers like IP addresses or device IDs

- company-sensitive data such as VAT numbers or client details

For example, an image of a girl holding her diploma would be flagged under education-related PII, allowing platforms to take action before sensitive details are exposed publicly. This capability helps ensure compliance with privacy regulations and reinforces user trust.
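To make the idea concrete, here is a minimal sketch of a rule-based PII pass over text that has already been extracted by OCR. It is an illustration only and does not describe how Imagga's model works: the regular expressions, the `find_pii` function, and the choice to cover just email addresses and payment card numbers are all assumptions made for the example. Card candidates are confirmed with the Luhn checksum to cut down on false matches from arbitrary digit runs.

```python
import re
from typing import Dict, List

# Illustrative patterns only; production systems need far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    if not 13 <= len(digits) <= 19:
        return False
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_pii(ocr_text: str) -> Dict[str, List[str]]:
    """Return email addresses and Luhn-valid card numbers found in OCR text."""
    found: Dict[str, List[str]] = {
        "email": EMAIL_RE.findall(ocr_text),
        "credit_card": [],
    }
    for match in CARD_RE.finditer(ocr_text):
        if luhn_valid(match.group()):
            found["credit_card"].append(match.group())
    return found
```

Patterns like these only catch well-structured identifiers. Categories such as names, signatures, or health data have no fixed format, which is why the article's approach relies on a model that reads context rather than on rules alone.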

Additional Advantages of Imagga’s Text-in-Image Moderation

Beyond broad content categories and PII detection, the model is designed to handle the subtleties that often determine whether moderation succeeds or fails.

It reliably identifies small details hidden in images, such as an email address written on a piece of paper or typed faintly in the corner of a screenshot. These elements are easy for the human eye to miss, but they can expose users to spam, scams, or privacy risks if left unchecked.

The system also demonstrates exceptional OCR performance, even in noisy or low-quality environments such as screenshots of chat conversations. Whether text appears in unusual fonts, overlapping backgrounds, or compressed images, the model is trained to extract and interpret it with a high degree of accuracy.

Finally, the moderation pipeline incorporates an awareness of nuance in labeling. Simply detecting a sensitive word does not automatically trigger a harmful classification. For instance, encountering the term “drugs” in a sentence that condemns drug use will not result in a false flag. This context-aware approach prevents overblocking and ensures platforms can maintain trust with their users while still enforcing safety standards.

Completing the Imagga Moderation Suite

Text-in-Image Moderation is not a standalone feature but part of a broader safety solution. It integrates seamlessly with Imagga’s existing tools for adult content detection in images and short-form video moderation, violence and unsafe content classification, and brand safety filters. Together, these capabilities create a comprehensive, end-to-end moderation pipeline designed for today’s user-driven, content-rich platforms.

Conclusion

Text embedded in images is no longer an oversight that platforms can afford to ignore. Whether it appears as a meme, a screenshot, a scam, or a personal document, this content carries real risks for users and businesses alike. Imagga’s Text-in-Image Moderation closes this gap with advanced detection and nuanced understanding, complementing the company’s broader suite of content safety solutions.

Platforms that want to provide safer, more responsible experiences now have the tools to ensure no harmful message goes unseen.

See the Text-in-Image moderation in action in our demo

Get in touch to discuss your content moderation needs.


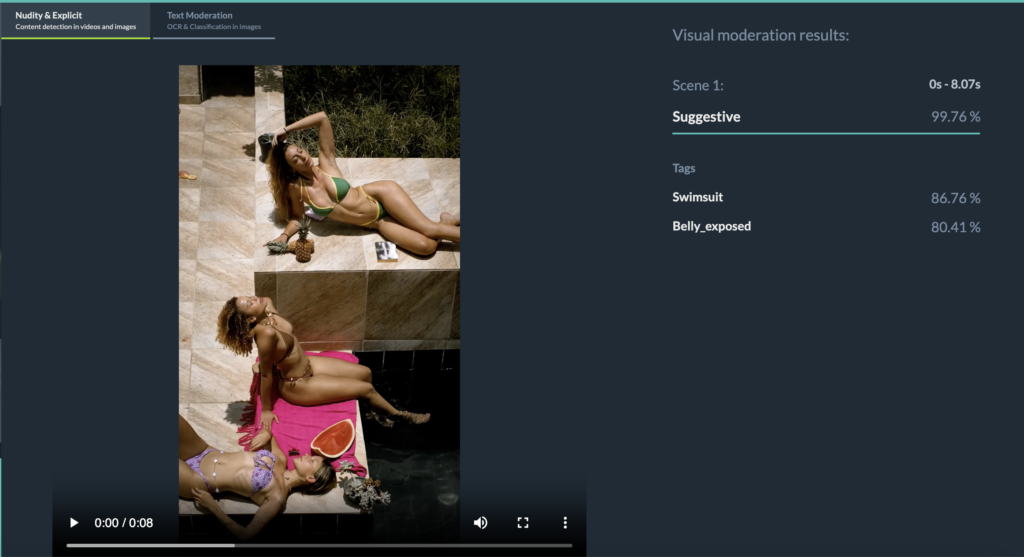

Short-Form Video Moderation: An Advanced, Accessible Solution for UGC Platforms

Short-form video moderation is a challenge of both volume and complexity. The upload rates are overwhelming enough, but the way this content is edited makes moderation even harder.

A single clip can contain hundreds of rapid cuts, layered visual effects, fleeting text overlays, and sudden shifts in tone, sometimes every few seconds. In this environment, even advanced systems can miss violations buried inside a few chaotic moments.

Large platforms like YouTube and TikTok can build their own sophisticated moderation pipelines, supported by massive AI teams, huge training datasets, and armies of human moderators. Most platforms don’t have that luxury.

Instead, they’re left piecing together off-the-shelf tools, manual review processes, or incomplete solutions, none of which are built for the speed, volume, and nuance of short-form video.

At Imagga, we’ve spent over a decade building image recognition and content moderation technology. Our Adult Content Detection model delivers 26% higher accuracy than the leading adult detection models on the market. Now, we’re applying that expertise to one of the toughest challenges in UGC: making advanced short-form video moderation accessible to platforms of any size, without missing the moments that matter.

The Limitations of Common Approaches

Many video moderation systems rely on key frame analysis: extracting a handful of “representative” frames from a video and running them through AI models to detect nudity, hate symbols, or other policy violations. This technique comes from traditional media workflows, where scenes are long, predictable, and visually consistent.

Another common shortcut is frame sampling — pulling 1-2 random frames per second and analyzing only those.

While both methods are fast, they share the same critical weaknesses:

- They can easily miss the actual violation, especially when the chosen frames are low-quality, blurred, or visually noisy.

- Explicit content doesn’t always appear in the frames that get sampled. A single inappropriate gesture, a flash of nudity, or a hate symbol shown for half a second can slip through entirely.

- With rapid edits, visual effects, and creative masking, short-form video creators have learned how to hide violations in plain sight — knowing these methods rarely catch them.

- In slow-moving footage, explicit content might appear in just a single frame or in a small area of the frame. Systems that depend on abrupt visual changes will miss it.

Random or key frame sampling can’t detect what it never sees. And when violations are missed, platforms face reputational damage, regulatory risk, and user churn.
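A quick back-of-the-envelope calculation shows how large the gap can be. The sketch below assumes a simplified model that is not taken from the article: frames are sampled at evenly spaced intervals with a random starting offset, so the chance that at least one sample lands inside a violation is the violation's duration multiplied by the sampling rate, capped at 1. The function name is hypothetical.

```python
def detection_probability(violation_seconds: float, samples_per_second: float) -> float:
    """Chance that evenly spaced sampling (random offset) hits the violation.

    Simplified model: samples are 1 / samples_per_second apart, so a window of
    length `violation_seconds` contains a sample with probability
    min(1, violation_seconds * samples_per_second).
    """
    if violation_seconds < 0 or samples_per_second < 0:
        raise ValueError("duration and sampling rate must be non-negative")
    return min(1.0, violation_seconds * samples_per_second)


# A hate symbol shown for half a second, sampled once per second:
half_second_flash = detection_probability(0.5, 1)      # 0.5

# A single frame of a 30 fps video, sampled twice per second:
single_frame = detection_probability(1 / 30, 2)        # about 0.067
```

Under this model, a half-second violation is missed half the time at one sample per second, and a single-frame violation is missed more than nine times out of ten even at two samples per second.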

Imagga’s Unique Moderation Pipeline for Short-Form Video

We designed our pipeline for uncompromising precision and speed, without the need for massive engineering resources. Our goals are simple:

Never miss a violation

Not a single frame, whether it’s buried in fast motion or hiding at the very end.

Minimize false positives

So that safe content stays online and creator trust remains intact.

Optimize speed and resources

By analyzing only the frames that matter.

How Imagga Short-Form Video Moderation Works

Scene-Based Segmentation

The video is first split into visually coherent scenes. This preserves context, even in rapid-cut edits, making it easier to detect violations in fast-paced content.
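One common way to find scene boundaries is to compare the brightness histograms of consecutive frames and start a new scene when they differ sharply. The sketch below illustrates that general technique; it is not Imagga's segmentation method. Frames are assumed to be flat sequences of grayscale values from 0 to 255, and the `threshold` and `bins` values are arbitrary choices for the example.

```python
from typing import List, Sequence


def histogram(frame: Sequence[int], bins: int = 16) -> List[float]:
    """Normalized brightness histogram of a flat grayscale frame (values 0-255)."""
    counts = [0] * bins
    for pixel in frame:
        counts[min(pixel * bins // 256, bins - 1)] += 1
    total = len(frame) or 1
    return [c / total for c in counts]


def split_into_scenes(
    frames: Sequence[Sequence[int]],
    threshold: float = 0.5,   # assumed cut sensitivity, tune per content type
    bins: int = 16,
) -> List[List[int]]:
    """Group frame indices into scenes, cutting where histograms change sharply."""
    if not frames:
        return []
    scenes: List[List[int]] = [[0]]
    previous = histogram(frames[0], bins)
    for index in range(1, len(frames)):
        current = histogram(frames[index], bins)
        # L1 distance between normalized histograms ranges from 0 to 2.
        distance = sum(abs(a - b) for a, b in zip(previous, current))
        if distance > threshold:
            scenes.append([index])      # hard cut: start a new scene
        else:
            scenes[-1].append(index)    # same scene continues
        previous = current
    return scenes
```

Histogram comparison is cheap and handles hard cuts well, but gradual transitions and heavy visual effects usually need more robust features.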

Smart Frame Extraction

Within each scene, our system selects only the most informative frames. Near-duplicates, motion-blurred frames, and visually noisy images are discarded. This reduces processing time and resources without sacrificing detection quality.

This smart frame selection is our secret sauce — it ensures fleeting violations and subtle details aren’t missed, even in super slow motion.
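Imagga does not publish how its frame selection works, so the following is only a generic sketch of the two filters named above: dropping blurred frames and dropping near-duplicates. Frames are assumed to be 2D grids of grayscale values, the sharpness measure is a simple average of horizontal pixel differences, and both thresholds are placeholder values for illustration.

```python
from typing import List, Sequence

Frame = Sequence[Sequence[int]]  # rows of grayscale pixel values (0-255)


def sharpness(frame: Frame) -> float:
    """Mean absolute difference between horizontally adjacent pixels."""
    total, count = 0, 0
    for row in frame:
        for left, right in zip(row, row[1:]):
            total += abs(left - right)
            count += 1
    return total / count if count else 0.0


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel difference between two frames of the same size."""
    total, count = 0, 0
    for row_a, row_b in zip(a, b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += abs(pixel_a - pixel_b)
            count += 1
    return total / count if count else 0.0


def select_frames(
    frames: Sequence[Frame],
    min_sharpness: float = 5.0,     # assumed blur cut-off
    min_difference: float = 10.0,   # assumed near-duplicate cut-off
) -> List[int]:
    """Return indices of frames that are sharp and differ from the last kept frame."""
    kept: List[int] = []
    for index, frame in enumerate(frames):
        if sharpness(frame) < min_sharpness:
            continue    # too blurry to classify reliably
        if kept and mean_abs_diff(frames[kept[-1]], frame) < min_difference:
            continue    # nearly identical to the previous kept frame
        kept.append(index)
    return kept
```

Because every frame is inspected before being discarded, this style of selection reduces the number of frames sent to the classifier without skipping parts of the video outright, unlike fixed-rate sampling.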

AI-Powered Explicit Content Detection Models

Selected frames are analyzed by Imagga’s advanced moderation models, trained to detect nudity across diverse scenarios, lighting conditions, and styles. The result is state-of-the-art precision in scene classification.

This multi-step pipeline ensures even the briefest policy violations are caught, making it especially powerful for short-form videos, where quick cuts, overlays, and visual effects often trip up simpler moderation systems.

Try It for Yourself

Test our short-form video moderation demo and see it in action. Upload a clip and instantly view the scene analysis and flags for detected violations.

Got a video moderation challenge? Get in touch and let’s talk about how Imagga can help.


Facial Recognition for Profile Verification in Dating Apps

Dating apps are plagued by fake profiles, bots, inappropriate content, and romance scams that cause people to lose trust in using them. In fact, safety concerns are a major reason many people avoid online dating.

According to a Bumble survey, 80% of Gen Z daters prefer to meet people with verified profiles—something that ultimately leads to more matches. Nearly 3 in 4 respondents said that security is a crucial factor in choosing a dating app.

Profile verification features have emerged as a key solution to rebuild trust and protect users. Verifying a profile with facial recognition helps confirm the person is real and matches their photos. This stops imposters and scammers from deceiving others.

Verify your profile to go on more dates

Tinder reports that verified profiles lead to more matches. In one analysis, photo-verified users aged 18–25 saw approximately 10% higher match rates than those who weren’t verified.

A verification badge essentially tells others you're genuine, instilling confidence in potential matches.

"Verifying your profile is the easiest thing you can do to level up your dating game," said Devyn Simone, Tinder's Resident Relationship Expert. "Nobody wants to start flirting and then wonder if their new crush is a real person, so verifying your profile is the best way to help confirm you are the person in your photos. It's so important to be safe when interacting with people online, and verified profiles are a huge green flag!"

Common Verification Methods in Dating Platforms

Dating services use different levels of verification to confirm a profile’s authenticity. These methods range from very basic checks to highly robust identity proof:

Basic contact verification (weak)

Confirms an email or phone number. While common, this doesn’t prove the user’s identity or verify that their photos are authentic.

Photo/Selfie verification (moderate)

Prompts the user to take a selfie (often in real time) which the app compares to existing profile photos using facial recognition.

ID document verification (strong)

The most robust option. Users upload a government ID and take a live selfie. The system matches the face on the ID with the selfie and cross-checks details like age.

In practice, facial recognition technology is central to the more secure verification processes. Whether comparing a selfie to profile photos or an ID card, the system uses face-matching algorithms — with built-in liveness checks — to confirm that it’s the same person and that the capture is genuine.

How Face Recognition Verification Works

Modern face-based verification combines biometric AI with thoughtful user experience design. Here's how it typically works.

Live Selfie Capture

Users are prompted to capture a live selfie directly in the app. Video-based capture is becoming more common, as it provides multiple angles for more accurate verification. Randomized prompts, like asking users to turn their head or say a phrase, further prevent fraud using static photos or pre-recorded clips.

Facial Recognition Matching

Once captured, the system analyzes the selfie using facial recognition algorithms. It generates a facial template, a unique numerical representation of the user’s facial features, and compares it to a reference: either profile photos or a government ID. It then calculates similarity scores to determine if there’s a match.
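The matching step can be illustrated with a small sketch. It assumes the facial templates are already available as numeric vectors (producing them requires a face recognition model, which is out of scope here) and uses cosine similarity, one common choice of similarity score. The function names and the `threshold` of 0.8 are hypothetical; real systems calibrate the threshold against measured false-accept and false-reject rates.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two templates; 1.0 means identical direction."""
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_same_person(
    selfie_template: Sequence[float],
    reference_templates: Sequence[Sequence[float]],   # profile photos or ID photo
    threshold: float = 0.8,                           # assumed decision threshold
) -> bool:
    """Accept if the selfie is close enough to at least one reference template."""
    best = max(
        (cosine_similarity(selfie_template, ref) for ref in reference_templates),
        default=0.0,
    )
    return best >= threshold
```

Raising the threshold reduces the chance of an impostor passing but rejects more genuine users in poor lighting or at unusual angles, which is the trade-off behind the limitations discussed in the next section.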

Concerns and Limitations of Facial Recognition in Dating Apps

While facial recognition technology offers powerful tools for dating apps, it also brings a range of concerns and limitations that users and companies must consider. One of the most pressing issues is privacy. The use of facial recognition in apps can lead to the collection and storage of sensitive biometric data, raising the risk of unauthorized access or misuse. There is also the potential for this technology to be exploited for stalking or harassment, as someone could use a photo to identify or track a person without their consent—a clear violation of privacy.

Technical limitations are another concern. Facial recognition algorithms can be affected by poor lighting, unusual angles, or low-quality images, which may result in false positives or negatives. This means that genuine users might be wrongly flagged, or fake profiles could slip through the cracks. Additionally, these algorithms are not always equally accurate for everyone. Studies have shown that facial recognition technology can struggle with accuracy for people with darker skin tones or those from marginalized communities, leading to unfair outcomes and potential discrimination.

Given these concerns, it’s crucial for dating apps to address the limitations of facial recognition technology and ensure that its use does not compromise user safety, privacy, or equality.

How Face Recognition Helps Dating Apps Increase User Safety

Preventing Multiple Accounts

An interesting application of face recognition in this context is to enforce the “one real person, one account” rule. By hashing and comparing facial templates, an app can check if a face has already been verified on another profile.

Supporting Age and Safety Checks

Some platforms use facial analysis to estimate whether a user meets age requirements, helping to prevent underage users from creating accounts.

Detecting AI-Generated or Deepfake Profiles

With the rise of AI-generated faces and deepfakes, facial recognition systems trained on real human biometric patterns can help flag profiles that appear synthetic. Some tools now combine face analysis with anomaly detection to identify images lacking biological realism or facial diversity cues.

Enabling Community Reporting with Visual Proof

Facial recognition-backed verification makes it easier for platforms to investigate user reports of impersonation, harassment, or deception. A verified user’s visual data can be used (within privacy guidelines) to confirm whether complaints are legitimate, accelerating moderation and response.

Reducing Manual Review Load

Advanced facial recognition automates what used to require human moderators—like comparing a user’s selfie with multiple uploaded photos or ID scans. This allows platforms to scale quickly and maintain safety even as user bases grow.

Building User Trust Through Verified Badges

Facial recognition enables platforms to confidently offer visible verification badges, which users interpret as a “green flag.” Studies show that verified profiles are more likely to receive messages, likes, and matches—creating a more respectful, engaged user environment.

Conclusion and Future Outlook

Facial recognition for profile verification has rapidly evolved from an optional feature to a must-have across the global dating app industry. From large platforms like Tinder and Bumble to niche communities and even Telegram-based dating bots, verification is becoming essential for safety and trust.

By confirming that the person behind a profile is real, and the same person shown in their photos, dating apps significantly reduce scams, impersonation, and catfishing. The result? Safer platforms, more genuine connections, and a better user experience overall.

Looking ahead, verification processes will likely become even more advanced. Ongoing AI innovations will strengthen liveness detection and anti-spoofing features, making it nearly impossible for anyone but the real user to pass verification.


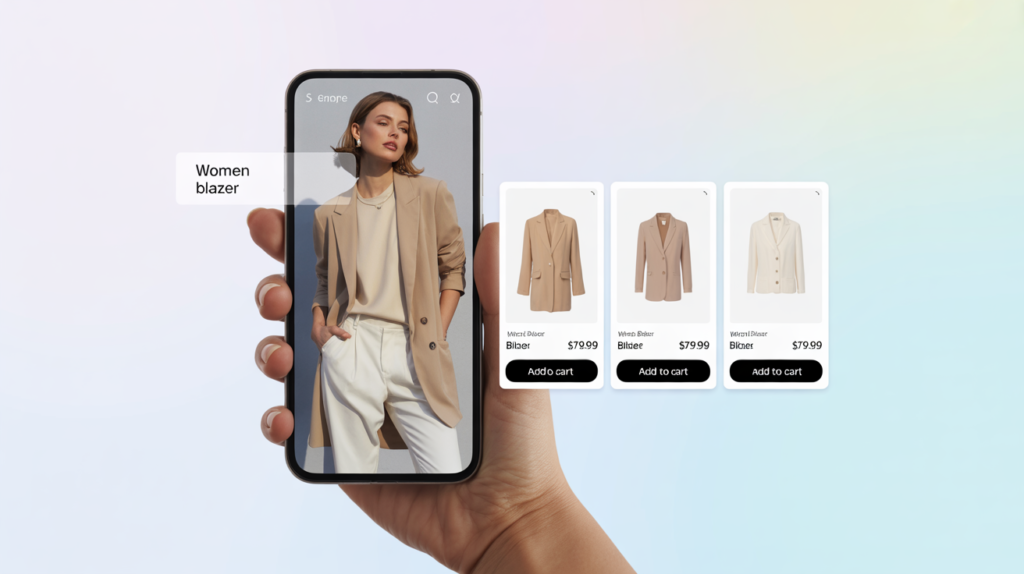

Enable Product Search by Image in Your Platform

In an increasingly visual-first digital world, users don’t want to describe what they see; they want to show it. Visual search bridges that gap, transforming user-uploaded images into powerful discovery experiences. From finding the perfect chair to identifying a rare plant or surfacing similar products, this technology is changing how people explore and buy online.

And it is not just for end users. It can be incredibly powerful in backend operations. Visual search enables faster product search by matching scanned or photographed items with existing product images in your catalog, making inventory handling more efficient.

If you’re building a platform that needs smart, intuitive product discovery, whether customer-facing or internal, and especially in retail, fashion, furniture, or lifestyle, it’s time to consider adding search by image. Let’s explore how it works, how to implement it using a plug-and-play API like Imagga Visual Search, and the benefits it can deliver for your business.

What Is Visual Search?

Visual search is a technology that allows users to search for information using images instead of text.

At its core, it uses computer vision — a subset of AI that helps machines “see” and interpret visuals. Much like the human eye identifies and recognizes objects that catch your attention, computer vision enables systems to spot and analyze items in images. The system identifies objects, extracts features (like color, shape or texture for example), and compares them to a database to find the most visually similar items.

Think of it as reverse image search, but smarter, faster, and purpose-built for product discovery, not just metadata matching.

What’s the difference between reverse image and visual search?

Reverse image search is typically used to find the source of an image or locate exact or near-exact copies across the web. It compares the uploaded image to a database of indexed images, returning matches based on overall similarity or file attributes — tools like Google Images or TinEye are common examples.

Visual search, on the other hand, goes a step further, using advanced computer vision and AI to analyze specific visual features, such as shape, color, texture, and context — and then finds visually similar items, even if they’re not exact matches. This makes the technology ideal for product search, where a user might upload a photo of a chair or outfit and expect to see lookalike products, not just the original image.

In short, reverse image search is about finding the same image; visual search is about understanding and matching what’s in the image so that it can show you visually similar images.

How Your Users Already Use Visual Search (And Why You Should Catch Up)

Your users are already used to this way of finding products — just not on your platform.

Google Lens helps them identify plants, clothes, pets, and restaurants. Pinterest and Amazon let them tap or upload photos to discover similar products. These are some of users' favorite apps for visual search and discovery, offering quick and integrated solutions across devices.

Fashion and furniture brands use it to suggest visually similar styles based on what someone’s browsing.

These platforms have trained users to expect camera-first interaction. If your product discovery still relies on drop-down filters and keyword tags, you’re making users work harder than they should.

Identifying Objects and Products with Visual Search

One of the most compelling advantages of the technology is its ability to recognize real-world objects and instantly suggest matches — without the need for perfect descriptions or category filtering.

Whether a user snaps a photo of a chair they saw in a café or uploads an image of a product they own, Imagga’s visual search can identify similar items from your catalog. This goes beyond product titles or metadata — it looks at the actual visual features of the item (like shape, texture, or color) to make a match.

You can use this to:

- Match photographed furniture, clothing, or accessories with similar SKUs

- Help users identify and explore unknown products in your database

- Let internal teams or warehouse staff scan and identify items visually, even without barcodes or tags

- Enable users to identify plants or animals, for example by discovering the species of an animal they encounter

Visual Search for Product Discovery and Inspiration

Beyond identification, visual search is a powerful tool for serendipitous discovery — especially in verticals like fashion, furniture, and home decor where aesthetics drive decisions.

What is a serendipitous discovery? Serendipitous discovery is the process of unexpectedly finding something valuable or appealing while searching for something else—or nothing at all. In the context of visual search, it refers to uncovering relevant or inspiring products through image-based exploration, even without a clear or specific intent.

With Imagga’s visual search API integrated into your app or website, users can:

- Upload a photo of an outfit and get instant style matches from your product catalog, helping them find the look they want

- Take a picture of a friend's apartment and find complementary furniture or decor, or discover items that match the look of something that catches their eye

Building a Visual Search Backend That Scales

For teams ready to move beyond basic tagging and manual cataloging, Imagga provides the technical foundation for building a powerful, scalable visual product search backend. Unlike general-purpose AI services, Imagga is purpose-built for developers who want tight control over the image-matching process inside their own applications.

Using Imagga’s Visual Search API, you can index your entire product catalog not just by keywords or categories, but by the visual features of each item — color, texture, shape, and more. This allows you to create a backend that responds in real time to user-uploaded images or camera input, returning the most visually relevant products from your catalog.
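Conceptually, such a backend stores one feature vector per product and ranks the catalog by similarity to the query image's vector. The sketch below shows that idea with a tiny in-memory index. It does not call or reproduce Imagga's Visual Search API: the `VisualIndex` class is hypothetical, and the feature vectors are assumed to come from whatever feature extractor or API you use.

```python
import math
from typing import Dict, List, Sequence, Tuple


def _normalize(vector: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        return [0.0 for _ in vector]
    return [x / norm for x in vector]


class VisualIndex:
    """Hypothetical in-memory index mapping product IDs to visual feature vectors."""

    def __init__(self) -> None:
        self._items: Dict[str, List[float]] = {}

    def add(self, product_id: str, features: Sequence[float]) -> None:
        """Index (or re-index) one catalog item by its feature vector."""
        self._items[product_id] = _normalize(features)

    def search(self, query_features: Sequence[float], k: int = 3) -> List[Tuple[str, float]]:
        """Return the k most visually similar products with their scores."""
        query = _normalize(query_features)
        scored = [
            (product_id, sum(q * v for q, v in zip(query, vector)))
            for product_id, vector in self._items.items()
        ]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]


# Toy three-dimensional "features" purely for illustration.
index = VisualIndex()
index.add("red-chair", [0.9, 0.1, 0.0])
index.add("blue-sofa", [0.1, 0.1, 0.9])
index.add("red-stool", [0.8, 0.2, 0.1])
results = index.search([1.0, 0.0, 0.0], k=2)
```

A linear scan like this is fine for small catalogs. Larger catalogs typically move to an approximate nearest-neighbor index so that query time does not grow with every added product.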

Product Search Optimization

Optimizing product search with visual tools like Google Lens is transforming the way users interact with the world around them. Instead of typing out long descriptions or struggling to find the right keywords, users can simply open their camera app or upload a photo to instantly search for products, identify plants, or even recognize animals they spot in the park. The Google app brings this image search capability to Android and other supported devices, making it easier than ever to discover details about the things you see every day.

With the product search feature, users can snap a picture of a stylish outfit, a unique chair, or a piece of home decor in a friend’s apartment and quickly find similar clothes, furniture, or accessories online. The Google Lens icon within the app lets you search for products, landmarks, and even dog breeds using just your camera or an image from your gallery. This seamless integration means you can access visual search on your phone, computer, or web browser—whenever and wherever inspiration strikes.

But visual search isn’t just about shopping. The ability to copy and paste text from images means you can quickly find explainers, videos, and answers to homework questions in subjects like math, physics, history, biology, and chemistry. Whether you’re stuck on a tricky problem or want to learn more about a plant you found on a walk, the app helps you access information and learn about the world in a whole new way.

The product search feature is designed to help you check and compare products, making it easy to find the perfect item for your needs—be it furniture, home decor, or clothing. With support for multiple languages and availability in countries like the Netherlands, Google Lens ensures that users everywhere can benefit from smarter, faster product discovery.

By leveraging the power of visual search, you can refine your results, ask questions, and get answers instantly—without having to type a single word. Whether you’re looking to identify a landmark, find the perfect outfit, or solve a science question, the Google Lens app shows you how to use your camera to search, learn, and shop more efficiently. This makes visual search an essential tool for anyone who wants to make smarter decisions and navigate the world with ease.

Visual Search Use Cases (You Can Launch Today)

Here are just a few powerful ways platforms are using visual search:

- Fashion eCommerce: Let users upload a photo of an outfit and see similar styles in your catalog

- Home Decor & Furniture: Snap a picture of a chair, table, or lamp and get lookalike pieces

- Secondhand Marketplaces: Use image matching to clean duplicates and suggest pricing

- Plant/Animal Identification Apps: Recognize species and link to learning resources

- Digital Asset Management: Organize and retrieve visuals using image similarity, not filenames

You can find more real-world examples for visual search applications here.

From Illegal to Harmful: What the DSA & AI Act Mean for Content Moderation

Two key pieces of legislation, the Digital Services Act (DSA) and the Artificial Intelligence Act (AI Act), are affecting how businesses operate in the digital space. These laws introduce rigorous compliance requirements aimed at creating safer and more transparent online environments while promoting ethical AI practices. If you run an online marketplace, a social platform, or an AI-driven service, you need to know how these laws impact you - before regulators come knocking.

However, legal texts are dense, full of jargon, and nearly impossible for a business owner to digest quickly. That’s why we’ve invited Maria Catarina Batista, a legal consultant, certified data protection officer through the European Center of Privacy and Cybersecurity, and certified privacy implementer. Together with Imagga co-founder Chris Georgiev, Maria will translate the implications of the Digital Services Act and the AI Act for online marketplaces, social platforms, and AI-driven services.

The Digital Services Act: Making Online Platforms Safer

The DSA is Europe’s rulebook for making the internet safer, fairer, and more transparent. It applies to online platforms operating in the EU, including social media sites, marketplaces, and search engines. It covers platforms that offer services to users in the EU, regardless of whether the platform is established in the EU or not.

Key obligations:

- Removal of Illegal Content: The DSA requires platforms to expedite the removal of illegal content, such as hate speech, counterfeit goods, and terrorist content.

- Algorithm Transparency: Platforms must provide more transparency regarding their algorithms, especially those used for ad targeting and content recommendation.

- Very Large Online Platforms (VLOPs): Platforms with over 45 million monthly active users in the EU are classified as VLOPs and are subject to stricter obligations under the DSA.

Which businesses are affected by the DSA?

The DSA applies to any company offering intermediary services in the EU, regardless of whether they are established in the EU or not. These services are categorized into:

- Mere Conduit Services: internet service providers like Vodafone, which merely transmit data without altering it.

- Caching Services: services like Cloudflare, which temporarily store data to speed up access.

- Hosting Services: web hosts and cloud storage providers, which store user-generated content.

- Online Platforms: social media platforms, e-commerce sites, and search engines.

- Very Large Online Platforms (VLOPs): platforms with over 45 million monthly active users in the EU are classified as VLOPs and face additional scrutiny and stricter obligations.
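As a rough illustration of how these categories could be encoded in an internal compliance checklist, the sketch below maps a service to one of the tiers described above. The `Service` class, the `dsa_tier` function, and the category strings are hypothetical names chosen for this example, and the 45 million threshold is taken from the text; an actual designation is made by regulators, not by a script.

```python
from dataclasses import dataclass

# Threshold from the text above: over 45 million monthly active users in the EU.
VLOP_THRESHOLD = 45_000_000

KNOWN_KINDS = {"mere_conduit", "caching", "hosting", "online_platform"}


@dataclass
class Service:
    name: str
    kind: str  # one of KNOWN_KINDS (hypothetical labels for this sketch)
    eu_monthly_active_users: int


def dsa_tier(service: Service) -> str:
    """Return the DSA category for a service, promoting large platforms to 'vlop'."""
    if service.kind not in KNOWN_KINDS:
        raise ValueError(f"unknown service kind: {service.kind!r}")
    if (
        service.kind == "online_platform"
        and service.eu_monthly_active_users > VLOP_THRESHOLD
    ):
        return "vlop"
    return service.kind


# Made-up services for illustration only.
print(dsa_tier(Service("BigSocial", "online_platform", 50_000_000)))
print(dsa_tier(Service("NicheMarket", "online_platform", 200_000)))
print(dsa_tier(Service("FileLocker", "hosting", 60_000_000)))
```

Only online platforms are promoted to the VLOP tier here, which mirrors the list above; a hosting service with a large user base stays in its own category in this sketch.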

The AI Act: Regulating Artificial Intelligence for Safety and Transparency

The AI Act represents the world's first comprehensive regulation of artificial intelligence, aiming to ensure that AI systems work for the benefit of people, not against them. This groundbreaking legislation seeks to establish a framework that promotes safety, transparency, and accountability in the development and deployment of AI technologies.

Key Takeaways

Classification of AI systems by risk levels

Prohibited AI

The AI Act prohibits the use of AI systems that pose an unacceptable risk to individuals' rights and freedoms. Examples include social scoring systems and certain forms of biometric surveillance that infringe on privacy rights.

High-risk AI

This category includes AI systems used in critical areas such as employment (e.g., hiring tools), law enforcement, and critical infrastructure management. These systems are subject to stringent regulations, including mandatory conformity assessments and registration in an EU database.

Limited-risk AI

This category covers AI systems that are subject to transparency obligations. Examples include chatbots and deepfakes, where users must be informed that they are interacting with AI or viewing AI-generated content.

Low-risk AI

Most AI applications fall into this category, which includes systems like AI-enabled video games and spam filters. These are largely exempt from specific regulations but must comply with existing laws.
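The four risk levels above can be sketched as a simple lookup table. This is only an illustration built from the examples in this article: the `RiskTier` enum, the `EXAMPLE_TIERS` mapping, and the one-line obligation summaries are hypothetical, and classifying a real system requires legal analysis of the AI Act itself.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    LOW = "low"


# One-line summaries paraphrased from the descriptions above.
OBLIGATIONS = {
    RiskTier.PROHIBITED: "May not be used.",
    RiskTier.HIGH: "Conformity assessment and registration in an EU database.",
    RiskTier.LIMITED: "Transparency: tell users they are dealing with AI.",
    RiskTier.LOW: "No specific obligations beyond existing laws.",
}

# Example use cases named in the text, mapped to their tier.
EXAMPLE_TIERS = {
    "social scoring": RiskTier.PROHIBITED,
    "hiring tool": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "deepfake": RiskTier.LIMITED,
    "spam filter": RiskTier.LOW,
    "video game": RiskTier.LOW,
}


def tier_for(use_case: str) -> RiskTier:
    """Look up the tier for one of the example use cases (case-insensitive)."""
    try:
        return EXAMPLE_TIERS[use_case.lower()]
    except KeyError:
        raise ValueError(f"no example tier recorded for {use_case!r}") from None


for case in ("Hiring tool", "chatbot", "spam filter"):
    tier = tier_for(case)
    print(f"{case}: {tier.value} -> {OBLIGATIONS[tier]}")
```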

Obligations for Developers and Deployers

The AI Act places significant responsibilities on developers and deployers of AI systems, particularly those classified as high-risk. They must ensure that their AI systems are fair, transparent, and accountable. This includes providing detailed documentation about the system's development and operation.

Applicability

The AI Act applies to any AI system that impacts the EU, regardless of where it was developed. This means that companies outside the EU must designate authorized representatives within the EU to ensure compliance with the regulations.

Overall, the AI Act sets a new standard for AI governance globally, emphasizing the need for responsible AI development and deployment that prioritizes human well-being and safety.

The AI Act applies to:

- AI Providers: Companies that develop and sell AI solutions.

- AI Deployers: Businesses that use AI to interact with users, make decisions, or analyze data.

- Any AI System Impacting EU Citizens: The AI Act applies to any AI system that affects EU citizens, regardless of where it was developed.

In general, the DSA's scope is broader, covering all intermediary services, while the AI Act focuses specifically on AI systems impacting the EU.

Key Legal Challenges and Risks for Businesses

There are several challenges and potential risks for businesses.

Content Moderation: The Fine Line Between Compliance and Censorship

The DSA requires online platforms to remove illegal content swiftly, but this must be done without over-moderating, which could lead to accusations of censorship. This delicate balance is crucial for protecting both user rights and freedom of expression.

One effective approach is to use AI-powered moderation tools combined with human oversight. This ensures that content is reviewed accurately and that decisions are made with a nuanced understanding of context, helping to maintain user trust while complying with regulatory requirements.
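One common way to combine automation with human oversight is to route content by model confidence. The sketch below assumes a classifier that returns a violation score between 0 and 1; the `route` function and both thresholds are hypothetical values for illustration and would need tuning against real data and policy.

```python
def route(score: float, remove_at: float = 0.95, review_at: float = 0.60) -> str:
    """Decide what happens to a piece of content given a violation score.

    High-confidence violations are removed automatically, uncertain cases
    go to a human reviewer, and low scores are allowed through.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if review_at > remove_at:
        raise ValueError("review_at must not exceed remove_at")
    if score >= remove_at:
        return "auto_remove"
    if score >= review_at:
        return "human_review"
    return "allow"


# Made-up scores for three uploads.
for content_id, score in [("img-1", 0.98), ("img-2", 0.72), ("img-3", 0.10)]:
    print(content_id, route(score))
```

Widening the band between the two thresholds sends more items to human reviewers, which lowers the risk of over-moderation at the cost of more manual work.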

Transparency & Algorithm Accountability

The DSA mandates that platforms provide clear explanations about how their algorithms work, particularly those used for content recommendation and ad targeting. This transparency is essential for building trust with users and complying with regulatory demands.

Platforms must offer detailed disclosures about their algorithms, explaining how recommendations and targeted ads are generated. This not only helps users understand why they see certain content but also demonstrates compliance with the DSA's transparency requirements.

AI Bias and Explainability Issues

While the DSA does not directly address AI bias, ensuring that AI systems are fair and unbiased is vital for maintaining user trust. The broader regulatory environment, including the AI Act, emphasizes the importance of AI explainability.

Implementing AI explainability frameworks and conducting bias audits can help address these concerns. By providing insights into how AI systems make decisions, businesses can demonstrate fairness and accountability, even if the DSA does not explicitly require this for all AI systems.

Heavy Fines for Non-Compliance

Both the DSA and AI Act impose significant penalties for non-compliance, highlighting the importance of adhering to these regulations.

- DSA Fines: Violations can result in fines of up to 6% of a company's total worldwide annual turnover.

- AI Act Fines: For prohibited AI use, fines can reach up to €35 million or 7% of global turnover (whichever is higher).

- Daily Penalties: Persistent non-compliance under the DSA can lead to periodic penalties of up to 5% of average daily worldwide turnover.
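To get a feel for the scale of these caps, the figures above can be turned into a quick calculation. This is a back-of-the-envelope sketch, not legal advice: the function names are hypothetical, the turnover figures are made up, and daily turnover is approximated as annual turnover divided by 365, which is an assumption.

```python
def dsa_max_fine(annual_turnover_eur: float) -> float:
    """Upper bound for a DSA fine: 6% of worldwide annual turnover."""
    return 0.06 * annual_turnover_eur


def ai_act_prohibited_max_fine(annual_turnover_eur: float) -> float:
    """Upper bound for prohibited AI use: EUR 35 million or 7%, whichever is higher."""
    return max(35_000_000.0, 0.07 * annual_turnover_eur)


def dsa_max_daily_penalty(annual_turnover_eur: float) -> float:
    """Upper bound per day: 5% of daily turnover (annual / 365 is an assumption)."""
    return 0.05 * annual_turnover_eur / 365


# Two made-up companies: EUR 100 million and EUR 1 billion in annual turnover.
for turnover in (100_000_000, 1_000_000_000):
    print(f"Turnover: EUR {turnover:,}")
    print(f"  DSA fine cap:          EUR {dsa_max_fine(turnover):,.0f}")
    print(f"  AI Act fine cap:       EUR {ai_act_prohibited_max_fine(turnover):,.0f}")
    print(f"  DSA daily penalty cap: EUR {dsa_max_daily_penalty(turnover):,.0f}")
```

Note that for the smaller company the AI Act cap is set by the fixed EUR 35 million floor rather than the percentage, so smaller businesses face a proportionally larger exposure.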

Best Practices for Compliance with the DSA and AI Act

Ensuring compliance with the Digital Services Act (DSA) and the AI Act requires a proactive and structured approach. Here are some best practices to help businesses navigate these regulations effectively.

1. Set Up Strong Content Moderation Mechanisms

Implementing robust content moderation is crucial for compliance with the DSA. Here are some key strategies:

- User-Friendly Reporting Systems: Establish easy-to-use mechanisms for users to report illegal content. This helps ensure that platforms can respond quickly and effectively to user concerns.

- AI Moderation with Human Oversight: Utilize AI-powered moderation tools to streamline the process, but ensure that human reviewers are involved to provide context and oversight. This balance helps prevent over-moderation and ensures that decisions are fair and accurate.

- Detailed Logs for Compliance Audits: Maintain comprehensive records of moderation actions. These logs are essential for demonstrating compliance during audits and can help identify areas for improvement.
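A moderation log does not need to be complicated to be useful in an audit. The sketch below appends one JSON record per decision to a text stream; the field names and the `log_action` helper are hypothetical, and a real deployment would write to durable, access-controlled storage rather than an in-memory buffer.

```python
import io
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import TextIO


@dataclass(frozen=True)
class ModerationLogEntry:
    content_id: str
    action: str      # e.g. "removed", "allowed", "escalated"
    reason: str      # policy or legal ground for the decision
    decided_by: str  # "ai" or a reviewer identifier
    timestamp: str   # ISO 8601, UTC


def log_action(
    stream: TextIO, content_id: str, action: str, reason: str, decided_by: str
) -> ModerationLogEntry:
    """Append one moderation decision to the stream as a JSON line."""
    entry = ModerationLogEntry(
        content_id=content_id,
        action=action,
        reason=reason,
        decided_by=decided_by,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    stream.write(json.dumps(asdict(entry)) + "\n")
    return entry


# In-memory buffer standing in for a log file.
audit_log = io.StringIO()
log_action(audit_log, "post-123", "removed", "hate speech", "ai")
log_action(audit_log, "post-456", "allowed", "no violation found", "reviewer-7")

records = [json.loads(line) for line in audit_log.getvalue().splitlines()]
print(records)
```

Recording who made each decision (the AI system or a named reviewer) makes it possible to show later that human oversight was actually applied.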

2. Improve AI Transparency & Governance

Enhancing transparency and governance in AI systems is vital for compliance with the AI Act and broader regulatory expectations:

- Conduct Risk Assessments: Perform thorough risk assessments for AI systems to identify potential issues and implement mitigation strategies.

- Explain AI Decisions: Provide clear explanations of how AI decisions are made. This transparency helps build trust with users and regulators.

- Regular Bias and Fairness Audits: Regularly audit AI models for bias and fairness to ensure they operate equitably and comply with regulatory standards.
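A basic bias audit can start by comparing error rates across user groups. The sketch below computes, for each group, how often benign content was wrongly flagged (the false positive rate). The record format, group labels, and sample data are hypothetical, and false positive rate is only one of several fairness metrics a full audit would consider.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record: (group label, flagged by the model?, actually violating?)
Record = Tuple[str, bool, bool]


def false_positive_rates(records: Iterable[Record]) -> Dict[str, float]:
    """Share of benign items that the model flagged, per group."""
    benign = defaultdict(int)
    wrongly_flagged = defaultdict(int)
    for group, flagged, violating in records:
        if not violating:
            benign[group] += 1
            if flagged:
                wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / benign[group] for group in benign}


# Made-up audit sample: four benign items and one violating item per group.
sample = [
    ("group_a", True, False), ("group_a", False, False),
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", True, True),
    ("group_b", True, False), ("group_b", True, False),
    ("group_b", False, False), ("group_b", False, False),
    ("group_b", True, True),
]

rates = false_positive_rates(sample)
gap = max(rates.values()) - min(rates.values())
print(rates, f"gap={gap:.2f}")
```

A large gap between groups is a signal to investigate the training data and thresholds, not proof of unlawful bias on its own.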

3. Stay Proactive to Avoid Compliance Issues and Potential Fines

- Determine Your Business Status: Identify whether your business qualifies as a Very Large Online Platform (VLOP) under the DSA or uses high-risk AI systems under the AI Act. This classification affects the level of regulatory scrutiny and obligations.

- Maintain Detailed Compliance Records: Keep comprehensive records of compliance efforts, including moderation actions, AI audits, and risk assessments. These records are crucial for demonstrating compliance during regulatory audits.

- Internal Audits: Conduct regular internal audits to identify compliance gaps before regulators do. This proactive approach helps address issues promptly and reduces the risk of fines.

- Employee Training: Train employees on AI literacy and legal risks associated with AI and online platforms. Educated staff can help identify and mitigate compliance risks more effectively.

Why Compliance Is a Business Imperative

The DSA and AI Act are just the beginning. The EU is known for influencing global regulations (the “Brussels Effect”), meaning similar laws will likely emerge worldwide.

Key future trends to watch:

- Expanding AI regulations: New AI categories and more stringent compliance.

- Regular updates: The EU will update lists of prohibited AI systems and VLOP designations.

- Stronger penalties: Expect even harsher fines for AI misuse and data privacy breaches.

Understanding and complying with the DSA and AI Act isn’t just about avoiding fines - it’s about building trust, protecting users, and ensuring long-term business viability. As regulations evolve, businesses must stay ahead by investing in transparency, compliance, and responsible AI practices.

For digital platform owners, the choice is clear: adapt now or risk severe consequences.

How can Imagga help?